Nvidia offers sneak peek at Grace CPU Superchip, its 144-core monster

Nvidia hands out new morsels of information on Grace CPU Superchip

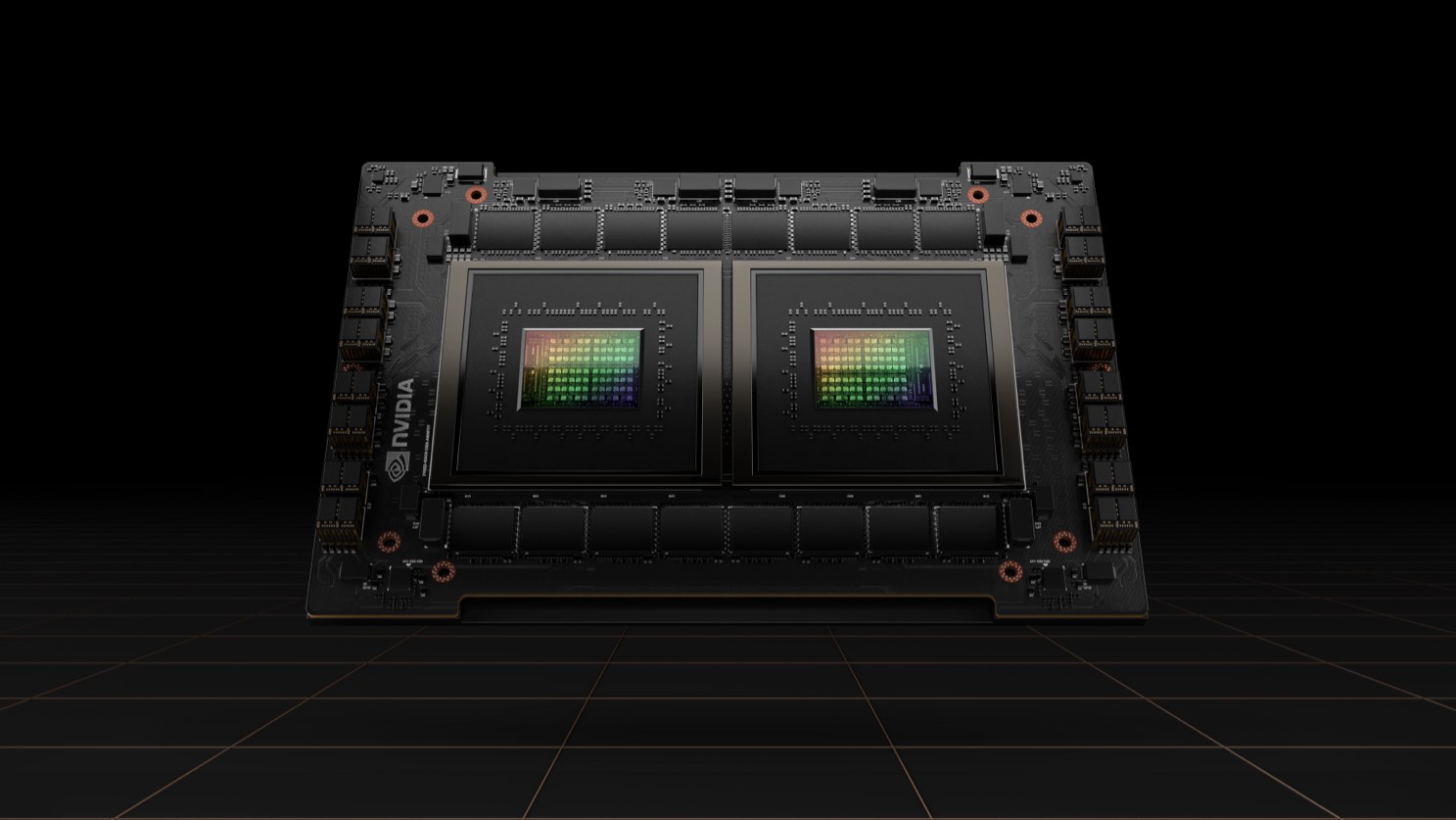

Nvidia has offered up new information about its upcoming Grace CPU Superchip, a monster 144-core server processor built to accelerate AI and HPC workloads.

Ahead of its presentation at Hot Chips 34, the company revealed the processor is manufactured on a specialized version of TSMC’s 4nm process node that’s tuned for the specific characteristics of its products.

Team Green also published power efficiency figures for its NVLink-C2C interconnect (the "bridge" that makes the dual-CPU superchip possible), which is said to consume one-fifth the power of a PCIe 5.0 interface while delivering up to 900 GB/s of throughput. The company shared other benchmark data as well.

Grace CPU Superchip

Unveiled at GTC 2022 earlier this year, the Grace CPU Superchip consists of two Grace CPUs linked by the high-speed NVLink-C2C interconnect, in a similar fashion to Apple’s M1 Ultra.

The result is a 144-core monster with 1TB/s of memory bandwidth and 396MB on-chip cache that Nvidia claims will be the fastest processor on the market for workloads ranging from AI to HPC and more.
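To put those headline numbers in perspective, the short sketch below divides the quoted figures across the two CPUs and 144 cores. It uses only the numbers given above; the function name `per_core_figures` is made up for illustration, "1TB/s" is assumed to mean 1000 GB/s, and the even per-core split is a naive average rather than a description of how the chip actually allocates cache or bandwidth.

```python
# Headline figures quoted in the article for the Grace CPU Superchip.
TOTAL_CORES = 144
CPUS_PER_SUPERCHIP = 2
MEMORY_BANDWIDTH_GB_S = 1000  # "1TB/s", assumed here to mean 1000 GB/s
ON_CHIP_CACHE_MB = 396


def per_core_figures(total_cores, cpus, bandwidth_gb_s, cache_mb):
    """Return naive per-CPU and per-core averages (hypothetical helper).

    These are simple divisions of the headline numbers, not measured values.
    """
    return {
        "cores_per_cpu": total_cores // cpus,
        "bandwidth_per_core_gb_s": bandwidth_gb_s / total_cores,
        "cache_per_core_mb": cache_mb / total_cores,
    }


figures = per_core_figures(
    TOTAL_CORES, CPUS_PER_SUPERCHIP, MEMORY_BANDWIDTH_GB_S, ON_CHIP_CACHE_MB
)
for name, value in figures.items():
    print(f"{name}: {value:.2f}")
```

On these assumptions, each Grace CPU contributes 72 cores, and the averages work out to roughly 6.9 GB/s of memory bandwidth and 2.75MB of on-chip cache per core.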

“A new type of data center has emerged - AI factories that process and refine mountains of data to produce intelligence,” said Nvidia CEO Jensen Huang, when the new chip was first announced.

“The Grace CPU Superchip offers the highest performance, memory bandwidth and Nvidia software platforms in one chip and will shine as the CPU of the world’s AI infrastructure.”

Although it remains unclear when the new superchip will be widely available, Nvidia recently revealed it will feature in a range of new pre-built servers launching in the first half of 2023. The new systems - from the likes of Asus, Gigabyte, Supermicro and others - will be based on four new 2U reference designs teased by Nvidia at Computex 2022.

The four designs are each specced out to cater for specific use cases, from cloud gaming to digital twins, HPC and AI. Nvidia says the designs can be modified easily by partners to “quickly spin up motherboards leveraging their existing system architectures”.

Nvidia’s entrance into the server CPU market is expected to further accelerate the advance of Arm-based chips, which are forecast to eat into the share of x86, the architecture on which Intel Xeon and AMD EPYC chips are based.

The adoption of Arm-based chips in the data center has also been driven by the development of custom Arm-based silicon among cloud providers and other web giants. The Graviton series developed by AWS has proven to be a great success, Chinese firm Alibaba is working on a new 128-core CPU, and both Microsoft and Meta are rumored to be developing in-house chips too.

“A lot of startups have attempted to do Arm in the data center over the years. At the beginning, the value proposition early on was about low power, but data center operators really care about performance. It’s about packing as much compute as possible into a rack,” Chris Bergey, SVP Infrastructure at Arm, told TechRadar Pro earlier this year.

“With Arm, cloud providers are finding they can get more compute, because they can put more cores in a power envelope. And we’re just at the tip of the iceberg.”

Via Tom’s Hardware

Joel Khalili is the News and Features Editor at TechRadar Pro, covering cybersecurity, data privacy, cloud, AI, blockchain, internet infrastructure, 5G, data storage and computing. He's responsible for curating our news content, as well as commissioning and producing features on the technologies that are transforming the way the world does business.