Smartwatches could use ultrasound to sense hand gestures

Seeing your intentions in your forearms

Interaction designers love hand gestures. They're simple, generally pretty easy to learn, and intuitive. That makes them very handy for devices that lack sizeable screens, like smartwatches and other wearables.

But it's not simple to develop a foolproof way for a device to recognize those gestures. Accelerometers can help, but they're not very precise unless distinct and expansive gestures are made – which most users won't want to do in public.

Enter a team of researchers from the Bristol Interaction Group at the University of Bristol in the UK. They believe that ultrasonic imaging, the same technology used in medical imaging such as pregnancy scans, could hold the key to understanding hand movement.

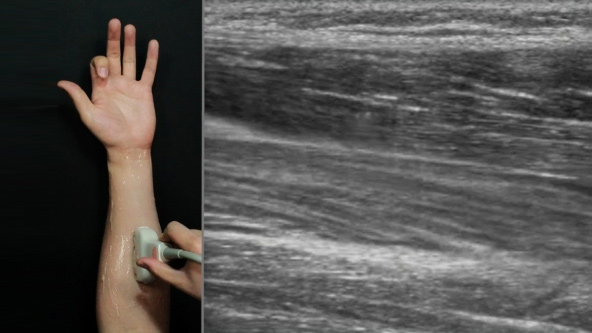

Using a handheld ultrasound scanner together with image processing algorithms and machine learning techniques, they were able to classify different muscle movements within the forearm as different gestures.

High recognition accuracy

In testing, the system showed high recognition accuracy and – importantly – the ability to deliver results while positioned at the wrist, which is handy because that tends to be where people wear smartwatches.

The only problem is that ultrasound devices are still bulky, meaning that a lot of miniaturization work will be needed before a workable prototype can be built.

"With current technologies, there are many practical issues that prevent a small, portable ultrasonic imaging sensor integrated into a smartwatch," said Jess McIntosh, a PhD student who worked on the research.

"Nevertheless, our research is a first step towards what could be the most accurate method for detecting hand gestures in smartwatches."

The team published its results in the Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems.