The technology behind CERN: the hunt for the Higgs boson

How software is helping the Large Hadron Collider

On a typical day, Dr Fabrizio Salvatore's routine goes something like this: coffee and a short briefing for two PhD candidates who work within his department at the University of Sussex, a leafy campus near Brighton.

After that, there's a similar, slightly longer guidance session with a couple of post-grad researchers, and then it's on to his other teaching duties. So far, so typically academic.

When he gets time for his own research, Dr Salvatore will begin by loading a program called ROOT onto his Ubuntu-powered laptop. ROOT is the go-to software framework for High Energy Physics (HEP) and particle analysis.

Once a week, he'll dial into a conference call to talk with collaborators on research projects from around the world. On a really good day - perhaps once in a lifetime - he'll sign a paper that finds near-certain evidence of the existence of the Higgs boson, the so-called God particle, whose associated field gives other fundamental particles their mass and thus makes reality what it is.

Dr Salvatore, you see, is one of the 3,300 scientists who work on ATLAS, a project which encompasses the design and operation of one of the main particle detectors on the Large Hadron Collider (LHC), and the analysis of its data, at the world-famous laboratory CERN, near Geneva.

"The first time I went to CERN, in 1994," says Dr Salvatore, "it was the first time that I had been abroad. My experience there - just one month - convinced me that I wanted to work in something that was related to it. I knew I wanted to do a PhD in particle physics, and I knew I wanted to do it there."

In July this year, scientists from ATLAS and their colleagues from another CERN experiment, CMS, announced they had found probable evidence of the Higgs boson, an important sub-atomic particle whose existence had been theorised for half a century but never observed. The news was hailed by none other than Professor Brian Cox as "one of the most important scientific discoveries of all time".

And - putting aside the small matter of building the LHC itself - finding the Higgs was done almost entirely with Linux. Indeed, many of the scientists we've spoken to say it couldn't have been done without it.

The level of public interest in CERN's work isn't surprising. It's impossible not to get excited by talk of particle accelerators, quantum mechanics and recreating what the universe looked like at the beginning of time, even if you don't understand what concepts such as supersymmetry and elementary particles really mean.

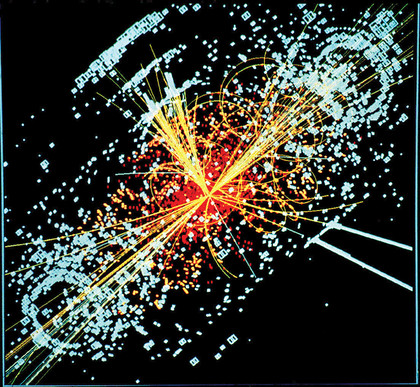

Inside the LHC, protons are fired towards each other at velocities approaching the speed of light. They race around a 17-mile underground ring beneath the Franco-Swiss border, and the sub-atomic debris of their collisions is recorded by one or more of the seven detector experiments positioned around the ring, of which ATLAS and CMS are just two.

And the incredible physics of smashing protons together is just the start of the work; it's what happens afterwards that requires one of the biggest open source computing projects in the world.

Collisions, or 'events', within the LHC produce a lot of information. Even after discarding around 90% of the data captured by its sensors, original estimates reckoned that the LHC would require storage for around 15 petabytes of data a year. In 2011, the LHC generated around 23 petabytes of data for analysis, and that figure is expected to rise to around 30PB for 2012, double the original data budget.
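To put those annual totals in perspective, the short Python sketch below converts them into the average rate at which data would have to arrive if it were spread evenly across a calendar year. It assumes decimal units (1PB = 10^15 bytes) and continuous running, neither of which matches a real accelerator schedule, so treat the results as orders of magnitude rather than measured rates.

```python
# Back-of-the-envelope conversion of the annual LHC data volumes quoted
# in the article into an average sustained rate. Assumptions: decimal
# units and data arriving evenly over a 365-day year (real running,
# with beam time and shutdowns, is far burstier).

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 s, ignoring leap years
PB = 10**15                            # bytes in a decimal petabyte


def average_rate_mb_per_s(petabytes_per_year: float) -> float:
    """Average rate in MB/s needed to accumulate the given annual volume."""
    return petabytes_per_year * PB / SECONDS_PER_YEAR / 10**6


volumes = {"original estimate": 15, "2011": 23, "2012 (expected)": 30}
for label, pb in volumes.items():
    rate = average_rate_mb_per_s(pb)
    print(f"{label:>18}: {pb:>2} PB/year ~ {rate:,.0f} MB/s")

print(f"2012 vs original budget: {30 / 15:.1f}x")
```

Run as-is, it prints roughly 476, 729 and 951 MB/s: the expected 2012 volume alone works out at close to 1GB/s, around the clock, before any copies are made for other sites.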

This winter, the accelerator will be closed down for 20 months for repairs and an upgrade, which will result in even more data being recorded at the experiment sites.

These figures are just what's being produced, of course: when physicists run tests against results they don't just work with the most recent data - an event within the collider is never taken in isolation, but always looked at as part of the whole.

Gathering data from the LHC, distributing it and testing it is a monumental task. "The biggest challenge for us in computing," explains Ian Bird, project lead for the Worldwide LHC Computing Grid (WLCG), "is how we're going to find the resources that experiments will be asking for in the future, because their data requirements and processing requirements are going to go up, and the economic climate is such that we're not going to get a huge increase in funding."

To put this into some context, though, Google processes something in the region of 25PB every day. What Google isn't doing, however, is analysing every pixel in every letter of every word it archives for the telltale signature of an unobserved fundamental particle.

"What we do is not really like what other people do," says Bird. "With video downloads, say, you have a large amount of data, but most people probably only watch the top ten files or so, so you can cache them all over the place to speed things up.

"Our problem is that we have huge data sets, and the physicist wants the whole data set. They don't want the top four gigabytes of a set, they want the whole 2.5PB - and so do a thousand other researchers. You can't just use commercial content distribution networks to solve that problem."

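Bird's point about caching can be illustrated with a toy least-recently-used (LRU) cache in Python. The two workloads are invented for illustration: one keeps requesting ten popular files, the other makes repeated full passes over a data set ten times larger than the cache. This is a sketch of the general effect, not of how the WLCG or any commercial content distribution network actually manages data.

```python
from collections import OrderedDict


class LRUCache:
    """A minimal least-recently-used cache that only counts hits and misses."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries = OrderedDict()  # keys in order of last use, oldest first
        self.hits = 0
        self.misses = 0

    def access(self, key: str) -> None:
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)  # mark as most recently used
            return
        self.misses += 1
        self.entries[key] = None
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used


def hit_rate(requests: list, capacity: int) -> float:
    cache = LRUCache(capacity)
    for key in requests:
        cache.access(key)
    return cache.hits / len(requests)


# Video-style workload: 1,000 requests spread over the same ten popular files.
popular = [f"video{i % 10}" for i in range(1000)]
# Physics-style workload: ten full passes over a hypothetical 1,000-file data set.
full_scans = [f"file{i}" for _ in range(10) for i in range(1000)]

print(f"popular files, 100-slot cache: {hit_rate(popular, 100):.0%}")
print(f"full data set, 100-slot cache: {hit_rate(full_scans, 100):.0%}")
```

With the popular-file workload 99% of requests are hits, while the full-scan workload scores 0%: each file is evicted long before the next pass returns to it, so a cache smaller than the data set never helps.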
Paolo Calafiura is the chief architect of the ATLAS experiment software, and has been working on the project since 2001. Perceptions of its scale have changed a lot in that time. "We used to be at the forefront of 'big data'," Calafiura says.

"When I first said we would buy 10PB of data a year, jaws would drop. These days, Google or Facebook will do that in any of their data centres without breaking a sweat. In science, we are still the big data people though."

A coder by trade, Calafiura also has a long background in physics work. Prior to ATLAS, he helped to write the GAUDI Framework, which underpins most HEP applications, particularly those at CERN.

The GAUDI vision was to create a common platform for physics work in order to facilitate collaborations between scientists around the world. Prior to GAUDI, Calafiura explains, most analysis software was written on an ad hoc basis in FORTRAN. By moving to a common object-oriented framework for data collection, simulation and analysis written in C++, the team Calafiura worked for laid the foundations for the huge global collaborations that underpin CERN's work.

"The GAUDI framework is definitely multi-platform," says Calafiura. "In the beginning, ATLAS software was supported on a number of Unix platforms and GAUDI was - and is still - supported on Windows. Some time around 2005, we switched off a Solaris build [due to lack of interest], and before that a large chunk of the hardware was running HP-UX. But the servers moved to Linux, and everyone was very happy.

"Right now," Calafiura continues, "we're a pure Linux shop from the point of view of real computing and real software development. There's a growing band of people pushing for Mac OS to be supported, but it's done on a best-effort basis."

According to Calafiura, Apple notebooks are an increasingly common sight among delegates at HEP conferences, but it's rare to spot a Windows Start orb. "With my architect heart," he admits, "I'm not happy that we're pure Linux, because it's easier to see issues if you're multi-platform. But being purely Linux allows us to cut some corners. For example, we were having a discussion about using a non-POSIX feature of Linux called splice, which is a pipe where you don't copy data, and it increases the efficiency of our computing."

Open-source collaboration

Around 10,000 physicists across the world work on CERN-related projects, two-thirds of whom are attached to the largest experiments, ATLAS and CMS. Analysing the data produced by the LHC is a challenge on multiple levels.

Getting results out to scientists involves massive data transfers. Then there's the question of processing that data. Reliability, too, is key - if a server crashes halfway through even a 48-hour job, restarting it costs precious time and resources.

The compute and storage side of CERN's work is underpinned by a massive distributed computing project, one of the world's most widely dispersed and powerful grids. Exact terminologies and implementations of the WLCG vary between the 36 countries and 156 institutions involved, but essentially it's a tiered network of access and resources.

At the centre of the network is CERN, or Tier 0 (T0), which has more than 28,000 logical CPUs to offer the grid. T0 is where the raw experimental data is generated.

Connected to T0 - often by 10Gbps fibre links - are the Tier 1 (T1) sites, usually based at major national HEP laboratories, which act as regional hubs. In most cases, the T1s will mirror the same raw data produced at CERN.
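A quick calculation shows why those dedicated links matter. The Python sketch below estimates how long one 2.5PB data set, the size Bird quotes, would take to cross a single 10Gbps link. The `efficiency` parameter is an assumption added for illustration, since no real link sustains its full nominal rate around the clock.

```python
def transfer_days(petabytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Days needed to move a data set over one link of the given nominal speed.

    efficiency is the assumed fraction of nominal bandwidth actually achieved
    (1.0 means a perfectly saturated link, which real links never manage).
    """
    bits = petabytes * 10**15 * 8          # decimal petabytes -> bits
    seconds = bits / (link_gbps * 10**9 * efficiency)
    return seconds / 86_400                # seconds -> days


print(f"2.5PB over one 10Gbps link, ideal:  {transfer_days(2.5, 10):.1f} days")
print(f"2.5PB over one 10Gbps link at 70%: {transfer_days(2.5, 10, 0.7):.1f} days")
```

At full line rate the transfer takes about 23 days (2 × 10^6 seconds); at an assumed 70% efficiency, about 33 days. That is one copy over one link, which helps explain why the grid spreads data across many sites and links working in parallel.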

Beneath the T1 sites are the Tier 2 (T2) data centres. As a rule, these will be attached to major universities with the space, racks and at least one dedicated member of staff to spare to maintain them. Links between T1 and T2 sites generally run over the national academic network backbones (JANET in the UK), so they are high-speed but shared with other traffic.

Not all the research data is stored locally. Rather, subsets that are of particular interest to a certain institution are stored permanently, while other data is fetched from the T1s as required.

The Tier 3 (T3) institutions tend to be the smaller universities and research centres that don't require dedicated local resources, but will download data as required from the T2 network and submit jobs for processing on the grid.
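The tier structure described above can be modelled as a chain of sites, each of which serves data it holds locally and otherwise asks the tier above it. The Python sketch below is a deliberately simplified, hypothetical model: the site and data set names are invented, and real WLCG data placement is handled by dedicated data-management services rather than a recursive lookup like this.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Site:
    """One grid site in a toy model of the WLCG tiers (not real WLCG software)."""
    name: str
    tier: int
    datasets: set = field(default_factory=set)  # data held locally
    parent: Optional["Site"] = None              # the site it fetches from

    def fetch(self, dataset: str) -> list:
        """Return the chain of sites consulted to find dataset, nearest first."""
        if dataset in self.datasets:
            return [self.name]
        if self.parent is None:
            raise KeyError(f"{dataset} not found anywhere on the grid")
        return [self.name] + self.parent.fetch(dataset)


# Hypothetical sites: T0 produces raw data, a T1 mirrors it, a T2 keeps a
# subset of local interest, and a T3 holds nothing and downloads as needed.
t0 = Site("CERN", 0, {"raw-2011", "raw-2012"})
t1 = Site("T1 hub", 1, {"raw-2011", "raw-2012"}, parent=t0)
t2 = Site("T2 university", 2, {"higgs-subset"}, parent=t1)
t3 = Site("T3 group", 3, set(), parent=t2)

print(t3.fetch("higgs-subset"))
print(t3.fetch("raw-2011"))
```

A request for data of local interest stops at the T2, while anything else travels up to the T1 mirror; CERN itself only needs to be consulted for data no lower tier holds.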

There are several online real-time dashboards for WLCG, so anyone can go in and visualise the state of the network (http://dashb-wlcg-transfers.cern.ch/ui/, https://netstat.cern.ch/monitoring/network-statistics/visual/?p=ge and http://wlcg-rebus.cern.ch/apps/topology are the most comprehensive).

There are nearly 90,000 physical CPUs attached to WLCG at the present time, offering 345,893 logical cores. Total grid storage capacity is over 300PB.

In the UK, the national WLCG project is known as GridPP, and it's managed out of the University of Oxford by Pete Gronbach. According to the network map - gridpp.ac.uk - there are more than 37,000 logical CPU cores attached to GridPP, which act as a single resource for scientists running CERN-based analytics.

"We have constant monitoring of the system to make sure all our services are working properly," Gronbach explains. "If you fail any of the tests, it goes to a dashboard, and there are monitors on duty who look at these things and generate trouble tickets for the sites. There's a memorandum of understanding that the site will operate at certain service levels in order to be part of the grid, so they have to have a fix within a certain time. It's quite professional really, which you may not expect from a university environment. But this is not something we're playing at."

One of the requirements for a T2 site is a full-time member of staff dedicated to maintaining the grid resources. System administrators across the WLCG also hold weekly meetings via video or audio conference bridges to ensure everything is running correctly and that updates are applied in a timely fashion throughout the grid.

Crucially, using Linux allows HEP centres to keep costs down, since they can use more or less entirely generic components in all the processing and storage networks. CERN is a huge public investment of more than one billion euros a year, so it has to deliver value for money. Using Linux also means that CERN can sponsor open source software such as Disk Pool Manager (DPM), which is used for looking after storage clusters.

As you might expect from the organisation that gave humanity the World Wide Web, it's very much aware of the mutual benefits of shared development. "GridPP has evolved over the last 10 years," says Gronbach, "but a lot of the batch systems have remained the same - we use TORQUE and Maui, which is based on PBS. One or two sites use Grid Engine, but there's less support for that. Things like that have remained fairly constant through the years. Other bits of the software, like the Computing Element, which is the bit that sits between users' jobs coming in and sending them to our batch system, have gone through many generations, and we update it every six months or so."

According to Calafiura, multicore processing has been the single most important improvement to the grid. "With multicore," he says, "we've been able to use a very Linux-specific trick - which is fork and copy-on-write - to run eight or 16 copies of the same application by forking it from the mother process, and save a factor of two in memory by doing it."
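The trick relies on how fork() behaves on Linux and other Unix-like systems: a child process starts with the parent's memory mapped copy-on-write, so pages are only duplicated if one side modifies them. The Python sketch below is a hypothetical stand-in for that pattern, not ATLAS code: a "mother" process loads a large read-only blob once, then forks eight workers that only read it and send a result back through a pipe. The blob, the `analyse` function and the worker count are all invented for illustration.

```python
import os

# Hypothetical stand-in for large read-only data (geometry, conditions, ...)
# that a job loads once: 51.2MB in which every byte value appears 200,000 times.
SHARED_BLOB = bytes(range(256)) * 200_000


def analyse(worker_id: int) -> int:
    """Toy 'analysis' that only reads SHARED_BLOB, so its pages stay shared."""
    return SHARED_BLOB.count(worker_id)


def run_forked(n_workers: int) -> list:
    """Fork n_workers children from this 'mother' process.

    Each child inherits SHARED_BLOB copy-on-write and sends its result back
    to the parent through its own pipe.
    """
    children = []
    for worker_id in range(n_workers):
        read_end, write_end = os.pipe()
        pid = os.fork()
        if pid == 0:  # in the child
            os.close(read_end)
            os.write(write_end, str(analyse(worker_id)).encode())
            os.close(write_end)
            os._exit(0)  # leave immediately, skipping the parent's cleanup code
        os.close(write_end)  # the parent keeps only the read end
        children.append((pid, read_end))

    results = []
    for pid, read_end in children:
        chunks = []
        while chunk := os.read(read_end, 4096):
            chunks.append(chunk)
        os.close(read_end)
        os.waitpid(pid, 0)  # reap the child so it does not linger as a zombie
        results.append(int(b"".join(chunks)))
    return results


if __name__ == "__main__":
    print(run_forked(8))
```

Each worker should report 200000. A single `bytes` object is used deliberately: CPython's reference counting writes to an object's header whenever it is touched, which would force page copies for a structure built from millions of small objects, whereas the data buffer of one `bytes` object stays shared across all eight children.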

A major upgrade is currently taking place across GridPP to install Scientific Linux 6 (SL6) and the latest version of Lustre for storage file systems. Scientific Linux is co-maintained by CERN and the US laboratory Fermilab.

At the time of writing, SL6 is running on 99,365 machines. This is slightly lower than the norm, probably because it's the summer vacation in most northern hemisphere countries.

SL6 is a rebuild of Red Hat Enterprise Linux, chosen for its rock-solid stability. Not all researchers use Scientific Linux, and all the tools vital for CERN work are compatible with other distros - Ubuntu is still a popular choice. But all servers connected to the WLCG run SL5 or above.

"What we've all got good at over the last 10 years is automating installations and administrating these systems," Gronbach continues. "So we can install systems using PXE boot, Kickstart, CFEngine or Puppet to reinstall a node quickly and remotely, because the computer centres aren't always at the university either."

Keeping hardware generic and using well-established FOSS also makes it easy for more centres to come online and offer resources to the grid. The University of Sussex became a T2 centre in June. Sussex has been affiliated with the ATLAS project since 2009, when Dr Salvatore and Dr Antonella De Santo joined from Royal Holloway - another T2 - and established an ATLAS group.

Thanks to their work, Sussex has won funding which has enabled Dr Salvatore, Dr De Santo and the university's IT department to add a 12-rack data centre in a naturally cooled unit with 100 CPUs and 150TB of storage, costing just £80,000.