The immersive audio you've never heard that could revolutionize virtual reality

A bevy of new formats battle it out

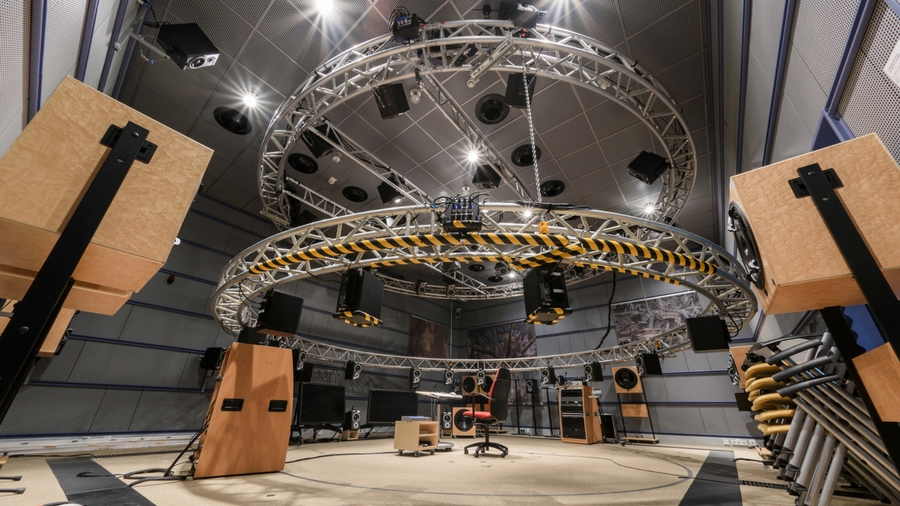

Image credit: Dirac Research

Surround sound is history. It may have been considered cutting-edge a decade ago, but with most music and video now consumed on mobile phones, the fight is on for audio … that moves.

Built around a 360-degree sphere, so-called immersive or spatial audio tech is being designed by the likes of Dirac Research, DTS, Dolby and THX primarily for virtual reality (VR) headsets, but who can ignore the world’s 2.5 billion smartphones? The race is on to produce the definitive format for 3D audio.

What is immersive audio?

Designed primarily for VR, but also for mobile devices, immersive audio has three parts to it.

The first is channels. Home cinemas use a 5.1 system with front left, center, front right, left surround and right surround speakers plus a subwoofer, and immersive audio initially builds on that same framework. The difference is that it can now mimic an 11.1 or larger array.

The second part of immersive audio is ambisonics.

“Ambisonic signals are scene-based audio elements that describe not individual sources (like channel-based or object-based formats), but rather the sound field as a whole from one point in space,” says Julien Robilliard, product manager at Fraunhofer IIS, which invented the mp3 and AAC codecs.

Immersive sound can be produced using the head-related transfer function (HRTF), where binaural stereo microphones are placed in the ears of a dummy head and external sounds recorded to create a 'head-print' profile (in the future, we might all get sound personalized to the shape of our head and face).
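In the time domain, an HRTF for one direction is a pair of head-related impulse responses (HRIRs), one per ear, and a basic binaural renderer convolves each source with the pair for its direction. The Python sketch below shows that step with toy, made-up HRIRs; real ones are measured with a dummy-head setup like the one above and are typically hundreds of samples long. The function names are hypothetical.

```python
def convolve(signal: list[float], impulse_response: list[float]) -> list[float]:
    """Direct-form linear convolution, returning the full-length output."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def binauralize(mono: list[float], hrir_left: list[float],
                hrir_right: list[float]) -> tuple[list[float], list[float]]:
    """Filter a mono source through the left- and right-ear HRIRs for its direction."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy HRIRs (illustrative only) for a source off to the listener's right:
# the far, left ear hears it two samples later and more quietly.
hrir_left = [0.0, 0.0, 0.6, 0.1]
hrir_right = [1.0, 0.2]
left, right = binauralize([1.0, 0.5], hrir_left, hrir_right)
```

Swapping in a different HRIR pair as a source moves is what lets headphone audio place sounds in front of, behind, above or below the listener rather than only between the ears.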

However, binaural sound is merely clever stereo, and best suited to headphones. For true 360-degree 'ambisonic' recordings suited to loudspeakers, an ambisonic microphone captures audio with four capsules pointing in different directions.
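To make the idea of describing "the sound field as a whole" more concrete, here is a minimal Python sketch of first-order ambisonic encoding: a single mono source at a known direction becomes four signals, W (omnidirectional) plus X, Y and Z (front-back, left-right and up-down). This four-channel B-format is what a four-capsule ambisonic microphone's signals are usually converted into. The function name is hypothetical, and the sketch assumes the traditional Furse-Malham (FuMa) weighting; other conventions, such as AmbiX, scale W differently.

```python
import math


def encode_first_order(sample: float, azimuth_deg: float,
                       elevation_deg: float) -> tuple[float, float, float, float]:
    """Encode one mono sample into first-order B-format (W, X, Y, Z).

    Assumes FuMa weighting, azimuth measured anticlockwise from straight
    ahead (positive = left) and elevation measured upwards from the horizon.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2)                 # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)  # front-back figure-of-eight
    y = sample * math.sin(az) * math.cos(el)  # left-right figure-of-eight
    z = sample * math.sin(el)                 # up-down figure-of-eight
    return w, x, y, z


# A source directly to the listener's left, on the horizon:
w, x, y, z = encode_first_order(1.0, 90.0, 0.0)
print(round(w, 3), round(x, 3), round(y, 3), round(z, 3))  # 0.707 0.0 1.0 0.0
```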

The third part of immersive audio is audio objects.

An audio object is a mono track accompanied by metadata that specifies the exact position of that sound. “With VR you want to have the sounds that immerse you in the scene that can be reproduced from coming from any direction,” says Robilliard.
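As a rough illustration of "a mono track plus metadata", the sketch below models an audio object in Python and renders it to two channels with constant-power panning. It is a simplified stand-in, not how any of the formats in this article render objects: real renderers use HRTFs or full loudspeaker layouts and take elevation into account, while this one reads only the azimuth. The class and field names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class AudioObject:
    """A mono track plus positional metadata (field names are hypothetical)."""
    samples: list[float]
    azimuth_deg: float          # 0 = straight ahead, +90 = hard left
    elevation_deg: float = 0.0  # carried as metadata but ignored below


def render_stereo(obj: AudioObject) -> tuple[list[float], list[float]]:
    """Render one object to two channels with constant-power panning.

    The azimuth is clamped to -90..+90 degrees, treating the two channels
    as if they sat at hard right and hard left; elevation is ignored.
    """
    az = max(-90.0, min(90.0, obj.azimuth_deg))
    # Map -90..+90 degrees onto a pan angle of 0..pi/2 (right..left).
    pan = (az + 90.0) / 180.0 * (math.pi / 2)
    gain_left, gain_right = math.sin(pan), math.cos(pan)  # gains^2 sum to 1
    left = [s * gain_left for s in obj.samples]
    right = [s * gain_right for s in obj.samples]
    return left, right


voice = AudioObject(samples=[0.5, -0.5], azimuth_deg=0.0)
left, right = render_stereo(voice)
```

Because the position lives in metadata rather than being baked into channels, the same object can be handed to a different renderer (headphones, soundbar, full speaker array) without remixing.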

Why is immersive audio important?

“The sound in any immersive content experience plays an equally important – and often overlooked – role as the visuals in transporting the viewer into the action," says Canaan Rubin, Director of Production and Content at VR and AR production company Jaunt.

Jaunt uses ambisonic microphones fitted around the set to capture sound in the round authentically. "In playback of our 360 content, audio technologies such as Dolby Atmos for VR, DTS Headphone:X, and the recently unveiled new version of Dirac VR all offer exclusive audio formats enhanced by HRTFs (head-related transfer functions) to provide a truly 3D sound experience," says Rubin.

Why is HRTF so important?

"Without it, headphone-based audio cannot accurately render sound sources that originate from the top, bottom, front, or back of the subject, leaving your experience limited to the left-right plane," says Rubin. "This can occur due to the proximity of headphone speakers to your eardrum, which negates the physical and psychological effects of hearing sound in a room."

However, there are several rendering and processing technologies for delivering immersive audio to devices, and each has its own strengths.

Dirac VR explained

Although most of us are familiar with Dolby, DTS and THX, Swedish firm Dirac Research is a comparatively small but rapidly growing company.

Fresh from putting its tech inside Xiaomi’s Mi AI smart speaker in early 2018, Dirac used the recent MWC to give TechRadar a demo of the second generation of its Dirac VR tech for headphones.

It places sound all around you in a sphere, but its key trick is that the mix responds as you move your head. That’s crucial because if you wear a VR headset, you need each sound to stay in the same place in the virtual scene, which means everything in the mix has to change position relative to your ears in real time.

This is dynamic positioning, which creates a 360-degree audio sphere where sound moves freely in all directions. It's incredibly impressive.

It can be used, for example, to create a sound stage where the band you're listening to appears to be in front of you. When you turn your head to the right, the band becomes louder in your left ear. If you tilt your head upwards, the sound moves downwards in the mix. It can also be used to mimic the experience of being in a cinema.
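Head-tracked playback of this kind is often implemented by counter-rotating the sound field against the head-tracker's readings. The Python sketch below assumes a first-order B-format frame (W, X, Y, Z) and handles head yaw only; tilting the head, as in the example above, would need a full 3D rotation. It is not a description of Dirac VR's internals, which this article doesn't cover, and the function name is hypothetical.

```python
import math


def rotate_for_head_yaw(w: float, x: float, y: float, z: float,
                        head_yaw_deg: float) -> tuple[float, float, float, float]:
    """Counter-rotate a first-order B-format frame so sources stay fixed in the world.

    Assumes azimuth is measured anticlockwise (positive = left), so a positive
    head_yaw_deg means the listener has turned to the left. W and Z are
    unaffected by a rotation about the vertical axis.
    """
    yaw = math.radians(head_yaw_deg)
    x_rot = x * math.cos(yaw) + y * math.sin(yaw)
    y_rot = -x * math.sin(yaw) + y * math.cos(yaw)
    return w, x_rot, y_rot, z


# A band straight ahead (X = 1, Y = 0); the listener turns 90 degrees to the right.
w, x, y, z = rotate_for_head_yaw(0.707, 1.0, 0.0, 0.0, head_yaw_deg=-90.0)
print(round(x, 3), round(y, 3))  # 0.0 1.0 -> the band is now on the listener's left
```

In a real player this rotation would run for every audio block using the latest head-tracker reading, before the rotated field is decoded to headphones.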

“By fixing sound sources in the horizontal plane, virtual environments such as movie theaters can be recreated with pinpoint accuracy – as both the end-user and the audio sources remain in static locations,” says Lars Isaksson, Dirac Research General Manager & Business Director of AR/VR.

Isaksson continues: “Our second-generation Dirac VR, however, places each user at the center of an ‘audio sphere’, thereby allowing users to experience, for example, the sound of wind whipping as it swirls around one’s head or an airplane arriving and departing on a tarmac.”

Most critically, though, Dirac VR has a small CPU and memory footprint, so it works well in small devices like phones.

"While Dirac’s technology is lesser known, it promises highly efficient CPU performance considering the HRTF processing and reverberation engine it contains," says Rubin.

Sound for gamers

Launched at MWC 2018, DTS Headphone:X 2.0 virtualizes stereo sound and transforms it into surround sound.

It's designed with gamers in mind. The new version includes proximity cues and support for channel-, scene- and object-based audio.

DTS also has DTS:X Ultra, which adds support for ambisonics and audio objects, and critically can be listened to over speakers as well as through headphones; it's aimed at VR and AR gaming.

"What's unique about DTS Headphone:X 2.0 is the way we've written the algorithms, customized the HRTF, and used our vast library of tuning curves from over 400 pairs of headphones,” says Rachel Cruz, Director of Product Marketing for Mobile and VR/AR at Xperi, which owns the DTS brand. “They give a competitive advantage because sometimes it's the audio cue that tells your eyes where to look, and often you get them before a visual cue.”

It also offers a highly customizable sound stage. "DTS:X allows the sound of individual objects to be boosted manually if you’re having a hard time hearing a given object, such as dialogue, relative to the rest of the sound stage," says Rubin.
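DTS doesn't spell out here how that boost is applied, but conceptually an object-based mix can expose a gain per object before everything is summed. The Python sketch below assumes exactly that: each object is a list of samples, the listener supplies gains in decibels, and any object without a setting stays at 0 dB. All names are hypothetical, and the sketch assumes at least one object and equal-length tracks.

```python
def db_to_linear(gain_db: float) -> float:
    """Convert a gain in decibels to a linear amplitude factor."""
    return 10.0 ** (gain_db / 20.0)


def mix_objects(objects: dict[str, list[float]],
                user_gains_db: dict[str, float]) -> list[float]:
    """Sum equal-length object tracks, applying each listener-chosen gain (default 0 dB)."""
    length = len(next(iter(objects.values())))
    mix = [0.0] * length
    for name, samples in objects.items():
        gain = db_to_linear(user_gains_db.get(name, 0.0))
        for i, s in enumerate(samples):
            mix[i] += gain * s
    return mix


objects = {"dialogue": [0.2, 0.2], "music": [0.5, -0.5], "effects": [0.1, 0.0]}
mix = mix_objects(objects, {"dialogue": 6.0})
```

A +6 dB setting multiplies the dialogue's amplitude by roughly 2 relative to the other objects, the kind of adjustment Rubin describes; a channel-based mix, where dialogue is already blended into the speaker feeds, can't isolate it this cleanly.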

Dolby Atmos for VR, MPEG-H and Cingo

Although it gets a lot of press, Dolby Atmos is technically hard to pin down because Dolby doesn’t make the technologies inside it public.

Although it's positioned more towards traditional surround sound and cinema sound, Dolby Atmos for VR also deals in spatial sound. "Atmos offers auralisation and spatialisation of up to 128 objects simultaneously," explains Rubin.

Germany’s Fraunhofer IIS, known for the mp3, now has a codec for handling immersive audio: MPEG-H Audio. Although the ‘H’ doesn’t stand for anything in particular, think of it as meaning height.

“This codec supports the delivery of channels, audio objects and ambisonics to TVs, soundbars, as well as mobile and VR devices,” says Julien Robilliard, product manager at Fraunhofer IIS.

MPEG-H has been used in South Korea as part of terrestrial 4K broadcasts since May 2017, and Samsung TVs on sale there can decode it. THX and Qualcomm just demoed the THX Spatial Audio Platform using MPEG-H.

So what happens when an MPEG-H bitstream reaches a pair of headphones? “That’s where Cingo comes in,” says Robilliard. “It’s a binaural renderer that tricks the brain into thinking that the sounds are going from outside of the headphones.”

While Cingo supports rendering of fully immersive 3D audio content, including formats that add a height dimension, it’s MPEG-H that’s got the biggest future. “MPEG-H is our core business, and it’s the codec that allows all of these technologies – Dirac, Atmos, Cingo and DTS – to exist,” says Robilliard.

MPEG-H is currently the only codec specified by the VR Industry Forum guidelines, but it’s not just for VR; it can deliver anything from mono, stereo, binaural, 5.1 and 11.1 signals right up to dynamic immersive audio to any compatible device.

Though they probably won’t go mainstream until VR headsets begin to sell in bigger numbers, immersive audio formats are only half the story, with MPEG-H destined to play a critical role. Says Robilliard: “If you don’t get the signals into your home, there’s no point in making magic happen.”

This article has been updated, after some clarifications from Fraunhofer IIS.


Jamie is a freelance tech, travel and space journalist based in the UK. He’s been writing regularly for Techradar since it was launched in 2008 and also writes regularly for Forbes, The Telegraph, the South China Morning Post, Sky & Telescope and the Sky At Night magazine as well as other Future titles T3, Digital Camera World, All About Space and Space.com. He also edits two of his own websites, TravGear.com and WhenIsTheNextEclipse.com that reflect his obsession with travel gear and solar eclipse travel. He is the author of A Stargazing Program For Beginners (Springer, 2015),