What is a LiDAR scanner, the iPhone 12 Pro's camera upgrade, anyway?

Apple thinks the LiDAR scanner is the iPhone 12's secret weapon

The iPhone 12's camera specs have finally been unveiled, and we now know that the iPhone 12 Pro range uses the new LiDAR scanner on the back. That's right, it's the same mysterious dot that first appeared on the iPad Pro 2020.

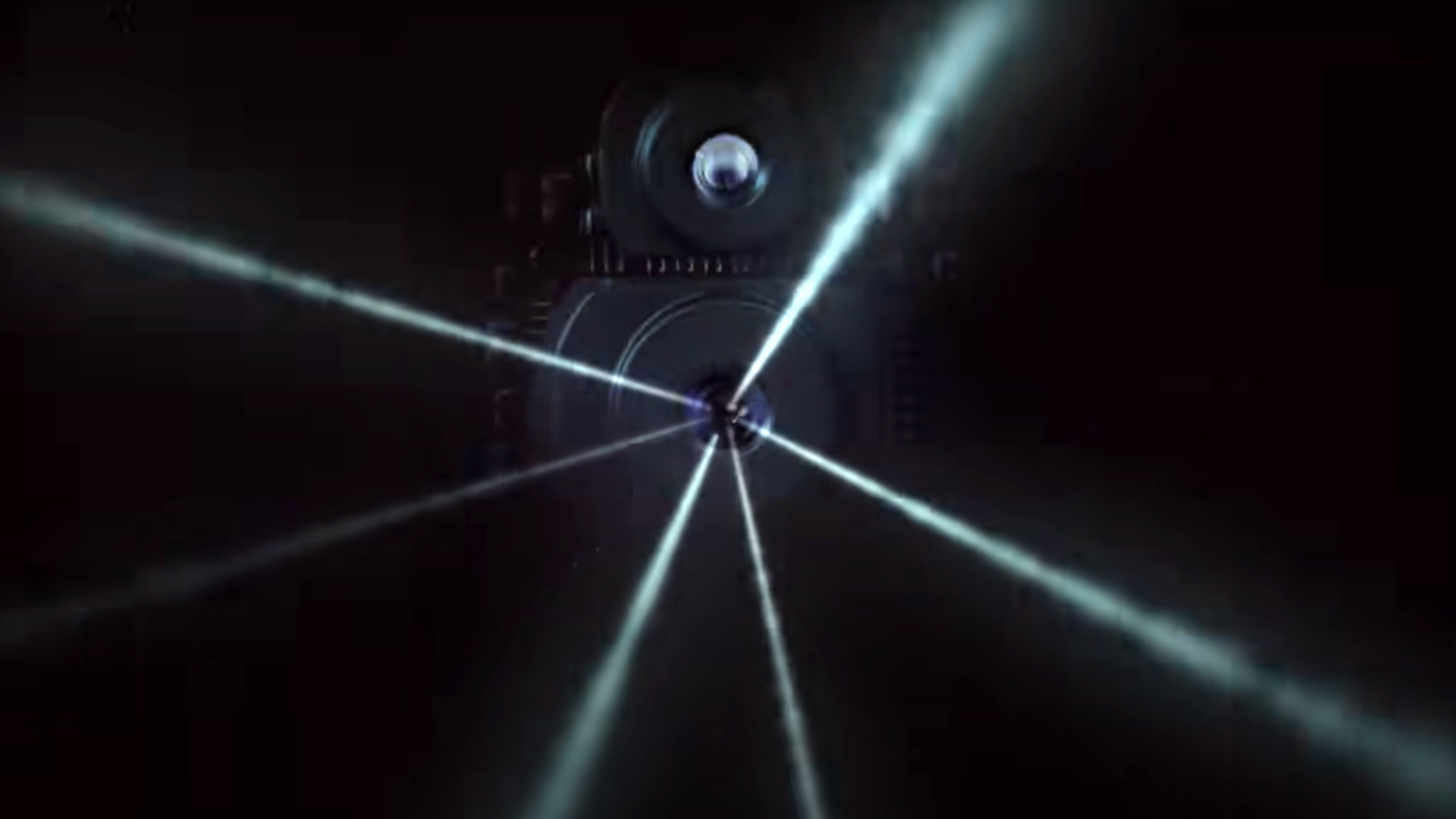

But what is a LiDAR scanner? A built-in lie detector? A more relaxed version of radar perhaps? As we'll discover, LiDAR (or 'Light Detection and Ranging') does work in a similar way to radar, only it uses lasers to judge distances and depth. This is big news for augmented reality (AR) and, to a lesser extent, photography too.

The more interesting question, though, is what LiDAR will let us do on the iPhone 12 Pro. Drawing on our experience with the tech on the iPad Pro 2020, we can explore the kind of experiences LiDAR could open up on the new iPhones and, ultimately, on the Apple Glasses.

But first, a quick rewind to the tech's origins, so you can sound smart during your next family Zoom meeting...

What is LiDAR?

The concept behind LiDAR has been around since the 1960s. In short, the tech lets you scan and map your environment by firing out laser beams, then timing how long they take to bounce back. It's a bit like how bats 'see' with sound waves, only with lasers, which makes it even cooler than Batman's Batarang.
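For the curious, the arithmetic behind that timing trick is simple: a pulse travels to the target and back, so the distance is the speed of light multiplied by the round-trip time, divided by two. The Python sketch below illustrates that textbook formula rather than Apple's own code; the function names are our own, and it assumes light travels at its vacuum speed (air slows it by less than 0.03%, which we ignore).

```python
# Speed of light in a vacuum, in meters per second (exact by SI definition).
# Assumption: we ignore the tiny slowdown caused by air.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Return the one-way distance in meters for a pulse's round-trip time.

    The pulse goes out to the target and comes back, so the one-way
    distance is half of (speed of light x elapsed time).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Inverse of the above: how long a pulse takes to return from distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    t = round_trip_for_distance(5.0)
    print(f"5 m target -> round trip of {t * 1e9:.1f} ns")  # prints 33.4 ns
```

A target five meters away, roughly the range the iPhone's scanner works at, sends its echo back in about 33 nanoseconds, which is why LiDAR hardware needs extremely precise timing.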

Like most futuristic tech, it started life as a military tool on planes, before becoming better known as the system that allowed the Apollo 15 mission to map the surface of the moon.

More recently, LiDAR (also known as lidar) has been seen on self-driving cars, where it helps detect objects like cyclists and pedestrians. You might have also unwittingly come across the tech in one of the best robot vacuums.

But it's in the past couple of years that LiDAR's possibilities have really opened up. As the systems have become smaller, cheaper and more accurate, they've started to become viable additions to mobile devices that already have things like powerful processors and GPS: tablets and phones.

Of course, not all LiDAR systems are created equal. Until fairly recently, the most common types built 3D maps of their environments by physically sweeping around in a similar way to a radar dish.

This obviously won't cut it on mobile devices, so newer LiDAR systems, including the 3D time-of-flight (ToF) sensors seen on many smartphones, are solid-state affairs with no moving parts. But what's the difference between a time-of-flight sensor and the LiDAR 'scanner' used on the iPhone 12 Pro?

What's different about Apple's LiDAR scanner?

You might already be familiar with the time-of-flight (ToF) sensors seen on many Android phones – these help them sense scene depth and mimic the bokeh effects of larger cameras.

But the LiDAR system used in the iPhone 12 Pro and iPad Pro 2020 promises to go beyond this. That's because it's a LiDAR scanner, rather than the 'scannerless' systems seen on smartphones so far.

The latter use a single pulse of infrared light to create their 3D maps, whereas a scanning LiDAR system fires a train of laser pulses at different parts of a scene over a short period of time.
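To make the 'train of pulses' idea more concrete, here's a hypothetical Python sketch of how a scanning system could turn many individual pulses into a 3D point cloud. Each pulse is aimed in a known direction (azimuth and elevation angles), its round-trip time gives the distance, and basic trigonometry converts that into an x, y, z point. The 3 x 3 grid of directions and the simulated flat wall are made-up stand-ins for real hardware, and the sketch doesn't reflect the actual firing pattern or resolution of Apple's scanner.

```python
import math
from typing import Callable, Iterable, List, Optional, Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

Point = Tuple[float, float, float]


def pulse_to_point(azimuth_rad: float, elevation_rad: float,
                   round_trip_s: float) -> Point:
    """Convert one returned pulse into an (x, y, z) point in meters.

    The sensor sits at the origin looking along +z; azimuth swings the beam
    left/right and elevation tilts it up/down.
    """
    r = SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0  # one-way distance
    x = r * math.cos(elevation_rad) * math.sin(azimuth_rad)
    y = r * math.sin(elevation_rad)
    z = r * math.cos(elevation_rad) * math.cos(azimuth_rad)
    return (x, y, z)


def scan(directions: Iterable[Tuple[float, float]],
         measure: Callable[[float, float], Optional[float]]) -> List[Point]:
    """Fire one pulse per direction in turn and collect the returned points.

    `measure` is a hypothetical stand-in for the hardware: it takes
    (azimuth, elevation) and returns the round-trip time in seconds,
    or None if no echo came back.
    """
    cloud: List[Point] = []
    for az, el in directions:
        t = measure(az, el)
        if t is not None:
            cloud.append(pulse_to_point(az, el, t))
    return cloud


def flat_wall_round_trip(az: float, el: float, wall_z: float = 2.0) -> float:
    """Hypothetical target: a flat wall wall_z meters in front of the sensor."""
    r = wall_z / (math.cos(el) * math.cos(az))  # distance along the beam
    return 2.0 * r / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # Hypothetical 3 x 3 grid of beam directions, from -10 to +10 degrees.
    angles = [math.radians(d) for d in (-10, 0, 10)]
    grid = [(az, el) for el in angles for az in angles]
    for point in scan(grid, flat_wall_round_trip):
        print(tuple(round(c, 3) for c in point))
```

Every point lands at z = 2.0, on the simulated wall. A real scanner applies the same basic idea with many more pulses to build the depth map that AR apps rely on.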

This brings two main benefits – an improved range of up to five meters and better object 'occlusion', which is the appearance of virtual objects disappearing behind real ones like trees.
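At its heart, occlusion is a per-pixel depth comparison: if the real surface at a pixel is closer to the camera than the virtual object, the virtual object is hidden there. The Python sketch below shows that general depth-test technique from 3D graphics, not ARKit's actual implementation. It assumes the LiDAR depth map has already been lined up pixel-for-pixel with the camera image, and that the app knows how far away its own virtual object is at each pixel; the tiny scene is invented for illustration.

```python
from typing import List, Optional

# Per-pixel depths in meters, row by row (assumed aligned to the camera image).
RealDepth = List[List[float]]
# None means the virtual object doesn't cover that pixel.
VirtualDepth = List[List[Optional[float]]]


def occlusion_mask(real_depth: RealDepth,
                   virtual_depth: VirtualDepth) -> List[List[bool]]:
    """Return True for each pixel where the virtual object should be drawn.

    A virtual pixel is visible only if it is closer to the camera than the
    real surface measured at that same pixel; otherwise the real object
    (a tree, a sofa) hides it.
    """
    return [
        [v is not None and v < r for r, v in zip(real_row, virt_row)]
        for real_row, virt_row in zip(real_depth, virtual_depth)
    ]


if __name__ == "__main__":
    # Hypothetical 2 x 3 scene: a tree 1.5 m away fills the left column,
    # a wall 4 m away fills the rest.
    real = [[1.5, 4.0, 4.0],
            [1.5, 4.0, 4.0]]
    # A virtual character 3 m away covers the left two columns.
    virtual = [[3.0, 3.0, None],
               [3.0, 3.0, None]]
    for row in occlusion_mask(real, virtual):
        print(row)  # [False, True, False]
```

The character is hidden behind the tree in the left column, visible against the wall in the middle column, and absent from the right column it doesn't cover. Coarser or noisier depth data makes those edges less precise, which is one reason depth resolution matters.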

Impressively, it's a speedy process too, but that speed is only really possible with the latest mobile processors.

As Apple stated at the iPad Pro 2020 launch, the LiDAR scanner's data is crunched together with data from cameras and a motion sensor, then "enhanced by computer vision algorithms on the A12Z Bionic for a more detailed understanding of the scene". In other words, there's a lot going on to make it appear seamless.

But while the iPhone 12's A14 Bionic processor offers good support for Apple's LiDAR scanner, there's plenty of room for improvement in the scanner itself too.

As a blog post from the developer of the Halide camera app points out, the iPad Pro's depth data currently lacks the resolution needed for some applications, such as detailed 3D scanning or even Portrait mode.

This means the iPad Pro's LiDAR scanner is designed more for room-scale applications like games or shifting around AR furniture in IKEA's Place app. It doesn't currently let you 3D scan objects with greater accuracy than other techniques like photogrammetry, which instead combines high-resolution RGB photos taken from different vantage points.

Wouldn't it be great if these LiDAR scanner meshes could be combined with the kind of resolution and textures captured by RGB cameras or Face ID? That's the ideal, but we're not quite there yet. We haven't had a full, in-depth look at the iPhone 12 Pro, but we're not sure it can do this either.

So what exactly might you be able to do with a LiDAR scanner on the iPhone 12?

What might a LiDAR scanner let you do on the iPhone 12?

Now that we know the iPad Pro's LiDAR scanner works best at room scale, what kind of things could it do on the iPhone 12 Pro? For the average person, the two main ones are AR gaming and AR shopping.

Apple has previewed a few LiDAR-specific applications that are coming "later this year" (conveniently, after the iPhone 12 launches), and one of the more interesting is the game Hot Lava.

A first-person adventure game for iOS and PC, Hot Lava will have a new 'AR mode' in late 2020 that draws on Apple's LiDAR sensor to bring its molten rivers into your living room.

So far, the demo isn't quite as impressive as we'd hoped – most of the objects that your character leaps around are in-game renders rather than your actual furniture, but there's still time for it to develop.

Naturally, any mention of AR gaming brings to mind Pokemon Go, the only real smash hit for augmented reality so far. Interestingly, the game's maker Niantic seems to be forging its own AR path, rather than relying on Apple's tech. It recently announced a new 'reality blending' feature for Pokemon Go – which lets characters realistically hide behind real-world objects like trees – and revealed the acquisition of a 3D spatial mapping company called 6D.ai.

This shows that next-gen AR gaming won't necessarily be tied to Apple's LiDAR-based tech or ARKit platform, but the iPhone 12 should at least give you a ringside seat for watching the AR battle play out.

But ultimately, the arrival of LiDAR on the iPhone 12 Pro is going to massively increase the number of apps that use this technology, and that could be a game-changer for the iPhone camera.

But what about non-gaming experiences for the LiDAR sensor? So far, the most polished seem to be based around interior design. For example, the IKEA Place app lets you move around virtual furniture in your living room, like you're in a real-life version of The Sims.

But while the iPhone 12 Pro's improved AR placement and occlusion (or ability to hide virtual objects behind real ones) are helpful, it's still not a scintillating new use for the LiDAR scanner.

Still, while the tech is currently more useful for CAD designers and healthcare professionals (if you have an iPad Pro, check out the impressive Complete Anatomy app), there's plenty of room for creativity and surprises over the next year.

As Halide's proof-of-concept app Esper shows, the LiDAR sensor could help app developers invent new creative forms that go way beyond traditional photography and video.

In the meantime, it's fair to say that the LiDAR scanner on the iPad Pro and iPhone 12 Pro will initially be there to wow developers rather than tech fans.

You'll get the chance to test-drive the future on LiDAR-equipped devices – but the real leap should come when these sensors and apps arrive on the Apple Glasses.

Mark is TechRadar's Senior news editor. Having worked in tech journalism for a ludicrous 17 years, Mark is now attempting to break the world record for the number of camera bags hoarded by one person. He was previously Cameras Editor at both TechRadar and Trusted Reviews, Acting editor on Stuff.tv, as well as Features editor and Reviews editor on Stuff magazine. As a freelancer, he's contributed to titles including The Sunday Times, FourFourTwo and Arena. And in a former life, he also won The Daily Telegraph's Young Sportswriter of the Year. But that was before he discovered the strange joys of getting up at 4am for a photo shoot in London's Square Mile.