Can computers tell how you're feeling?

New Microsoft tools make it easier to build apps that can understand us

Speaker recognition

Two audio services will be available later in the year. The new speaker recognition API will be able to work out who's talking – not just to tell people apart in an audio track, but to recognise them specifically, based on a speech model built from existing recordings. "People can enrol their voice – we let them say a phrase and build a speech model from that, then when you send audio from them we can say 'this is Ryan, or that is Mary'."

That's the equivalent of the face verification API for speech, Galgon explains. "That tells you with two images, what's the likelihood that this is the same face in both of them. Here we can say, given this audio file and this historical audio file, what's the likelihood it's the same person speaking."
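To make the idea of a "likelihood it's the same person" concrete, here is a minimal sketch in Python. It is not the Project Oxford API, whose calls aren't described here. It assumes each recording has already been reduced to a list of numbers describing the voice (the vectors below are made up), and it uses cosine similarity as a stand-in scoring method. The function names and the 0.9 threshold are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no voice information
    return dot / (norm_a * norm_b)


def same_speaker_score(enrolled, sample):
    """Rescale cosine similarity from [-1, 1] to a 0..1 score."""
    return (cosine_similarity(enrolled, sample) + 1) / 2


def verify(enrolled, sample, threshold=0.9):
    """Accept the sample only if its score reaches the (assumed) threshold."""
    return same_speaker_score(enrolled, sample) >= threshold


# Made-up voice features: an enrolled profile and two new recordings.
enrolled_profile = [0.2, 0.7, 0.1]
matching_sample = [0.2, 0.7, 0.1]
other_sample = [0.9, -0.1, 0.3]

print(verify(enrolled_profile, matching_sample))
print(verify(enrolled_profile, other_sample))
```

A real service would derive the features from the audio itself and tune the threshold to balance false accepts against false rejects, which is why a score like this suits lighter authentication rather than high-security checks.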

A voice is unique and apps could use it instead of a password in some situations, he suggests. "It's not as secure as chip and pin, but it's useful for apps that only need lighter authentication."

Background noise

The second, Custom Recognition Intelligent Service (CRIS for short), learns the acoustics of difficult environments, or the speaking style of people whose speech is currently harder to recognise, to make voice recognition more accurate.

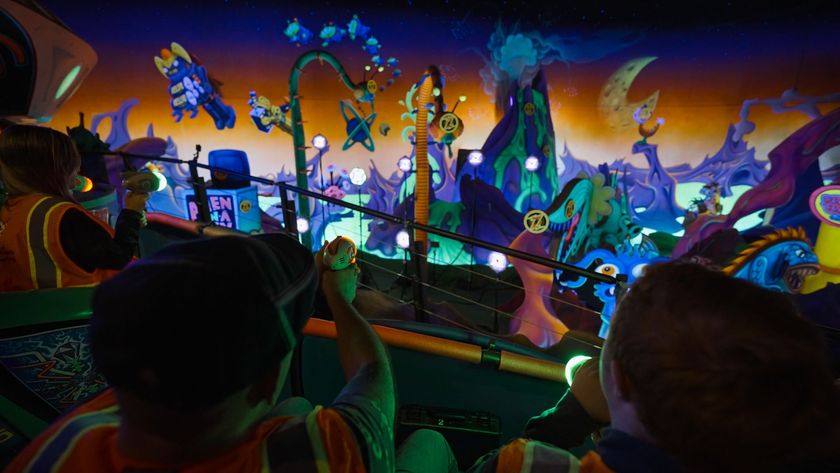

"Right now, the speech APIs don't do a great job with kids' voices or with elderly folks or people who speak English as a second language," he explains. "They've mainly been trained with people working in an office and in an acoustic model of somewhere like a conference room. If you're at a kiosk at an airport or a baseball stadium, or you've got a mascot at a sports event and you want the system to be able to hear users and talk back to them in some way – the acoustic environment is very challenging at a sports game. There's a lot of background noise, there might be echoing."

CRIS needs five or ten minutes of sample audio, which takes ten or twenty minutes to process, so you can't yet do it in real time, but the result can significantly improve the accuracy of the recognition.

The system can also build a model of how people speak from a couple of sample sentences, and you can add labelled phrases for unusual words – Galgon notes, "If you have player names or specific sports terms that a default recogniser isn't going to recognise."

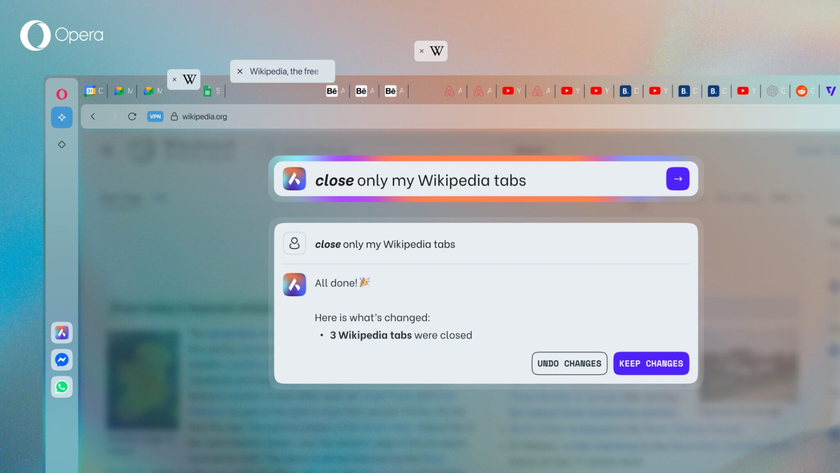

And crucially, it's not difficult to use. "That's been a complex task that's required a lot of expertise in the past. Pretty much anyone can do this."

Ease of use

Making these powerful AI features easy enough for developers to use with a couple of lines of code is what Galgon thinks is really different about Project Oxford. The service remains free while it's in preview, although some of the features are now included in the Cortana Analytics Suite, so businesses can use them to recognise customers using face verification or analyse sentiment in customer feedback on their website.

"We're going to keep expanding the portfolio and the set of APIs over time. But we've focused on making it as easy as possible for developers to use, regardless of what platform they use – you can use this for any OS, any website. People without any experience of AI could make software understand what someone was saying."

In time, he thinks we'll just expect software that has these kinds of smarts built in. "These are things that are human and natural to do. Our apps and our software should be able to hear and understand the world around them."

Mary started her career at Future Publishing, saw the AOL meltdown first-hand the first time around when she ran the AOL UK computing channel, and she's been a freelance tech writer for over a decade. She's used every version of Windows and Office released, and every smartphone too, but she's still looking for the perfect tablet. Yes, she really does have USB earrings.