How businesses can best extract value from data in 2015

Companies can gain a serious competitive advantage via data

There is more data available today than ever – that's a fact. This can all be put to work to help drive sales, save costs, create loyal customers or improve efficiency so that greater value can ultimately be built into a brand. This part of the story is of course nothing new. But what isn't explored so often is the wider business plan that needs to be put into place in order to fully leverage such growing streams of data.

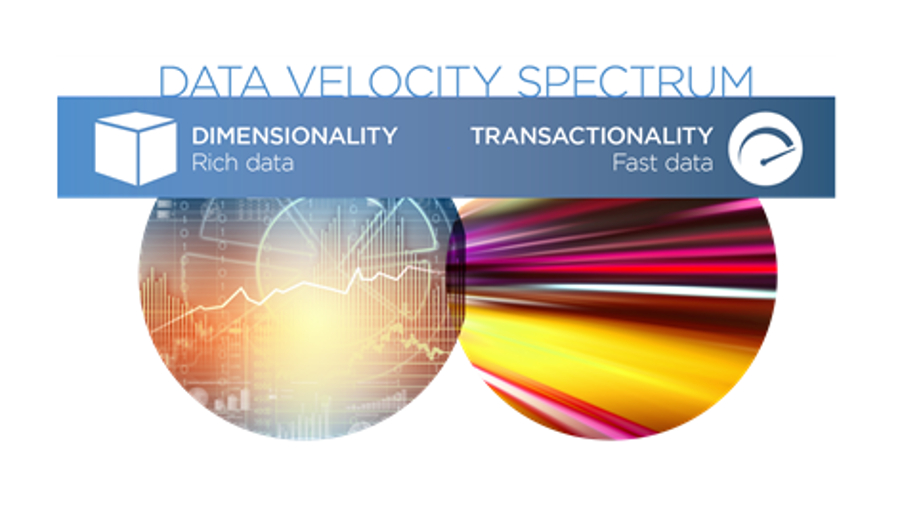

To really succeed, an organisation should understand how quickly it must put data to work. Which matters more to your business, for example: getting data quickly, or defining it richly for broad analytic use? Enter the New Data Velocity Spectrum, which recognises that some data needs to be put to work instantly (what is coming to be known as 'fast data'), while other data needs curation time, rich definition and dimension in order to unlock its value.

There can be no escaping the fact that those who put data to best use in 2015 stand to gain a serious competitive advantage. To help make things easier and to recognise the options, here are four resolutions that should be kept in mind when it comes to data this year.

1. Recognise how fast data really needs to be put to work

Understanding just how fast data needs to be put to work is an important first step. For any business, no matter its size, a distinction must be made between speed and richness of definition. Do you require data in real time, or is it better to have a more detailed analysis after a delay?

If the primary goal is access to real-time data, it is important that the information architecture reflects this need for speed. If, on the other hand, the primary goal is access to rich data that can be used flexibly across a variety of analytic uses, the primary value comes from the 'dimensionality' of the data. Recognising the problems to be solved and the business needs is crucial, as only then can the correct infrastructure be put into place to match them.

2. Embrace new technologies to access 'fast data'

Organisations must also understand the modern technologies available to them. To better address the need for 'fast data', streaming technologies now commonly supplement database tools. Technologies such as Apache Storm, Amazon Kinesis and TIBCO StreamBase provide constant, immediate access to, and processing of, data feeds from nearly any data source or type.

Today, streaming data feeds power both transactional and analytic uses of data, allowing rules to be applied to events as they arrive so that results are available in real time. This supports fraud detection, security monitoring, service route optimisation and trade clearing all around the world.
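To make the idea of a real-time rule concrete, here is a minimal Python sketch of one such rule: flag an account that produces too many events within a short time window. It is an illustration only and does not use the API of any product named above; the function name `flag_bursts`, the thresholds and the sample events are all hypothetical.

```python
from collections import deque


def flag_bursts(events, max_events=3, window_seconds=60):
    """Yield (timestamp, account) whenever an account has more than
    max_events events within the last window_seconds.

    `events` is an iterable of (timestamp_in_seconds, account) pairs,
    assumed to arrive in time order, as they would from a stream.
    """
    recent = {}  # account -> deque of timestamps still inside the window
    for ts, account in events:
        window = recent.setdefault(account, deque())
        window.append(ts)
        # Drop timestamps that have fallen out of the time window.
        while window and ts - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_events:
            yield ts, account


# Hypothetical sample feed: account "a" makes four events in 40 seconds.
events = [(0, "a"), (10, "a"), (20, "b"), (30, "a"), (40, "a"), (200, "a")]
alerts = list(flag_bursts(events))
print(alerts)
```

Because the rule is written as a generator, each event is evaluated as it arrives rather than after the whole feed has been collected, which is the property that separates 'fast data' processing from batch analysis.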

3. Appreciate that data comes in a wide variety of forms

Because variety in data is now the norm, Hadoop is increasingly becoming the modern data warehouse, used to handle the many new, high-volume, multi-structured data types. Rather than speed, this is all about the 'dimensionality' of data. It is encouraging to note that solving for rich definition and dimensions in data has never been easier or less expensive.

As another example, the combination of a massively parallel analytic database (Vertica, Netezza, Greenplum) and a modern, in-memory business analytics platform (TIBCO Jaspersoft, TIBCO Spotfire) now often replaces (or surpasses) most of the functionality of traditional OLAP technologies at a fraction of the overall time and cost.

4. Put sensors and software into action

Every day, we see more powerful examples of the Internet of Things being put into action. Within TIBCO Analytics, we prefer to describe it as "sensors + software" because these terms better symbolise the grittier, more real-world value that can be delivered by measuring, monitoring and better managing vast amounts of sensor-generated data.

This is increasingly important now that sensor technology has become remarkably low cost (an RFID tag, for instance, can be purchased for under £1 today). Sensor-based data is also well suited to correlation analysis, rather than a strict search for causation, which increases the potential for finding value in this machine-generated data. It appears that a quiet revolution is already underway, and sensors and software are already changing the world around us.
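As a rough illustration of what correlation analysis on sensor data involves, the Python sketch below computes the Pearson correlation coefficient between two series of readings. The sensor names and values are invented for the example, and a strong correlation here would say nothing about which reading, if either, causes the other.

```python
from math import sqrt


def pearson(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Co-variation of the two series around their means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical readings from two sensors on the same machine.
temperature = [20.0, 22.0, 24.0, 26.0, 28.0]
vibration = [1.0, 1.5, 2.0, 2.5, 3.0]

r = pearson(temperature, vibration)
print(r)
```

A value close to 1 or -1 suggests the two readings move together and may be worth monitoring as a pair; a value near 0 suggests no linear relationship.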

Over the course of this year, matching the right information architecture with the best available technology for the specific business need will be an increasing challenge. The good news is that special-purpose technologies are fast arriving to address the growing needs across the New Data Velocity Spectrum, something that many stand to benefit from if the correct infrastructure is put in place.

- Brian Gentile is senior vice president and general manager at TIBCO Analytics