How Microsoft beat Google at understanding images with machine learning

And how Redmond beat everyone else, for that matter…

Elon Musk is only the latest investor in artificial intelligence, helping to fund a big-name roster of researchers who promise to change the field. Meanwhile, Microsoft Research is actually doing it, by combining the popular deep networks that everyone from Google to Facebook is also using for machine learning with other mathematical techniques, and beating them all in the latest round of the annual ImageNet image recognition competition.

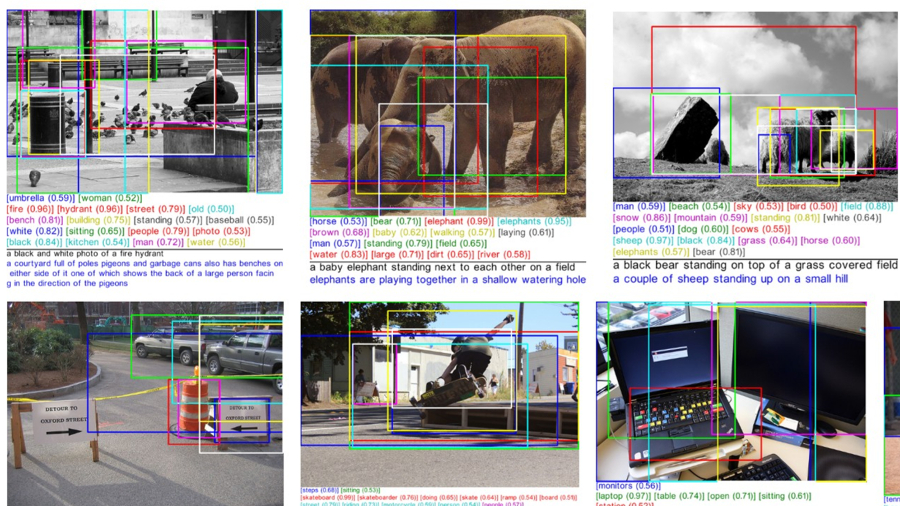

ImageNet tests how well computers can recognise which of 1,000 different categories the 100,000 test images belong in, and where in the photo the object being recognised is.

Microsoft's new neural network is as good as the other networks at spotting what's in the photo (which is often better than an untrained human at telling the difference between two very similar breeds of dog, which is one of the tests), but it's twice as good at working out where in the photo it needs to look.

Deeper still

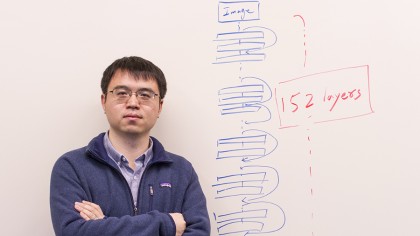

Everyone is trying to make image recognition more accurate by using deeper networks – last year, the team was working with neural networks that had 20 layers but this year they used a network with 152 layers.

"We call this extremely deep neural network 'ultra-deep learning'," Microsoft researcher Jian Sun told techradar pro. All 152 layers of the network are in a single computer, with an eight-GPU Nvidia graphics card. "The network has 152 layers because of the limitations of current GPU resources," Sun told us, adding that "we're very optimistic about advances in GPUs so that we can have an even deeper network".

The problem is, it's hard to train very deep networks because the feedback (telling the system when it's right or wrong) gets lost as it goes through the layers.

"The way these things learn is you feed data into the lowest layers of the network, the signals propagate to the top layer and then you provide feedback as to whether the learning was good or not," Peter Lee, head of Microsoft Research explained to us.

Are you a pro? Subscribe to our newsletter

Sign up to the TechRadar Pro newsletter to get all the top news, opinion, features and guidance your business needs to succeed!

"It's very much like rote learning in school. You try to solve a problem and you're told by the teacher if your answer was good or bad and if it was good, there's reinforcement on that answer. The reinforcement signal is sent back down through the layers. The problems has been, those signals would get exceedingly weak after just a few layers, so you don't get any correction into the lower layers. It's been a huge limiting factor."

Ideally, says Lee, instead of just going up and down the layers one at a time, you'd have connections between all of them to propagate the training signal back into the deeper layers of the network. "Imagine, instead of having a 152-layer cake, every one of these neural networks could tightly interconnect with all the others, so the back-propagation signal could flow to every layer."

That's not feasible, unfortunately – as Lee explains: "We don't know how to organise that kind of interconnected network in a small number of GPUs."

Skipping several layers

Instead, the idea the team came up with was "to organise the layers so that instead of flowing through every layer, the signals can skip several layers to get to the lower layers in the network – so if you're at layer 150, the back-propagation can skip directly to layer 147."

By skipping two or three layers of data a time, "we managed to have more signals connecting to more layers, but still fit into a small number of GPUs," Lee told us.

It took two weeks to train the 152-layer network. That might seem slow, but "philosophically, teaching a system to be able to see at human-quality levels in a few weeks still feels pretty fast," Lee enthuses.

He's also excited by how generic the lower layers of the network turned out to be – after they'd been trained to recognise what was in the image, the lower layers of the network could already find where in the photo the object was. The next step is to see if they can also work for other computer vision tasks like face recognition without retraining, which would make the system even more powerful.

Mary (Twitter, Google+, website) started her career at Future Publishing, saw the AOL meltdown first hand the first time around when she ran the AOL UK computing channel, and she's been a freelance tech writer for over a decade. She's used every version of Windows and Office released, and every smartphone too, but she's still looking for the perfect tablet. Yes, she really does have USB earrings.