Robots and humans see the world differently – but we don't know why

Yet still arrive at the same answers

A few years back, artificial intelligence reached the point at which it could recognise objects in images and answer questions about them. It's the technology that powers Google Photos and other similar products.

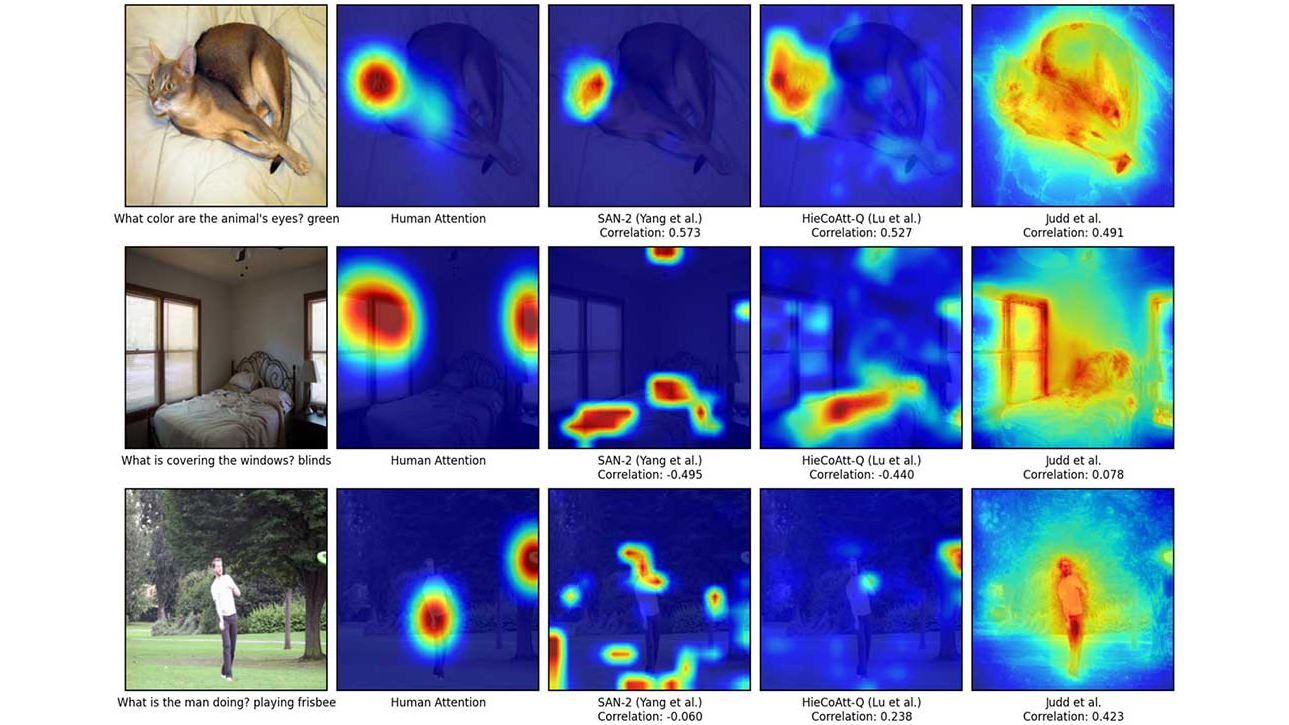

But it turns out that when an AI looks at a picture, it sees totally different things to humans. In experiments conducted at Facebook and Virginia Tech, researchers found significant differences between what humans and computers looked at when asked a simple question about an image.

Lawrence Zitnick and a team of computer vision experts first asked human workers on Amazon's Mechanical Turk platform to answer basic questions about a photo.

The photo began blurred, but the worker could click around to sharpen it in different areas. Those clicks were taken to indicate where the humans were paying attention.

Then the same question was asked of two different neural networks which had been trained to interpret images. They also chose different points in the picture to sharpen to get more detail, and that data was again mapped to figure out which parts of the image they were interested in.

No Overlap At All

When the clicks from two humans were compared, they scored an average of 0.63 on a scale where 1 indicates total overlap in clicks and -1 indicates no overlap at all. But when clicks from a human were compared with clicks from a neural network, the overlap score was just 0.26.
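To make the idea of an overlap score between -1 and 1 concrete, here is a minimal sketch. The article does not say exactly how the researchers calculated their score, so this assumes one common choice, a Spearman rank correlation between two attention maps. The function names and the tiny 2x2 example maps are hypothetical and are not taken from the study.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant map")
    return cov / (spread_x * spread_y)


def attention_overlap(map_a, map_b):
    """Assumed metric: Spearman rank correlation of two attention maps.

    Each map is a list of rows holding per-region attention weights.
    """
    flat_a = [v for row in map_a for v in row]
    flat_b = [v for row in map_b for v in row]
    if len(flat_a) != len(flat_b):
        raise ValueError("attention maps must have the same shape")
    return pearson(average_ranks(flat_a), average_ranks(flat_b))


# Hypothetical attention maps for one image split into four regions.
human_map = [[0.9, 0.1], [0.3, 0.0]]
machine_map = [[0.0, 0.8], [0.5, 1.0]]

print(attention_overlap(human_map, human_map))
print(attention_overlap(human_map, machine_map))
```

Under this assumed metric, a score near 1 means both viewers ranked the regions of the image in the same order of importance, while a score near -1 means the rankings were reversed.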

Yet the neural networks still turned out to be pretty good at getting the answers to the questions right. Which raises the question of how they knew.

"Machines do not seem to be looking at the same regions as humans, which suggests that we do not understand what they are basing their decisions on," Dhruv Batra from Virginia Tech told New Scientist.

It's hoped that the results of the experiment could improve image recognition techniques in the future. "Can we make them more human-like, and will that translate to higher accuracy?" added Batra.

The details of the experiment were published in the journal Computer Vision and Pattern Recognition.

- Duncan Geere is TechRadar's science writer. Every day he finds the most interesting science news and explains why you should care. You can find him on Twitter under the handle @duncangeere.