Google's new photo technology is creepy as hell – and seriously problematic

Opinion: Not everything needs machine learning

Google has a massive machine learning operation, so it's no surprise that the company is applying it to every bit of data we give it – but there is something about messing with our photos that comes across as transgressive, no matter how bright a smile Google tries to put on it.

There were a lot of things about today's Google I/O 2021 keynote that struck a weird note, like the company celebrating its "AI Principles" after its treatment of its former lead AI ethicist, Dr. Timnit Gebru, and after directing its scientists to "be more positive" about AI.

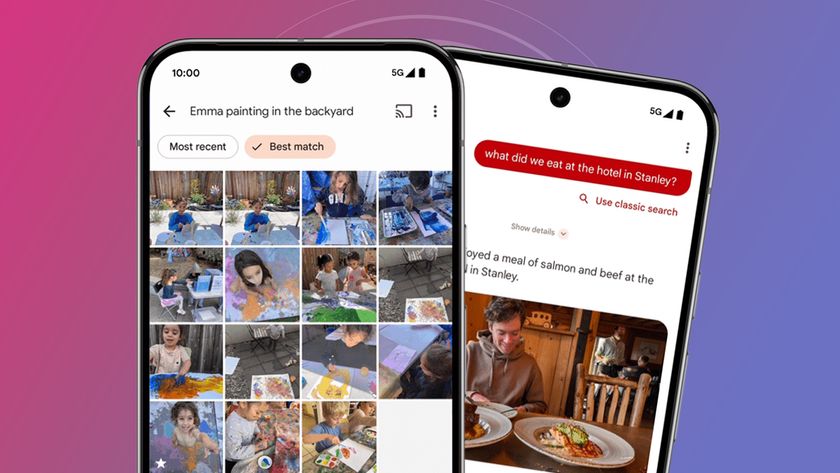

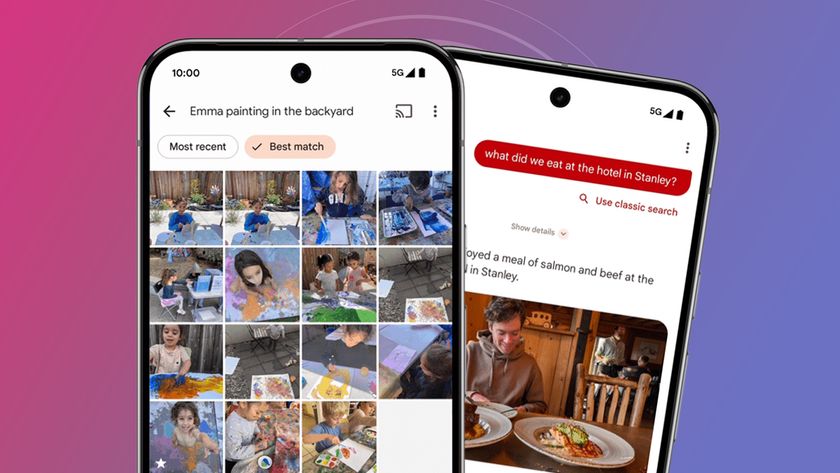

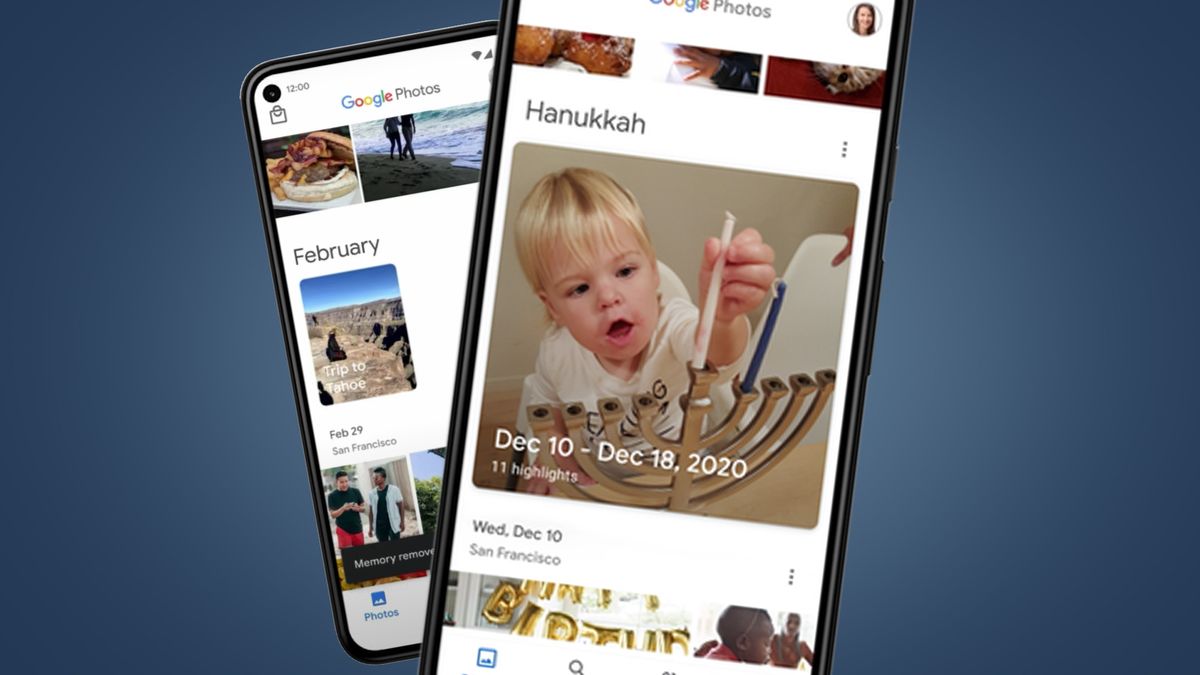

But it was the part of the keynote given by Shimrit Ben-Yair, head of Google Photos, that felt particularly unnerving. During her presentation, she showcased how Google's machine learning technology can analyze all of your photos, identify less obvious similarities across your entire photo collection, and group your photos accordingly.

In other words, Google is running every photo you give it through some very specific machine learning algorithms and identifying very specific details about your life, like the fact that you like to travel the world with a particular orange backpack.

Fortunately, Google at least acknowledges that this could be problematic if, say, you are transgender and Google's algorithm decides to create a collection that includes photos of you that don't align with your gender identity. Google understands that this could be painful for you, so you totally have the option of removing the offending photos from collections going forward. Or, you can tell it to remove any photos taken on a specific date that might be painful, like the day a loved one died.

All of which is better than not having that option at all, but the onus is still on you, the user, which is always, always the case. What's a little bit of pain for some to endure when Google has new features to roll out that no one was even asking for?

Then we got to the part of the presentation where Google takes two or three photos shot in quick succession, like when you take several in a row to capture a group photo where no one is blinking, and applies machine learning to them to generate a short "Cinematic Photo."

This feature, first introduced in December 2020, will use machine learning to insert entirely fabricated frames in between those photos to essentially generate a GIF, recreating a live event in a way that is a facsimile of the event as it happened. Heavy emphasis on facsimile.

Google presents this as helping you reminisce over old photos, but that's not what this is – it's the beginning of the end of reminiscence as we know it. Why rely on your memory when Google can just generate one for you? Never mind the fact that it is creating a record of something that didn't actually happen and effectively presenting it to you as if it did.

Sure, your eyes may have blinked "something like that" between those two photos and it's not like Google is making you do lines of coke at a party when you did not, in fact, do such a thing. But when dealing with what is ethical and what is not, there isn't any room for "kinda". These are the kinds of choices that lead down paths we don't want to go down, and every step down a path makes it harder for us to turn back.

If there's one thing we really, really should have learned in the past decade, it's not to put such blind trust in machine learning algorithms that have the power to warp our perception of reality. QAnon is as much a product of machine learning as Netflix is, but now we're going to put our photo albums on the altar of AI and call whatever comes from it "Memories." All while expending an enormous amount of actual energy running all these algorithms in data centers as climate change marches ever onward.

With every new advance in Google's machine learning platform, it becomes more and more apparent that it really should listen to what ethicists like Dr. Gebru are trying to tell it – and it's all the more evident that it has no interest in doing so.

John (He/Him) is the Components Editor here at TechRadar and he is also a programmer, gamer, activist, and Brooklyn College alum currently living in Brooklyn, NY.

Named by the CTA as a CES 2020 Media Trailblazer for his science and technology reporting, John specializes in all areas of computer science, including industry news, hardware reviews, PC gaming, as well as general science writing and the social impact of the tech industry.

You can find him online on Bluesky @johnloeffler.bsky.social