ChatGPT is an existential crisis for Microsoft and Google, but not in the way they think

When platforms become publishers, all bets are legally off

Microsoft just unveiled its newest AI integration into its broader ecosystem of products, from Bing search to Office and even possibly Windows itself: a ChatGPT-like AI system that interacts with users through a large language model, which the company says will greatly enhance search results and the general experience of using its products.

That may be true in 99.9999% of cases, but it's always the edge cases that get you in trouble, and failures of a system that is effectively a black box are inevitable. That Google and Microsoft are banking so hard on this idea is astounding since they have a unique privilege on the internet that the rest of us don't.

With the integration of these new AI tools, however, Google, Microsoft, and others are pretty much throwing that privilege, the legal protection they enjoy as platforms, away, and as such, they could, and should, be held legally liable for every bit of misinformation and libel that their new chatbots produce.

Laying the groundwork for endless litigation

Ask any YouTube creator or print or online journalist about copyright and you're bound to get an earful about the difference between fair use, quoted materials, "background", and all the other rules governing what they can publish and how they can publish it.

Livestreaming Hi-Fi Rush and forgot to turn on the Livestream audio option? Enjoy the copyright strike on your account. Forgot to attribute an entire passage of text in your article to the original source? Have fun navigating a writing career after the ensuing plagiarism controversy (and be ready to forgo any profits you've made if the case is egregious enough).

What happens when a black-box AI "inadvertently" plagiarizes your material and Google or Microsoft publishes it for profit? Or, what if it scrapes bad advice from a site or rewords the material it has scraped but omits critical, legally required disclaimers? What happens if it does it thousands or millions of times? The whole point of these AI chatbots is that they are autonomous, so there's little to no chance to intervene before the chatbot says something legally problematic.

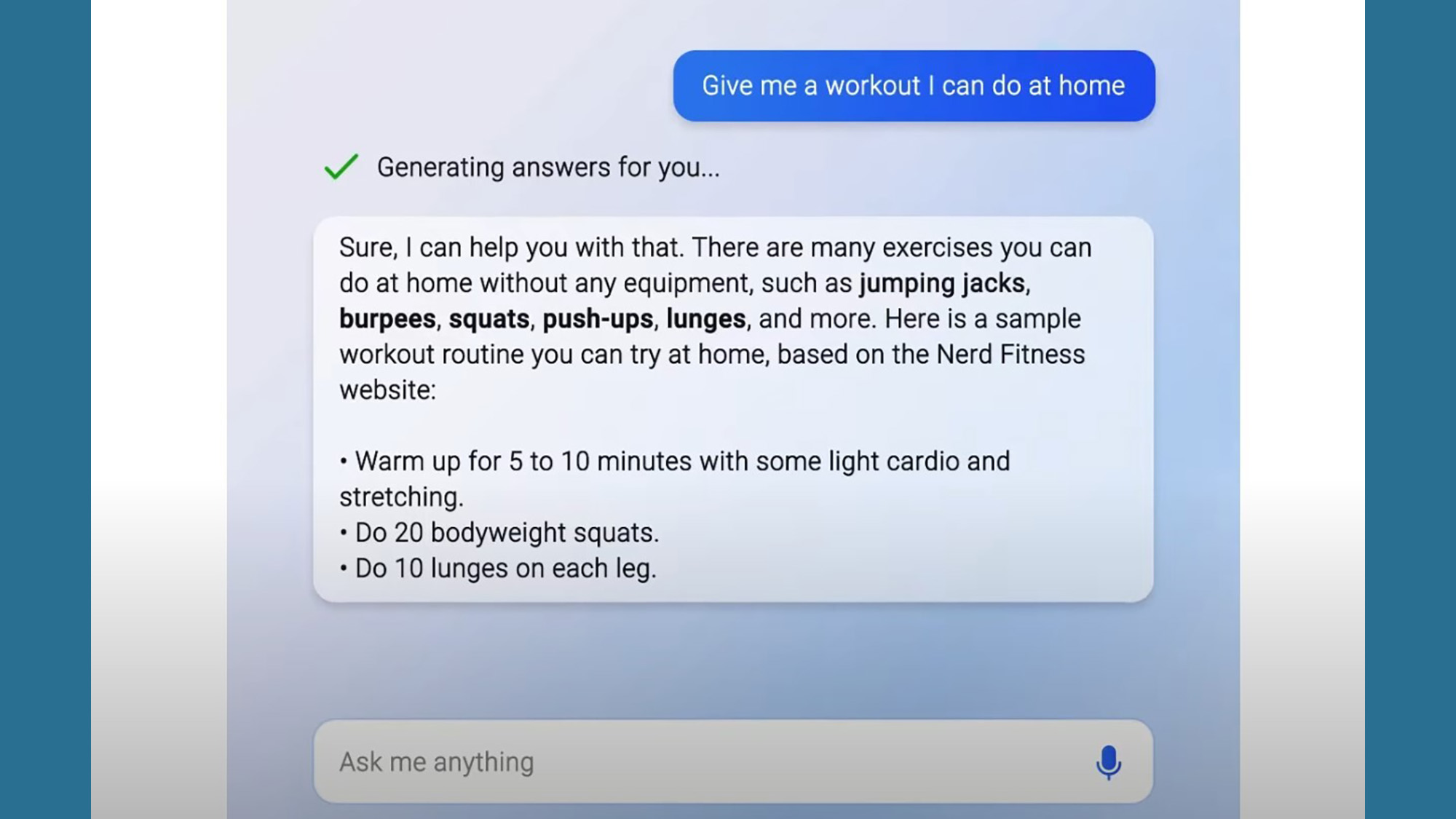

Even in Microsoft's own press materials, it included a screenshot of the new Bing Chat feature where the prompt "Give me a workout I can do at home" gave an answer without any medical disclaimer or context about the serious risk of injury when performing some of the exercises the answer suggests.

This is the kind of thing that personal injury lawyers make a living off of, and these chatbots being deployed by the wealthiest corporations in the world are going to be an absolutely irresistible target.

To its credit, Microsoft Bing's AI-powered chatbot will at least provide citations for where it got the material it used to generate its answers, but as a journalist, I can tell you that "Well, this is where I got the legally objectionable material from" won't get you very far in court.

When platforms become publishers

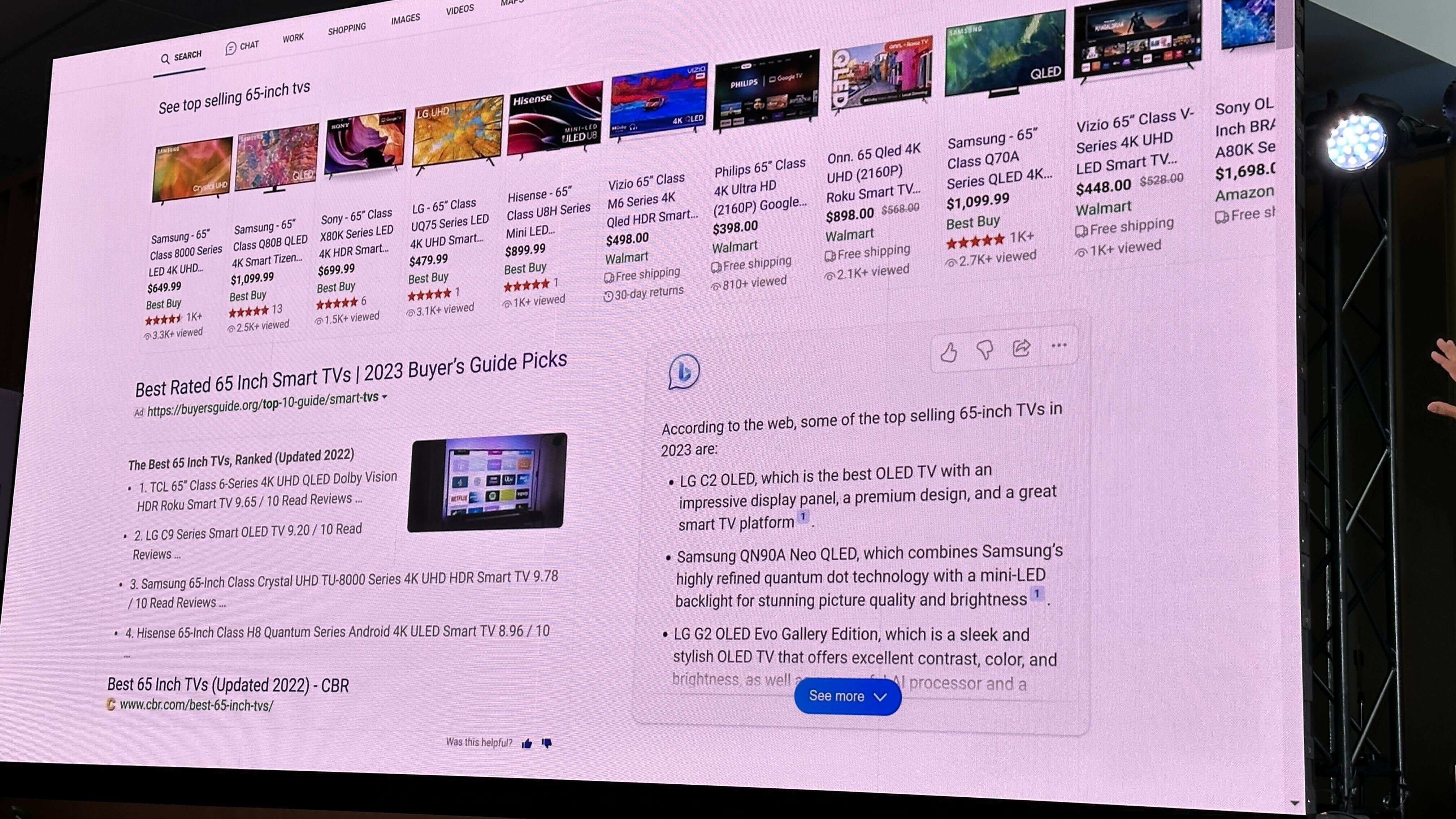

What the new Bing produces will only ever be as good as the data it uses as input, and by rewriting that data in Chat rather than simply passing along what someone else has written (as Google and Microsoft do now with their search results), these companies ultimately become the publishers of that content, even if they cite someone else's work as a source.

You can introduce the most advanced filters possible to minimize your risk, but at some point, one of these chatbots is going to respond to a very specific prompt and pull from some very dubious source and it's going to give a result that is going to break a law or even cause harm. Who is responsible then?

If Microsoft Bing recommends a substitute for eggs in a recipe (as Microsoft itself says the new Bing will be able to do in its presentation), and recommends an ingredient to somebody who has an allergy, who is to blame if that person gets sick or, God forbid, actually dies as a result? You can bet there will be an army of lawyers who are going to sue to settle that legal question very quickly, and Microsoft will have a much harder time hiding and claiming a "platform" exemption when its own chatbot produced the offending work.

Worse still, no one at Microsoft will be able to predict when something like this will happen and what the offending material will be, so there's no way to prevent it. That's because, fundamentally, nobody knows how these generative AI models actually come to the conclusions they ultimately reach, since neural networks are, again, largely black boxes. You cannot predict every problem; you can only identify it after the fact, which won't be a great legal defense for Big Tech firms that implement these models in a public-facing way.

They are ultimately integrating an unknowable liability into their products and taking responsibility for what it produces, just as TechRadar (and parent company Future PLC) would be liable if I wrote and it published something that resulted in harm or broke some country's law.

There are critical safeguards in place to ensure that doesn't happen when you're a publisher (like editors and required legal training for writers on this sort of thing), but Microsoft and Google seem to be prepared to just let their AIs loose on the internet without any intercessional human supervision at the point of the user interface. Good luck with that.

Microsoft of all companies should know how this is likely to end up, since its experimental Twitter chatbot Tay was hijacked in less than a day by internet users who turned it into a foul-mouthed bigot for fun. Sure, the state of AI has come a long way in the seven years since Tay denied the Holocaust, but honestly, it hasn't changed fundamentally. These models are just as biased and prone to manipulation as ever; they just look more sophisticated now, so their faults are better hidden.

How much of a legal shield that will be for Big Tech companies rolling out AI models online that are intrinsically capable of spreading legally damaging misinformation and libel remains to be seen, but March 2016 was also a very different era for tech than 2023, and something tells me that people nowadays are going to be far less forgiving no matter what your politics happen to be.

(Editor's update: this week, Alphabet livestreamed a demonstration of Google's Bard AI and showed off a response that was factually incorrect, in real time. Markets reacted by wiping $120 billion off Alphabet's market valuation in the hours that followed.)

A self-inflicted existential crisis

The legal perils of being a publisher are as numerous as the ways to libel someone or spread dangerous, unprotected speech, so it's impossible to predict how damaging AI integration will be.

All I know is that Google, Microsoft, and any other Big Tech platform that decides to jump on the AI writer bandwagon had better be ready to answer for whatever it inevitably spews out, and given past experience with these kinds of automated language models, once millions and even billions of people start engaging with them, all of those invisible faults in the model are going to materialize very quickly.

If there's one thing we've learned over the past few years, it's that inside every internet-trained AI model, there is a deeply bigoted, genocide-friendly troll looking for some crack to crawl out of. So, how long until it crawls out into our chat-engine results? How many of those are going to be legally actionable?

Who's to say, but in no world would I bet my trillion-dollar company's existence on something this ephemeral. Given Big Tech's absolute paranoia about missing out on the next Big Thing, though, companies jumping on this isn't the least bit surprising, even if it's Big Tech's most reckless move yet.

John (He/Him) is the Components Editor here at TechRadar and he is also a programmer, gamer, activist, and Brooklyn College alum currently living in Brooklyn, NY.

Named by the CTA as a CES 2020 Media Trailblazer for his science and technology reporting, John specializes in all areas of computer science, including industry news, hardware reviews, PC gaming, as well as general science writing and the social impact of the tech industry.

You can find him online on Bluesky @johnloeffler.bsky.social