Nvidia is done with crypto - AI is the future, and I'm pumped

After its brief dalliance with crypto, Nvidia is going all in on AI

Nvidia recently released a research paper exploring the ways in which artificial intelligence programs could be used to help in the design and development of processors - and that has exciting implications for the future of PC hardware.

I’m going to get a bit technical here before delving into the ramifications of this research for ordinary PC gamers, so skip down to the next section if you want to jump past me waxing lyrical about the science behind this!

The paper specifically investigates how machine learning techniques could be used to find better ways to lay out billions of nanometer-scale transistors on a processor die. It seeks to improve on previous research conducted by Google into automating the chip design process - research that, according to Reuters, caused a serious internal dispute at Google over its validity and ethics.

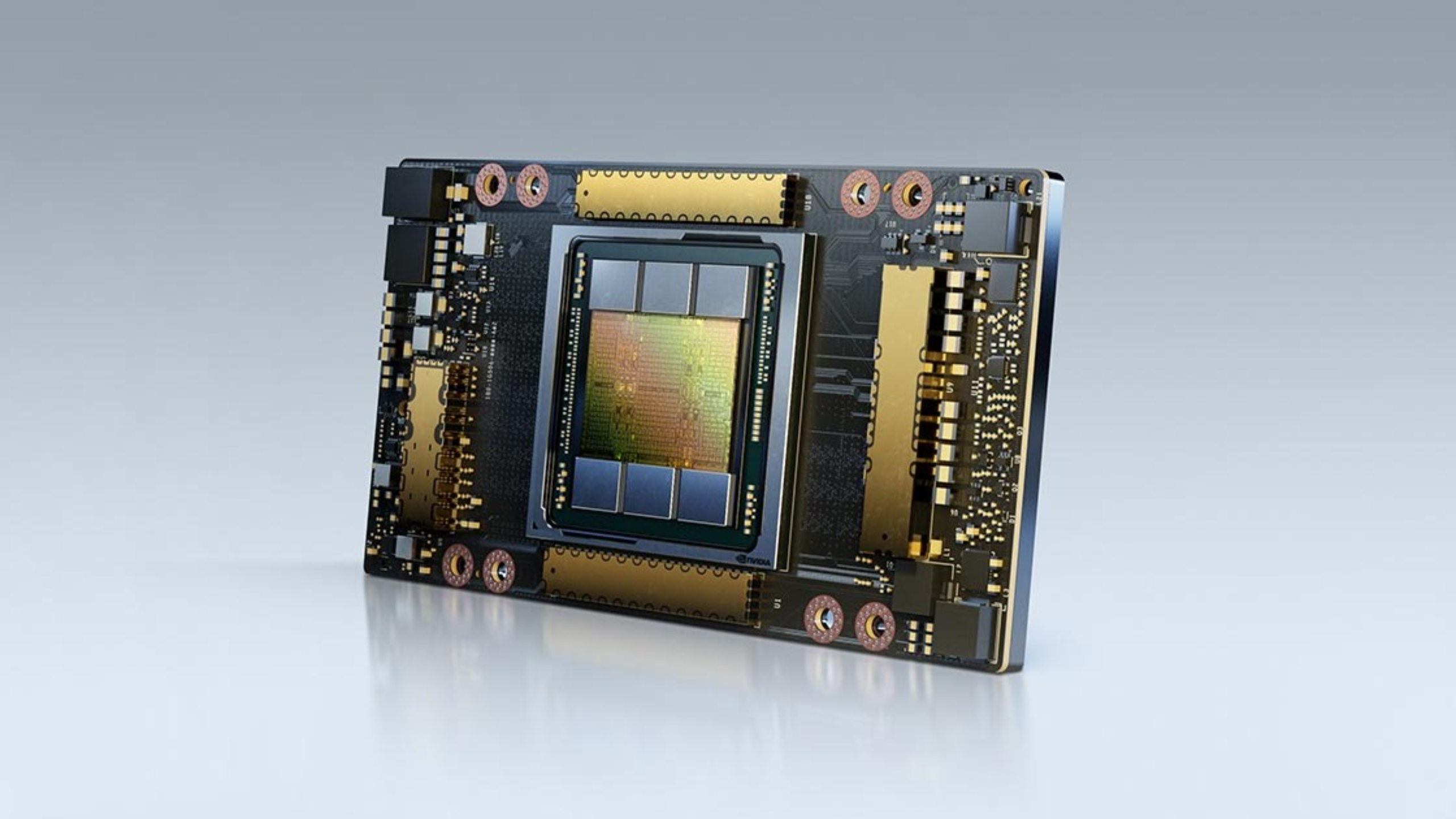

Now, that might sound fairly boring to any non-scientific types reading this, but don’t worry: I’ll break this down into simple terms. Processors (whether they’re CPUs or the GPUs developed by Nvidia) have been using more and more transistors as the technology and manufacturing techniques have advanced. The all-powerful RTX 4090, Nvidia’s current flagship graphics card, has a whopping 76.3 billion transistors.

That’s a ludicrous amount, so bask in it for a moment. I’ve been building computers - both casually and professionally - for more than a decade, and it still absolutely boggles my mind how a slab of silicon measuring just a few square centimeters in size could possibly contain so many transistors. That’s a graphics chip, too; Intel’s Core i9-13900K CPU uses around 25.9 billion. That’s not a figure anyone can meaningfully visualize!

Every one of those nanoscopic transistors functions as a tiny binary on-off switch, so the more you have, the more complex operations the processor can handle. How those transistors are arrayed on the chip has a huge bearing on how well the chip performs - as well as how much it costs to make.
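To make that last point a little more concrete, here is a toy sketch of why layout matters. It scores two arrangements of the same four blocks by total "half-perimeter wirelength", a rough measure of how much wiring is needed to connect things up. Everything in it is an assumption for illustration: the block names, the connections, and the coordinates are invented, and this is not a description of the method in Nvidia's paper.

```python
# Toy illustration only: block names, nets, and coordinates are invented,
# and this is not the technique used in Nvidia's research.
from typing import Dict, List, Tuple

Placement = Dict[str, Tuple[int, int]]


def hpwl(placement: Placement, nets: List[List[str]]) -> int:
    """Total half-perimeter wirelength.

    For each net (a group of blocks that must be wired together), add the
    width plus the height of the smallest box enclosing all of its blocks.
    """
    total = 0
    for net in nets:
        xs = [placement[block][0] for block in net]
        ys = [placement[block][1] for block in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


# Hypothetical connections between four made-up blocks.
nets = [["alu", "regs"], ["regs", "cache"], ["alu", "cache", "io"]]

# The same blocks, spread across the die versus packed close together.
scattered = {"alu": (0, 0), "regs": (9, 9), "cache": (0, 9), "io": (9, 0)}
clustered = {"alu": (0, 0), "regs": (1, 0), "cache": (1, 1), "io": (0, 1)}

print("scattered:", hpwl(scattered, nets))
print("clustered:", hpwl(clustered, nets))
```

The clustered arrangement needs far less wiring than the scattered one, even though the blocks and connections are identical. A real chip has billions of elements and many competing constraints, which is why searching for good layouts is such a hard problem.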

The future of processor design

Here’s the juicy bit: Nvidia’s research demonstrates that AI has real, genuine potential to significantly improve how the company designs its GPUs. Sure, the RTX 4000 series delivers stunning graphical performance, but the cards are also seriously expensive - to the point where I’ve speculated that integrated graphics could take over completely, leaving traditional graphics cards in the dust.

But imagine this: a specialized AI that can design a GPU chip faster than any team of humans, and the resulting chip can be manufactured at a fraction of the cost while providing better performance than anything to come before it. Boom, that’s the best graphics card ever made.

This could be particularly vital to Nvidia, which has notably been the only major chip developer to completely abandon Moore’s Law and claim that advances in processor manufacturing are set to slow over the coming years - something that AMD and Intel have vehemently denied. It’s the key reason Nvidia has given for the sky-high pricing on its latest generation of GPUs, so this could see us getting cheaper graphics cards from Team Green in the future.

Nvidia is taking AI very seriously right now, too, with the company’s Chief Technology Officer Michael Kagan recently slamming cryptocurrency in an interview with The Guardian and pushing AI research instead. “It collapsed, because it doesn’t bring anything useful for society. AI does,” he claimed.

The real benefits of AI

This is extremely funny to me, since Nvidia quite literally sold millions of graphics cards to miners during the 2021-2022 crypto craze and still lists a dedicated mining GPU on its own website, but sure, Mr Kagan. Crypto is useless garbage, and AI does indeed have genuine potential benefits for our society.

It looks like Nvidia is united in this view, as CEO Jensen Huang recently described the popular generative chatbot ChatGPT as an ‘iPhone moment’ for AI research. However, I have to say that I disagree with Jensen on this one: ChatGPT isn’t the iPhone of AI, but AI-designed processors could be.

Chatbots are a waste of AI! There, I said it. I’ve been dubious about the value AI chatbots bring to society, and my own personal experiments in trying to break ChatGPT only deepened my doubts. Why do we need to interact with AI? It isn’t truly intelligent; why can’t we simply talk to each other? Asking a bot to tell you a story when there are literally millions of books already out there for you to read is a fool’s errand, if you ask me.

But using machine-learning tools to design better GPUs? Now we’re talking. I’ll admit that I’ve been critical of Nvidia’s AI obsession in the past, but this feels like a more tangible, legitimate use case than anything Team Green has suggested thus far. I’m genuinely excited at the prospect. Picture this:

Nvidia’s next big presentation, pre-scripted by me!

You’re watching a livestream, where Nvidia is expected to reveal its next generation of graphics cards. Everyone is holding their breath; I’m sitting in the audience, near the front, camera in hand, waiting for the presentation to start. I’m already worried about how much this new GPU will cost.

Then BOOM, on come the spotlights. It’s blinding at first, then: a black and green backdrop behind a wide stage, and a figure. It’s Jensen! Here he comes, in the flesh this time - not that cursed Xbox 360-era avatar we saw back in 2022. He’s wearing his signature glasses and leather jacket.

Jensen approaches the mic. He taps it once. The feedback hurts my ears, but it’s edited out of the livestream. You watch as he begins to speak: “Hello,” he says. “I’m not really here.”

The Nvidia CEO dissolves; gone, reduced to atoms. He was a hologram, AI-generated in appearance and voice, using the power of his own next-gen GPUs. The true Jensen Huang takes the stage. What’s that in his hand, you wonder? I can see it more clearly: it’s a graphics card. He holds it aloft. “Hello again. It’s really me this time. You just witnessed the power of our new flagship GPU, designed by AI from the ground up - the first of its kind in the world. Ladies and gentlemen, I’m proud to present: the AITX 5090. Launching today, for just $799.”

The crowd goes wild! Jensen is carried out of the studio by a hollering swarm of tech journalists. You close the livestream, and go directly to the Nvidia website. The card is already sold out, of course, but don’t worry - TechRadar has a liveblog tracking stock at major retailers. You’ll get your AI-made GPU.

Give me a call, Nvidia - I will gladly write you a full script. I believe in you, and I believe in your AI.

Christian is TechRadar’s UK-based Computing Editor. He came to us from Maximum PC magazine, where he fell in love with computer hardware and building PCs. He was a regular fixture amongst our freelance review team before making the jump to TechRadar, and can usually be found drooling over the latest high-end graphics card or gaming laptop before looking at his bank account balance and crying.

Christian is a keen campaigner for LGBTQ+ rights and the owner of a charming rescue dog named Lucy, having adopted her after he beat cancer in 2021. She keeps him fit and healthy through a combination of face-licking and long walks, and only occasionally barks at him to demand treats when he’s trying to work from home.