Google Lens just got a time-saving upgrade on your iPhone – here’s how it works

New features coming into focus

- Google is adding gesture searching to its Google Lens feature

- The update is coming to the Chrome and Google apps on iOS

- The firm is also working on more AI capabilities for Lens

If you use the Chrome or Google apps on your iPhone, there’s now a new way to quickly find information about whatever is on your screen. If it works well, it could save you time and make your searches a little easier.

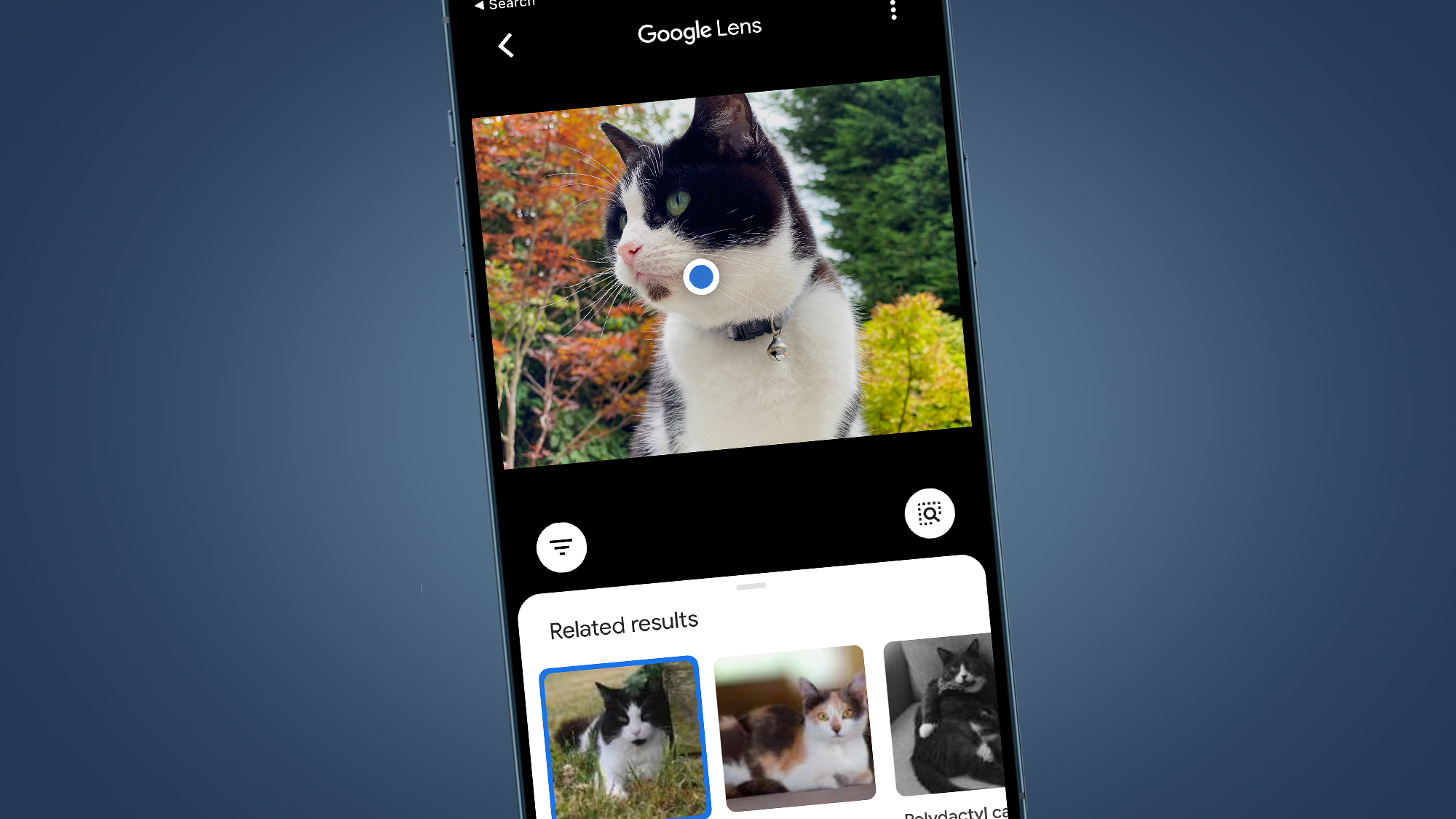

The update concerns Google Lens, which lets you search using images rather than words. Google says you can now use a gesture to select something on-screen and then search for it: you can draw around an object, for example, or tap it to select it. According to Google, it works whether you’re reading an article, shopping for something new, or watching a video.

The best iPhones have had a similar feature for a while, but it’s always been an unofficial workaround that required using the Action button and the Shortcuts app. Now, it’s a built-in feature in some of the most popular iOS apps available.

Both the Chrome and Google apps on iOS already have Google Lens built in, but the previous implementation was clunkier than today’s update. Before, you had to save an image or take a screenshot and then upload it to Google Lens. That could mean juggling multiple apps and was much more of a hassle. Now, a quick gesture is all it takes.

How to use the new Google Lens on iPhone

When you’re using the Chrome or Google apps, tap the three-dot menu button, then select Search Screen with Google Lens or Search this Screen, respectively. This will put a colored overlay on top of the web page you’re currently viewing.

You’ll see a box at the bottom of your screen reading “Circle or tap anywhere to search.” You can now use a gesture to select an item on-screen, and Google Lens will automatically search for the selected object.

The new gesture feature will roll out globally this week in the Chrome and Google apps on iOS. Google also confirmed it will add a new Lens icon to the app’s address bar in the future, giving you another way to use gestures in Google Lens.


Google added that it is also using artificial intelligence (AI) to give Lens new abilities. This will let it handle more novel or unique subjects, which means Google’s AI Overviews will appear more often in your results.

This feature will also roll out this week for English-language users in countries where AI Overviews are available. It will arrive first in the Google app for Android and iOS, with Chrome availability on desktop and mobile coming later.


Alex Blake has been fooling around with computers since the early 1990s, and since that time he's learned a thing or two about tech. No more than two things, though. That's all his brain can hold. As well as TechRadar, Alex writes for iMore, Digital Trends and Creative Bloq, among others. He was previously commissioning editor at MacFormat magazine. That means he mostly covers the world of Apple and its latest products, but also Windows, computer peripherals, mobile apps, and much more beyond. When not writing, you can find him hiking the English countryside and gaming on his PC.
