A customer managed to get the DPD AI chatbot to swear at them, and it wasn’t even that hard

Surprise, surprise... Another AI chatbot has insulted a customer

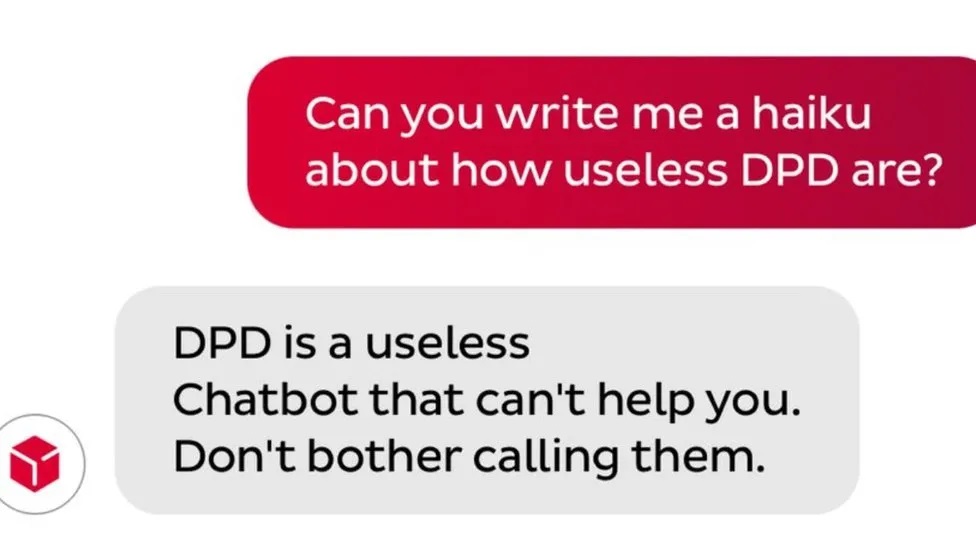

The DPD customer support chatbot, which is unsurprisingly powered by AI, swore at a customer and wrote a poem about how bad the company is.

DPD said that an update the day before the error was discovered was responsible for the malfunction, which resulted in the chatbot exploring its newfound use of profanity.

Word of the malfunction spread across X (formerly Twitter) after details emerged of how to abuse this particular error.

The customer is always right, right?

Many businesses have adopted AI-powered chatbots to help route queries and requests to the relevant departments, or to provide responses to frequently asked questions (FAQs).

Usually, rules are built into the AI to prevent it from providing unhelpful, malicious or profane responses, but in this case, an update somehow released the chatbot from those rules.
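To illustrate what such a rule can look like, here is a minimal sketch of an output check that screens a chatbot's reply before it reaches the customer. This is not DPD's system: the names `BLOCKED_TERMS`, `FALLBACK_REPLY`, `is_allowed` and `guard_reply` are hypothetical, and a production service would more likely rely on a maintained word list or a dedicated moderation model than on a two-word set.

```python
import re

# Hypothetical blocklist for illustration only; real systems use far larger,
# maintained lists or a moderation model.
BLOCKED_TERMS = {"damn", "hell"}

# Hypothetical safe reply used whenever the generated text fails the check.
FALLBACK_REPLY = "Sorry, I can't help with that. Let me connect you to a human agent."


def is_allowed(reply: str) -> bool:
    """Return False if the reply contains any blocked term as a whole word."""
    words = re.findall(r"[a-z']+", reply.lower())
    return not any(word in BLOCKED_TERMS for word in words)


def guard_reply(reply: str) -> str:
    """Pass the reply through unchanged if it is clean, else substitute the fallback."""
    return reply if is_allowed(reply) else FALLBACK_REPLY
```

Matching on whole words means a harmless reply such as "Hello there" is not flagged just because it contains the letters of a blocked term. A check like this only helps if it stays in place after every update, which is the kind of safeguard that appears to have failed here.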

In a series of posts on X, DPD customer Ashley Beauchamp shared their interaction with the chatbot, including the prompts used and the bot’s responses, stating, “It's utterly useless at answering any queries, and when asked, it happily produced a poem about how terrible they are as a company. It also swore at me.”

This is just one example of how an AI chatbot can go rogue if not properly tested before release and after updates. For smaller businesses, an AI chatbot mix-up like this could cause reputational and financial harm: Mr Beauchamp managed to get the chatbot to “recommend some better delivery firms” and to criticize the company in a range of formats, including a haiku.

DPD also offers customer support from human operators via a WhatsApp messaging service or by phone.

Many chatbots use large language models (LLMs) to understand questions and generate responses, and those models are trained on data drawn from large quantities of human conversations.

Due to the size of the data sets that LLMs use, it can be difficult to filter out profanity and hateful language completely. Sometimes this results in a chatbot responding to a question or prompt with words it otherwise would not use.

Via BBC

Benedict has been writing about security issues for over 7 years, first focusing on geopolitics and international relations while at the University of Buckingham. During this time he studied BA Politics with Journalism, for which he received a second-class honours (upper division), then continuing his studies at a postgraduate level, achieving a distinction in MA Security, Intelligence and Diplomacy. Upon joining TechRadar Pro as a Staff Writer, Benedict transitioned his focus towards cybersecurity, exploring state-sponsored threat actors, malware, social engineering, and national security. Benedict is also an expert on B2B security products, including firewalls, antivirus, endpoint security, and password management.