AI LLM provider backed by MLPerf cofounder bets barn on mature AMD Instinct MI GPU — but where are the MI300s?

Lamini wants enterprises to buy their own LLM superstation to run LLMs in virtual PCs or on-premise

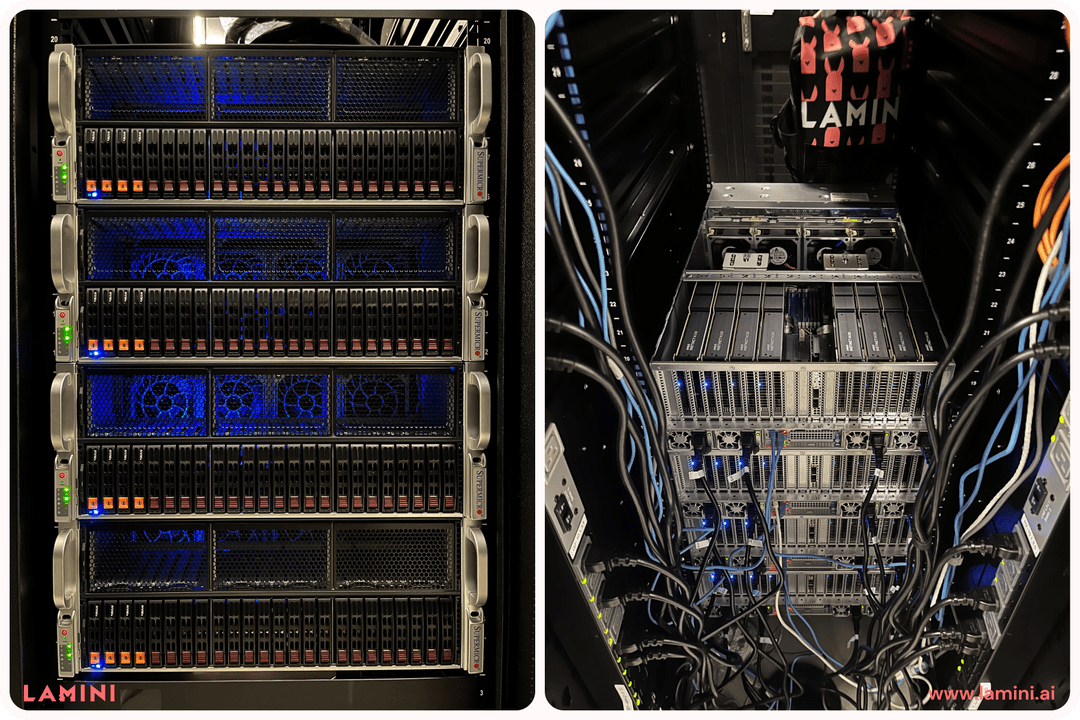

With demand for enterprise-grade large language models (LLMs) surging over the last year or so, Lamini has opened the doors to its LLM Superstation powered by AMD’s Instinct MI GPUs.

The firm claims it has been quietly running LLMs in production on more than 100 AMD Instinct GPUs for the last year, since before ChatGPT launched. With its LLM Superstation, it is opening that infrastructure to more potential customers who want to run their own models.

These platforms are powered by AMD Instinct MI210 and MI250 accelerators, as opposed to the industry-leading Nvidia H100 GPUs. By opting for its AMD GPUs, Lamini quips, businesses “can stop worrying about the 52-week lead time”.

AMD vs Nvidia GPUs for LLMs

Although Nvidia’s GPUs, including the H100 and A100, are the ones most commonly used to power LLMs such as ChatGPT, AMD’s own hardware is comparable on paper.

For example, the Instinct MI250 offers up to 362 teraflops of computing power for AI workloads, with the MI250X pushing this to 383 teraflops. The Nvidia A100 GPU, by way of contrast, offers up to 312 teraflops of computing power, according to TechRadar Pro sister site Tom’s Hardware.
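To put those quoted peaks side by side, here is a minimal Python sketch that expresses each figure as a multiple of the A100's. It uses only the numbers cited above; the dictionary and the `relative_peak` helper are illustrative names, and peak teraflops say nothing about sustained performance on a real LLM workload.

```python
# Peak figures as quoted in the article (teraflops for AI workloads).
PEAK_TFLOPS = {
    "Instinct MI250": 362,
    "Instinct MI250X": 383,
    "Nvidia A100": 312,
}


def relative_peak(name: str, baseline: str = "Nvidia A100") -> float:
    """Return the quoted peak of `name` as a multiple of `baseline`'s peak."""
    return PEAK_TFLOPS[name] / PEAK_TFLOPS[baseline]


if __name__ == "__main__":
    for gpu in PEAK_TFLOPS:
        # Ratio of quoted peaks only; real-world throughput will differ.
        print(f"{gpu}: {relative_peak(gpu):.2f}x the A100's quoted peak")
```

On these figures the MI250 and MI250X come out roughly 16% and 23% ahead of the A100 respectively.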

"Using Lamini software, ROCm has achieved software parity with CUDA for LLMs,” said Lamini CTO Greg Diamos, who is also the cofounder of MLPerf. “We chose the Instinct MI250 as the foundation for Lamini because it runs the biggest models that our customers demand and integrates finetuning optimizations.

“We use the large HBM capacity (128GB) on MI250 to run bigger models with lower software complexity than clusters of A100s."
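Diamos's point about memory capacity can be illustrated with a rough back-of-the-envelope sketch. It assumes 16-bit weights (2 bytes per parameter) and counts the weights alone, ignoring activations, caches and any finetuning state. The 128GB figure comes from the quote above; the 80GB figure for the A100 and the 50-billion-parameter model size are assumptions chosen for illustration, and the function names are hypothetical.

```python
def weight_footprint_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes).

    Assumes every parameter is stored at `bytes_per_param` bytes
    (2 bytes corresponds to 16-bit weights).
    """
    return params_billions * bytes_per_param


def fits(params_billions: float, hbm_gb: float, bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit in `hbm_gb` of accelerator memory."""
    return weight_footprint_gb(params_billions, bytes_per_param) <= hbm_gb


if __name__ == "__main__":
    MI250_HBM_GB = 128  # capacity cited in the quote above
    A100_HBM_GB = 80    # assumption: the larger A100 memory variant
    model_b = 50        # hypothetical model size, in billions of parameters

    print(f"Weights: {weight_footprint_gb(model_b):.0f} GB")
    print(f"Fits on one MI250: {fits(model_b, MI250_HBM_GB)}")
    print(f"Fits on one A100:  {fits(model_b, A100_HBM_GB)}")
```

Under these assumptions a model of that size would need to be split across several A100s but not across MI250s, which is the kind of software complexity the quote refers to.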

AMD’s GPUs can, in theory, compete with Nvidia’s. But the real crux is availability: systems such as Lamini’s LLM Superstation let enterprises take on workloads immediately rather than wait for hardware.

There is also a question mark, however, over AMD’s next-in-line GPU, the MI300. Businesses are currently able to sample the MI300A, while the MI300X will be sampled in the coming months.

According to Tom’s Hardware, the MI300X offers up to 192GB of memory, more than double the H100’s 80GB, although its compute performance is not yet fully known. Nevertheless, it is expected to be comparable to the H100. What would give Lamini’s LLM Superstation a real boost is infrastructure built on these next-gen GPUs.

Keumars Afifi-Sabet is the Technology Editor for Live Science. He has written for a variety of publications including ITPro, The Week Digital and ComputerActive. He has worked as a technology journalist for more than five years, having previously held the role of features editor with ITPro. In his previous role, he oversaw the commissioning and publishing of long form in areas including AI, cyber security, cloud computing and digital transformation.