AMD debuts new Pensando chips and networking technology to advance AI performance

AI networking takes a leap

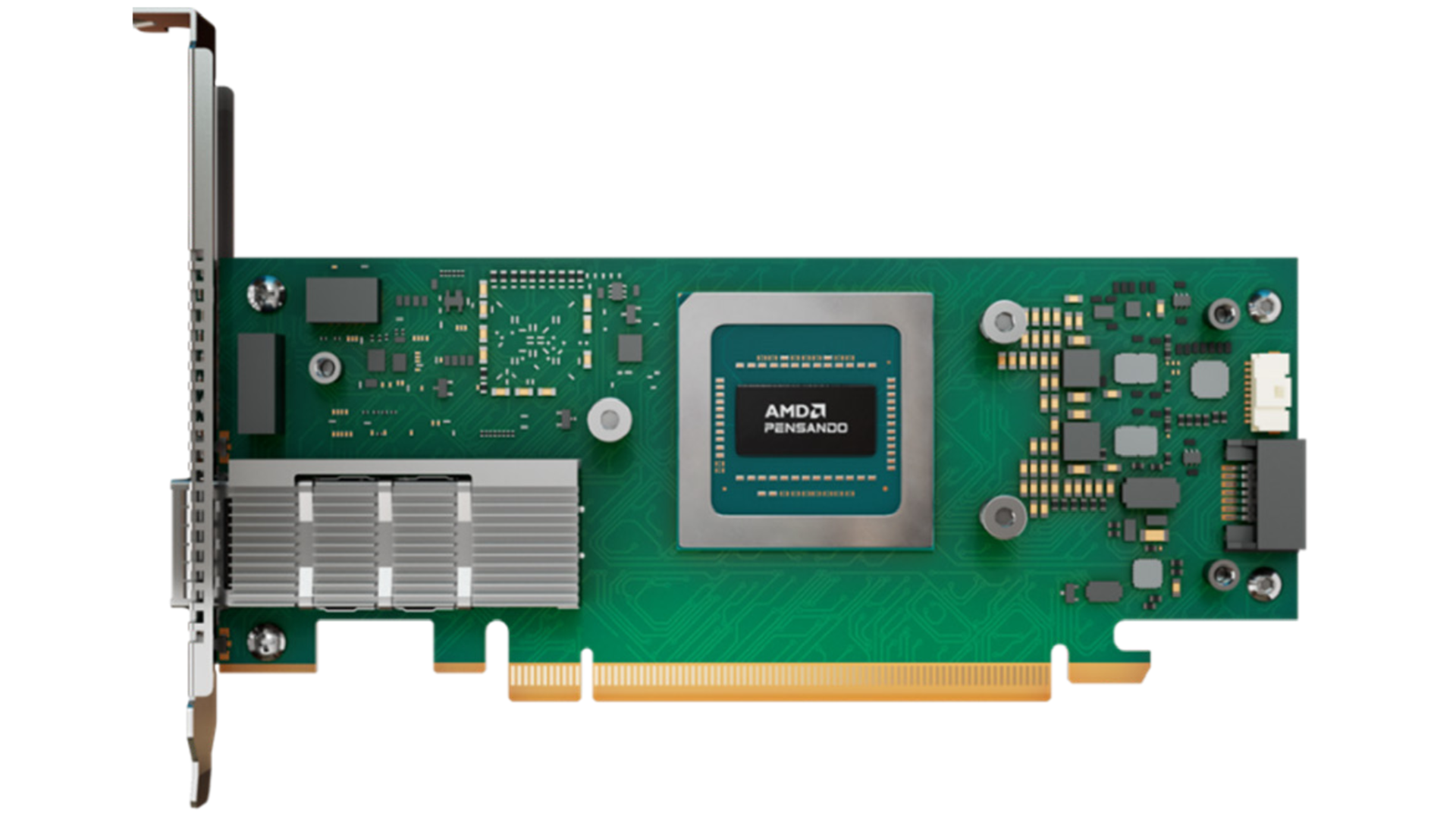

AMD is looking to enhance AI networking and infrastructure with two new products, the Pensando Salina 400 DPU and the Pensando Pollara 400 AI NIC. Designed to make AI networking more efficient and easier to scale, the new chips are AMD's answer to the growing demands of hyperscalers and organizations seeking to optimize their AI and high-performance computing (HPC) systems. They are built to handle the increasingly complex data flows involved in AI infrastructure, improving the performance of both CPUs and accelerators.

The AMD Pensando Salina 400 DPU (data processing unit) is the third generation of the chip and doubles the performance and bandwidth of its predecessor. That headroom helps it handle the massive data loads, transfer rates, and processing speeds that businesses running AI at scale need. The Salina 400 DPU manages everything from software-defined networking (SDN), firewalls, and encryption to load balancing and network address translation (NAT). It also handles storage offloading to free up CPU resources.

Pensando Pollara

On the back end, the Pensando Pollara 400 AI NIC (network interface card) is a networking card designed specifically to support the complex data transfer needs of AI and HPC systems. According to AMD, it is the first NIC to be Ultra Ethernet Consortium (UEC) ready, and it promises up to six times the performance of its predecessors on AI workloads.

The Pollara 400 NIC uses a specialized processor to optimize AI networking. It incorporates intelligent multipathing, which spreads data flows across multiple routes to avoid congestion. It also relies on path-aware congestion control to steer data away from congested paths and keep the network running at optimal speeds. Finally, fast failover lets the NIC detect and bypass network failures quickly, keeping communication between GPUs flowing at high throughput.
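AMD does not describe its actual algorithms here, so the following is only a generic, minimal sketch of how the three ideas above can fit together: traffic is split across several paths (multipathing), paths above a congestion threshold are avoided (path-aware congestion control), and a path is dropped as soon as a failure is detected (fast failover). The `Path` and `MultipathSender` names, the 0.0 to 1.0 congestion metric, and the `congestion_threshold` parameter are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """One hypothetical route between two GPUs (illustrative, not AMD's model)."""
    name: str
    congestion: float  # assumed metric: 0.0 = idle, 1.0 = fully congested
    up: bool = True    # set to False once a failure is detected on this route


class MultipathSender:
    """Toy model of multipathing, path-aware congestion control, and failover.

    This is an illustration of the general concepts only, not AMD's algorithm.
    """

    def __init__(self, paths, congestion_threshold=0.8):
        self.paths = list(paths)
        self.congestion_threshold = congestion_threshold  # hypothetical knob

    def usable_paths(self):
        # Fast failover: never consider a path that has been marked down.
        live = [p for p in self.paths if p.up]
        # Path-aware congestion control: avoid paths above the threshold,
        # but fall back to any live path if every one of them is congested.
        cool = [p for p in live if p.congestion < self.congestion_threshold]
        return cool or live

    def spread(self, packets):
        """Split `packets` across usable paths in proportion to spare capacity."""
        candidates = self.usable_paths()
        if not candidates:
            raise RuntimeError("no live path available")
        # Multipathing: weight each path by its spare capacity (1 - congestion).
        weights = [max(1.0 - p.congestion, 0.01) for p in candidates]
        total = sum(weights)
        shares = [packets * w / total for w in weights]
        # Largest-remainder rounding so the integer counts sum to `packets`.
        counts = [int(s) for s in shares]
        leftover = packets - sum(counts)
        by_remainder = sorted(
            range(len(shares)), key=lambda i: shares[i] - counts[i], reverse=True
        )
        for i in by_remainder[:leftover]:
            counts[i] += 1
        return {p.name: n for p, n in zip(candidates, counts)}

    def mark_failed(self, name):
        # Fast failover: stop using a path the moment a failure is reported.
        for p in self.paths:
            if p.name == name:
                p.up = False


if __name__ == "__main__":
    sender = MultipathSender([Path("A", 0.2), Path("B", 0.5), Path("C", 0.9)])
    print(sender.spread(100))  # C is skipped as congested
    sender.mark_failed("A")
    print(sender.spread(100))  # all traffic shifts to B
```

With paths A (20% congested), B (50%), and C (90%), the sketch sends 62 packets over A and 38 over B and skips C. After A is marked failed, all 100 go over B. Weighting by spare capacity is just one simple policy; real hardware makes these decisions per packet, at line rate, using live telemetry rather than a static number.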

The scalability of the Pollara 400 NIC is also notable, as it can manage large networks of AI clusters without increasing latency. That makes for more reliable AI infrastructure, a key requirement for businesses that want to use AI in their operations.

The Pollara 400 NIC also meets the specifications of the Ultra Ethernet Consortium (UEC), a growing organization working to adapt traditional Ethernet technology for AI and HPC workloads. The UEC now has 97 members, making it a major player in the networking industry. It is expected to release its 1.0 specification next year, just as the Pollara 400 NIC becomes commercially available. The specification will build on existing Ethernet standards, reusing much of the original technology. AI and HPC workloads will get different profiles to match their different needs; by defining separate protocols for each, the UEC aims to raise performance while keeping Ethernet technology viable.

As AI continues to play an increasingly critical role in a growing number of industries, demand for fast, reliable networking infrastructure to support it will rise in tandem. AMD’s latest offerings show how keen the company is to stay at the forefront of this shift, even as the AI needs of businesses evolve and expand.

Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.