Hey Presto! Nvidia pulls software hack out of AI hat and doubles performance of H100 GPU for free

TensorRT-LLM open source update features a revolutionary new processing technique

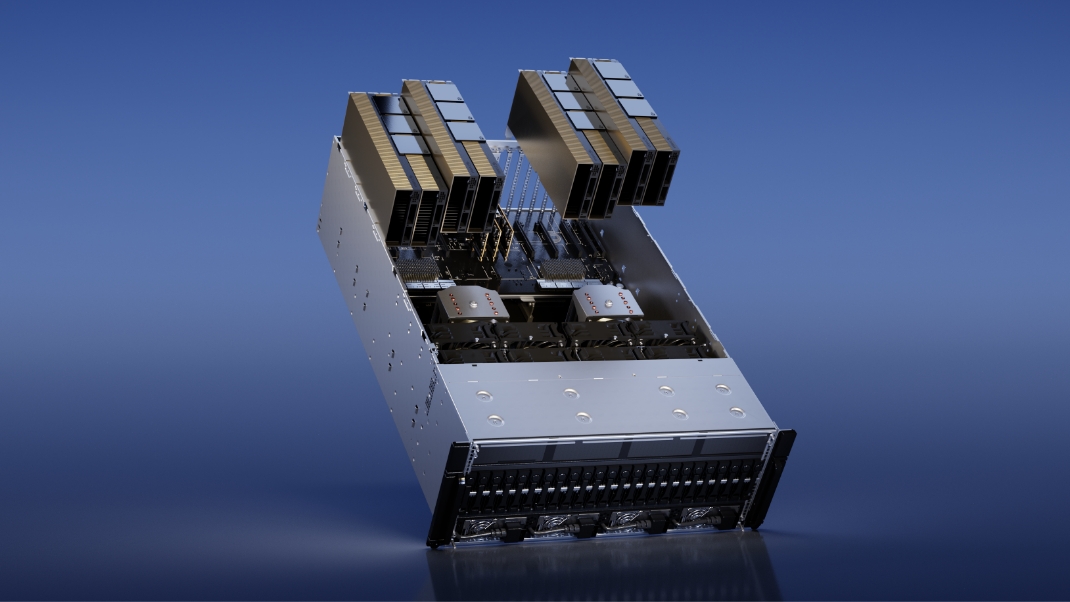

Nvidia is banding together with a list of tech partners on a game-changing piece of software that’s set to double the performance of its flagship H100 Tensor Core GPUs.

The open source TensorRT-LLM update, which is set for release in the coming weeks, lets an H100 running the new software outperform the A100 eightfold, whereas H100s previously outperformed the A100 by just fourfold. This was tested on GPT-J 6B, using a benchmark that summarises articles from CNN and Daily Mail.

When tested on Meta’s Llama 2 LLM, TensorRT-LLM-powered H100s outperformed A100s by 4.6 times – versus 2.6 times before the update.
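To see what these figures imply for the H100 itself, the quick calculation below divides the speedup over the A100 after the update by the speedup before it. It is only a sketch, and it assumes both figures in each pair share the same A100 baseline; the function name `update_gain` is made up for illustration.

```python
def update_gain(speedup_after: float, speedup_before: float) -> float:
    """Gain attributable to the software update alone.

    Assumes both speedups are measured against the same A100 baseline.
    """
    return speedup_after / speedup_before


# Figures quoted in the article (H100 vs A100, after and before the update).
gptj_gain = update_gain(8.0, 4.0)    # GPT-J 6B
llama2_gain = update_gain(4.6, 2.6)  # Llama 2

print(f"GPT-J 6B: {gptj_gain:.2f}x, Llama 2: {llama2_gain:.2f}x")
```

On these numbers the update doubles H100 throughput on GPT-J 6B and delivers a somewhat smaller gain, roughly 1.8 times, on Llama 2.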

Nvidia H100s faster than ever

The versatility and dynamism of large language models (LLMs) can make it difficult to batch requests and execute them in parallel, which means some requests finish much earlier than others.

To solve this, Nvidia and its partners built a more powerful scheduling technique called in-flight batching into TensorRT-LLM. This takes advantage of the fact that text generation can be broken down into multiple subtasks.

Put simply, instead of waiting for every request in a batch to finish before moving on to the next set of requests, the system removes finished requests from the batch straight away and starts processing new ones while the others are still in flight.
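The difference is easiest to see in a toy simulation. The sketch below is not TensorRT-LLM code; it assumes each request needs a known number of generation steps and that the GPU can work on a fixed number of requests at once. The function names and the example workload are hypothetical.

```python
from collections import deque


def static_batching(lengths, batch_size):
    """Steps needed when each batch runs until its longest request finishes."""
    steps = 0
    for start in range(0, len(lengths), batch_size):
        steps += max(lengths[start:start + batch_size])
    return steps


def in_flight_batching(lengths, batch_size):
    """Steps needed when a finished request's slot is refilled immediately."""
    waiting = deque(lengths)
    active = []  # remaining steps for each request currently in flight
    steps = 0
    while waiting or active:
        # Fill any free slots with waiting requests.
        while waiting and len(active) < batch_size:
            active.append(waiting.popleft())
        # One generation step: every in-flight request emits one token,
        # and requests with nothing left to generate leave the batch.
        active = [remaining - 1 for remaining in active if remaining > 1]
        steps += 1
    return steps


# Hypothetical workload: tokens to generate per request, two slots on the GPU.
lengths = [2, 8, 3, 8, 2, 3]
static_steps = static_batching(lengths, batch_size=2)
in_flight_steps = in_flight_batching(lengths, batch_size=2)
print(static_steps, in_flight_steps)
```

In this made-up workload the static scheduler needs 19 steps because short requests sit idle until the longest one in their batch is done, while the in-flight scheduler needs 13, which is the minimum possible for 26 tokens across two slots.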

TensorRT-LLM comprises the TensorRT deep learning compiler and includes optimized kernels, pre-processing and post-processing steps, as well as multi-GPU and multi-node communication primitives.

The result? Groundbreaking performance on Nvidia’s GPUs paving the way for new large language model experimentation, quick customization, and peak performance.

The software uses tensor parallelism, in which individual weight matrices are split across devices. This allows efficient inference at scale, with each model running in parallel across multiple GPUs and multiple servers.
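As a rough illustration of the idea, the sketch below splits one weight matrix column-wise into two shards, multiplies the same input by each shard as if on separate devices, and then joins the partial results. It uses plain Python lists rather than real GPUs, the helper names are invented for this example, and it does not reflect how TensorRT-LLM itself partitions its models.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def split_columns(w, parts):
    """Split matrix w column-wise into `parts` equally sized shards."""
    width = len(w[0]) // parts
    return [[row[i * width:(i + 1) * width] for row in w]
            for i in range(parts)]


x = [[1, 2],
     [3, 4]]            # activations: 2 tokens x 2 features
w = [[1, 0, 2, 1],
     [0, 1, 1, 3]]      # weight matrix: 2 features x 4 outputs

# Each shard would live on its own (hypothetical) device.
shards = split_columns(w, parts=2)
partials = [matmul(x, shard) for shard in shards]

# Joining the partial outputs column-wise stands in for the
# communication step between devices.
combined = [sum((p[r] for p in partials), []) for r in range(len(x))]
print(combined)
```

Each device only ever holds half of the weights, yet the joined output matches what a single device would compute with the full matrix.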

TensorRT-LLM also includes fully optimized and ready-to-run versions of popular LLMs including Llama 2, GPT-2 and GPT-3, as well as Falcon, Mosaic MPT, BLOOM, and dozens of others. These can be accessed through a Python API.

The update is available in early access, and will soon be integrated into the Nvidia NeMo framework, which is part of Nvidia AI Enterprise. Researchers can access this through the NeMo framework, the NGC portal, or through the source repository on GitHub.

Keumars Afifi-Sabet is the Technology Editor for Live Science. He has written for a variety of publications including ITPro, The Week Digital and ComputerActive. He has worked as a technology journalist for more than five years, having previously held the role of features editor with ITPro. In his previous role, he oversaw the commissioning and publishing of long-form content in areas including AI, cyber security, cloud computing and digital transformation.