Inspired by the human brain: how IBM's latest AI chip could be 25 times more efficient than GPUs by integrating memory and computing, but neither Nvidia nor AMD has to worry just yet

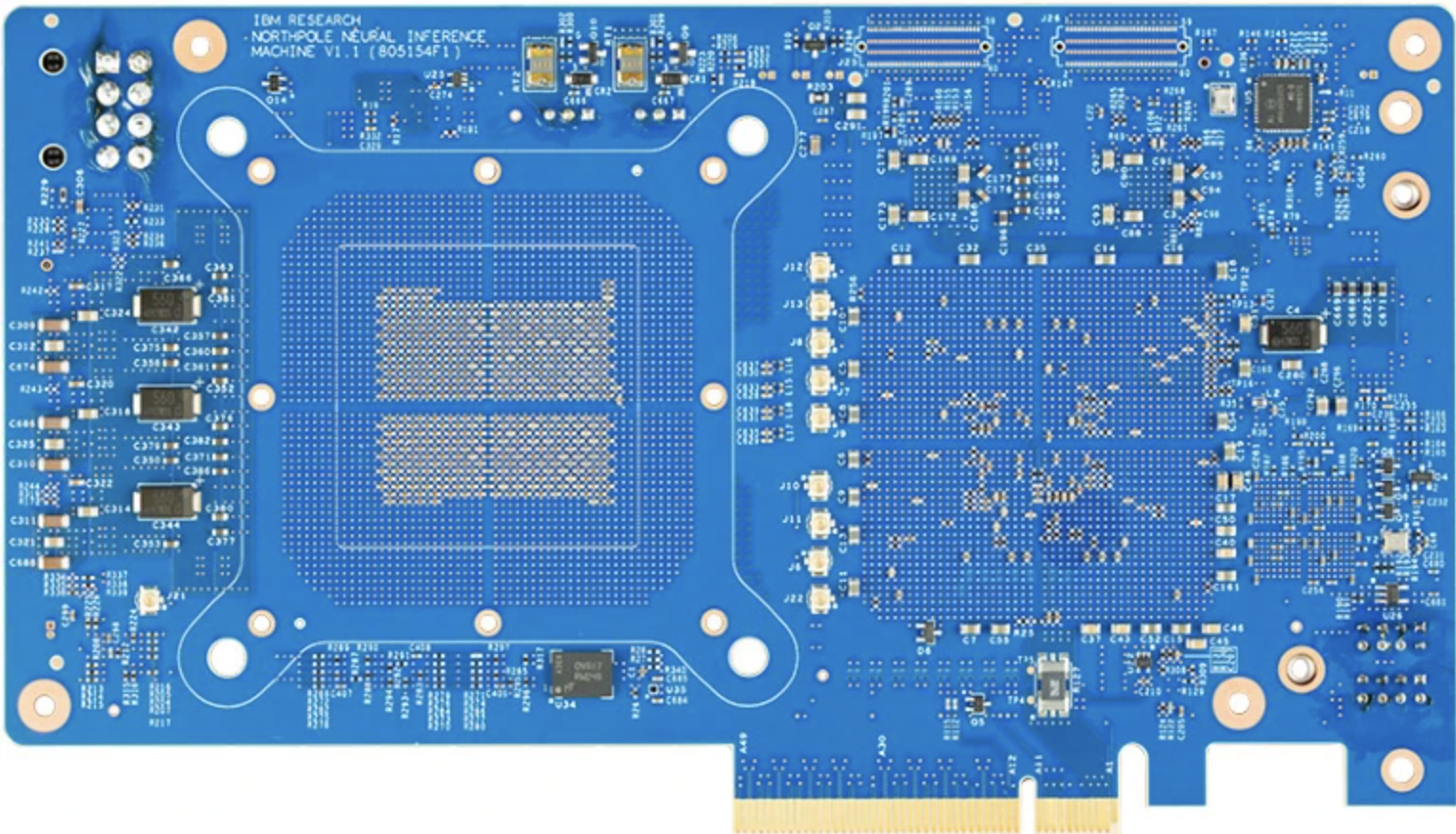

The NorthPole processor does away with RAM to ramp up speed

Researchers at IBM have developed a brain-inspired processor that runs neural networks for AI tasks much faster than conventional chips by removing the need to access external memory.

Even the best CPUs hit bottlenecks when processing data, because calculations depend on RAM and data must be shuttled back and forth between the processor and memory, which creates inefficiencies. According to Nature, IBM hopes to solve this problem, known as the von Neumann bottleneck, with its NorthPole chip.

The NorthPole processor embeds a small amount of memory into each of its 256 cores, which are linked in a way similar to how white matter connects different parts of the brain. Because the cores no longer need to keep fetching data from external memory, the chip largely sidesteps the bottleneck.
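To make the data-movement argument concrete, here is a toy model that counts how many bytes travel per inference under two designs. It is a minimal sketch under loudly stated assumptions, not a description of NorthPole's real data flow: the `Layer` class, the 8-bit values and the three-layer network are all hypothetical. In the "conventional" case the weights live in external RAM and cross the memory bus on every pass; in the "near-memory" case each core keeps its slice of the weights locally, so only activations move.

```python
# Toy model of the von Neumann bottleneck. Illustrative assumptions only,
# not NorthPole's actual design or measured figures:
#   - conventional: weights sit in external RAM and cross the memory bus every inference
#   - near-memory: each core keeps its slice of the weights locally, so only activations move
from dataclasses import dataclass


@dataclass
class Layer:
    inputs: int
    outputs: int
    bytes_per_value: int = 1  # assumed 8-bit weights and activations

    @property
    def weight_bytes(self) -> int:
        return self.inputs * self.outputs * self.bytes_per_value

    @property
    def activation_bytes(self) -> int:
        return self.outputs * self.bytes_per_value


def bytes_moved_conventional(layers: list[Layer]) -> int:
    """Weights are fetched from off-chip RAM and outputs written back on each pass."""
    return sum(layer.weight_bytes + layer.activation_bytes for layer in layers)


def bytes_moved_near_memory(layers: list[Layer]) -> int:
    """Weights stay resident in each core; only each layer's outputs are passed along."""
    return sum(layer.activation_bytes for layer in layers)


if __name__ == "__main__":
    # Hypothetical three-layer network.
    net = [Layer(1024, 1024), Layer(1024, 1024), Layer(1024, 10)]
    print("conventional:", bytes_moved_conventional(net), "bytes")  # 2109450 bytes
    print("near-memory: ", bytes_moved_near_memory(net), "bytes")   # 2058 bytes
```

Even in this simplified picture, keeping weights next to the compute cuts the traffic by roughly three orders of magnitude, which is the intuition behind the efficiency claims; real energy savings depend on details the toy model ignores.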

Seeking inspiration from the human brain

IBM’s NorthPole is more of a proof of concept than a product that can compete with the likes of AMD and Nvidia. For example, it includes only 224MB of memory, which is nowhere near the capacity needed for large-scale AI workloads such as large language models (LLMs).
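A quick back-of-envelope calculation shows why 224MB rules out LLMs. The sketch below assumes, hypothetically, that all 224MB (treated here as MiB) is available for weights and that every weight is stored at a fixed precision; in practice activations also need space, so real headroom would be smaller. The two model sizes are illustrative round numbers, not specific products.

```python
# Does a model's weight storage fit in NorthPole's 224MB of on-chip memory?
# Assumptions (hypothetical): all 224MB holds weights, MB is treated as MiB,
# and every weight uses the same number of bits.
ON_CHIP_BYTES = 224 * 1024**2


def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Bytes needed to store num_params weights at the given precision."""
    return num_params * bits_per_weight // 8


def fits_on_chip(num_params: int, bits_per_weight: int) -> bool:
    return weight_bytes(num_params, bits_per_weight) <= ON_CHIP_BYTES


if __name__ == "__main__":
    examples = {
        "25M-parameter vision model": 25_000_000,
        "7B-parameter language model": 7_000_000_000,
    }
    for name, params in examples.items():
        for bits in (8, 4):
            mib = weight_bytes(params, bits) / 1024**2
            print(f"{name} @ {bits}-bit: {mib:,.1f} MiB, fits={fits_on_chip(params, bits)}")
```

A model with tens of millions of parameters fits comfortably, while a 7-billion-parameter model needs gigabytes even at 4-bit precision, far beyond what the chip holds.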

The chip can also only run neural networks that have already been trained on separate systems. Its real standout, thanks to its unusual architecture, is energy efficiency. The researchers claim that if NorthPole were built today using state-of-the-art manufacturing processes, it would be 25 times more efficient than the best GPUs and CPUs.

“Its energy efficiency is just mind-blowing,” said Damien Querlioz, a nanoelectronics researcher at the University of Paris-Saclay in Palaiseau, according to Nature. The work, published in Science, shows that computing and memory can be integrated on a large scale, he added. “I feel the paper will shake the common thinking in computer architecture.”

NorthPole can also outpace other AI systems in tasks such as image recognition. In the neural networks it runs, a bottom layer takes in data, such as the pixels in an image, and subsequent layers begin detecting patterns that become more complex as information passes from one layer to the next. The uppermost layer then outputs the end result, such as whether an image contains a particular object.
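The layer-by-layer process described above can be sketched as a tiny feedforward network in plain Python. This is a generic illustration, not NorthPole's software stack: the 8x8 "image", the layer sizes, the random untrained weights and the two-way "object present / absent" output are all hypothetical. Because the chip only runs networks trained elsewhere, a real deployment would load trained weights rather than random ones.

```python
import math
import random


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn the final layer's scores into probabilities that sum to 1."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(n_in, n_out, rng):
    """Hypothetical untrained layer; a real system would load trained weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(pixels, layers):
    """Bottom layer takes raw pixels; each later layer works on the previous layer's output."""
    x = pixels
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:
            x = relu(x)
    return softmax(x)  # uppermost layer: [P(object present), P(object absent)]


if __name__ == "__main__":
    rng = random.Random(0)
    net = [random_layer(64, 32, rng), random_layer(32, 16, rng), random_layer(16, 2, rng)]
    image = [rng.random() for _ in range(64)]  # hypothetical 8x8 grayscale image
    print(forward(image, net))
```

Each `dense` call reads every weight in its layer, which is exactly the traffic that keeping weights in each core's local memory is meant to avoid.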

Keumars Afifi-Sabet is the Technology Editor for Live Science. He has written for a variety of publications including ITPro, The Week Digital and ComputerActive. He has worked as a technology journalist for more than five years, having previously held the role of features editor with ITPro. In that role, he oversaw the commissioning and publishing of long-form content on topics including AI, cyber security, cloud computing and digital transformation.