'It is obscenely fast' — Biggest rival to Nvidia demos million-core super AI inference chip that obliterates the DGX100 with 44GB of super fast memory and you can even try it for free

Cerebras is offering "instant" AI

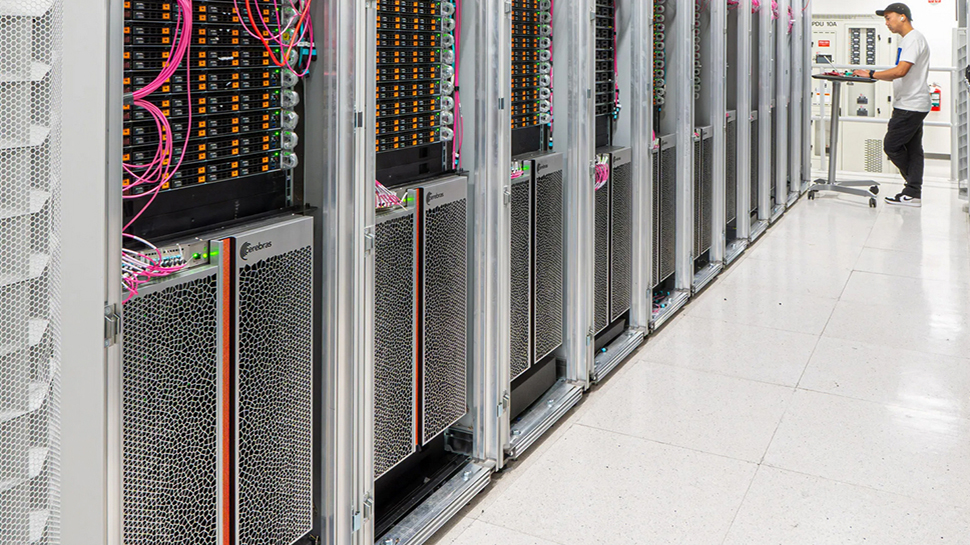

Cerebras has unveiled its latest AI inference chip, which is being touted as a formidable rival to Nvidia’s DGX100.

The chip features 44GB of high-speed memory, allowing it to handle AI models with billions to trillions of parameters.

For models that surpass the memory capacity of a single wafer, Cerebras can split them at layer boundaries, distributing them across multiple CS-3 systems. A single CS-3 system can accommodate 20 billion parameter models, while 70 billion parameter models can be managed by as few as four systems.

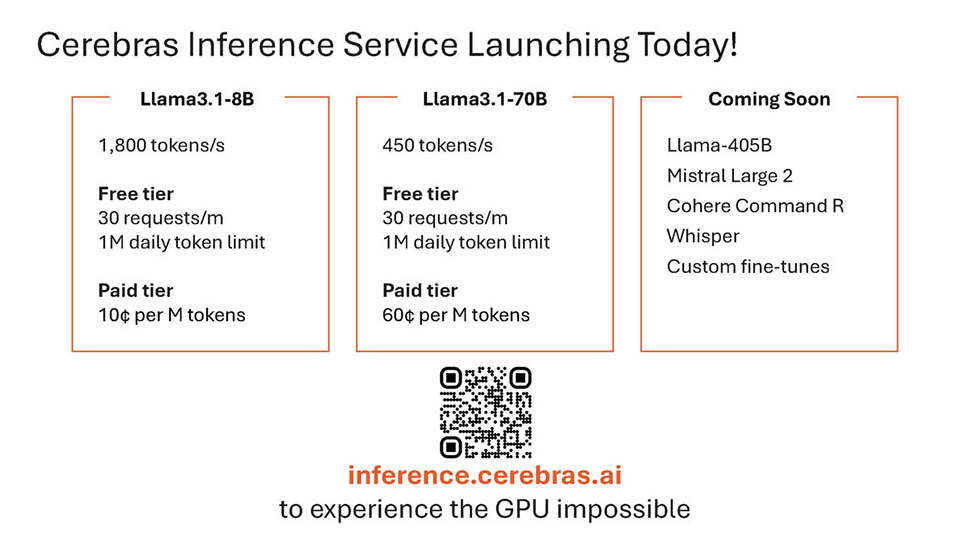

Additional model support coming soon

Cerebras emphasizes the use of 16-bit model weights to maintain accuracy, contrasting with some competitors who reduce weight precision to 8-bit, which can degrade performance. According to Cerebras, its 16-bit models perform up to 5% better in multi-turn conversations, math, and reasoning tasks compared to 8-bit models, ensuring more accurate and reliable outputs.

The Cerebras inference platform is available via chat and API access, and designed to be easily integrated by developers familiar with OpenAI’s Chat Completions format. The platform boasts the ability to run Llama3.1 70B models at 450 tokens per second, making it the only solution to achieve instantaneous speed for such large models. For developers, Cerebras is offering 1 million free tokens daily at launch, with pricing for large-scale deployments said to be significantly lower than popular GPU clouds.

Cerebras is initially launching with Llama3.1 8B and 70B models, with plans to add support for larger models like Llama3 405B and Mistral Large 2 in the near future. The company highlights that fast inference capabilities are crucial for enabling more complex AI workflows and enhancing real-time LLM intelligence, particularly in techniques like scaffolding, which requires substantial token usage.

Patrick Kennedy from ServeTheHome saw the product in action at the recent Hot Chips 2024 symposium, noting, “I had the opportunity to sit with Andrew Feldman (CEO of Cerebras) before the talk and he showed me the demos live. It is obscenely fast. The reason this matters is not just for human to prompt interaction. Instead, in a world of agents where computer AI agents talk to several other computer AI agents. Imagine if it takes seconds for each agent to come out with output, and there are multiple steps in that pipeline. If you think about automated AI agent pipelines, then you need fast inferencing to reduce the time for the entire chain.”

Are you a pro? Subscribe to our newsletter

Sign up to the TechRadar Pro newsletter to get all the top news, opinion, features and guidance your business needs to succeed!

Cerebras positions its platform as setting a new standard in open LLM development and deployment, offering record-breaking performance, competitive pricing, and broad API access. You can try it out by going to inference.cerebras.ai or by scanning the QR code in the slide below.

More from TechRadar Pro

Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he wrote for most of the UK’s PC magazines, and launched, edited and published a number of them too.