Meta's new AI card is one step toward reducing its reliance on Nvidia's GPUs: despite spending billions on H100s and A100s, Facebook's parent firm sees a clear path to an Nvidia-free future

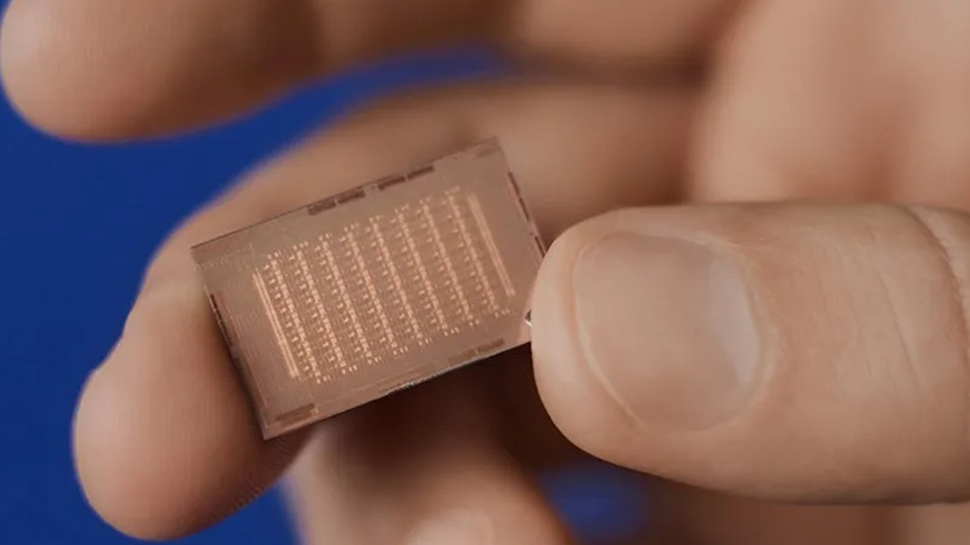

The company has lifted the lid on its next Meta Training and Inference Accelerator (MTIA) chip

Meta recently unveiled details of its AI training infrastructure, revealing that it currently relies on almost 50,000 Nvidia H100 GPUs to train its open source Llama 3 LLM.

Like a lot of major tech firms involved in AI, Meta wants to reduce its reliance on Nvidia’s hardware and has taken another step in that direction.

Meta already has its own AI inference accelerator, the Meta Training and Inference Accelerator (MTIA), which is tailored to the social media giant's in-house AI workloads, particularly those that improve experiences across its various products. The company has now shared details of its second-generation MTIA, which significantly improves on its predecessor.

Software stack

This revamped version of MTIA, which can handle inference but not training, doubles the compute and memory bandwidth of its predecessor while remaining closely tailored to Meta's workloads. It is designed to efficiently serve the ranking and recommendation models that deliver suggestions to users, and its architecture aims to strike the right balance of compute power, memory bandwidth, and memory capacity for these models. It also increases SRAM capacity, which lets the chip sustain high utilization even at small batch sizes.
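To see why on-chip memory matters at small batch sizes, here is a generic roofline-style calculation. It is a minimal sketch, not Meta's own analysis: it assumes a single fully connected layer `y = x @ W` with 1-byte (INT8) values and a hypothetical 4096 x 4096 weight matrix, and measures FLOPs per byte of off-chip traffic. When the weights must be streamed from DRAM for every batch, intensity grows roughly in proportion to batch size, so small batches leave compute units waiting on memory. If the weights stay resident in on-chip SRAM, the weight term drops out of off-chip traffic entirely.

```python
def arithmetic_intensity(batch: int, k: int, n: int,
                         bytes_per_elem: int = 1,
                         weights_on_chip: bool = False) -> float:
    """FLOPs per byte of off-chip traffic for y = x @ W, x: (batch, k), W: (k, n).

    Assumptions (illustrative only): 1-byte elements by default, and each
    activation and weight crosses the off-chip interface exactly once.
    """
    flops = 2 * batch * k * n  # one multiply and one add per weight, per sample
    activation_bytes = (batch * k + batch * n) * bytes_per_elem  # read x, write y
    # If weights live in on-chip SRAM, they generate no off-chip traffic.
    weight_bytes = 0 if weights_on_chip else k * n * bytes_per_elem
    return flops / (activation_bytes + weight_bytes)


if __name__ == "__main__":
    for batch in (1, 8, 64, 512):
        streamed = arithmetic_intensity(batch, 4096, 4096)
        resident = arithmetic_intensity(batch, 4096, 4096, weights_on_chip=True)
        print(f"batch={batch:4d}  streamed: {streamed:8.1f} FLOP/B  "
              f"resident: {resident:8.1f} FLOP/B")
```

With streamed weights, intensity climbs from roughly 2 FLOP/byte at batch 1 to about 819 at batch 512; with resident weights it stays at 4096 regardless of batch size in this simplified model.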

The new accelerator consists of an 8x8 grid of processing elements (PEs) and delivers 3.5 times the dense compute performance of MTIA v1 and reportedly seven times its sparse compute performance. The gains stem from architectural optimizations to the pipelining of sparse compute and to how data is fed into the PEs. Other key changes include triple the local storage, double the on-chip SRAM with 3.5x its bandwidth, and double the LPDDR5 capacity.
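The reported ratios can be encoded directly for quick sanity checks. The sketch below stores the article's v1-to-v2 multipliers (with MTIA v1 normalized to 1.0) and the 8x8 PE grid; the `estimate_v2` helper and any baseline passed to it are hypothetical. These are peak-spec ratios, so real workloads will not necessarily scale by the same factors.

```python
# MTIA v2 improvements over MTIA v1 as reported in the article (v1 = 1.0).
V2_MULTIPLIERS = {
    "dense_compute": 3.5,
    "sparse_compute": 7.0,
    "local_storage": 3.0,
    "onchip_sram_capacity": 2.0,
    "onchip_sram_bandwidth": 3.5,
    "lpddr5_capacity": 2.0,
}

GRID_ROWS, GRID_COLS = 8, 8
NUM_PES = GRID_ROWS * GRID_COLS  # an 8x8 grid gives 64 processing elements


def estimate_v2(v1_value: float, metric: str) -> float:
    """Hypothetical helper: scale a v1 peak figure by the reported multiplier."""
    return v1_value * V2_MULTIPLIERS[metric]


if __name__ == "__main__":
    # The sparse gain (7x) is twice the dense gain (3.5x).
    ratio = V2_MULTIPLIERS["sparse_compute"] / V2_MULTIPLIERS["dense_compute"]
    print(f"{NUM_PES} PEs; sparse-to-dense speedup ratio: {ratio:.1f}")
    # A hypothetical v1 baseline of 100 units of dense throughput.
    print(estimate_v2(100.0, "dense_compute"))
```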

Alongside the hardware, Meta is co-designing the software stack with the silicon to deliver an efficient end-to-end inference solution. The company says it has built a rack-based system that holds up to 72 accelerators and is designed to clock each chip at 1.35GHz and run it at 90W.
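For a sense of scale, the rack figures translate into a simple power budget. This back-of-the-envelope sketch multiplies the article's maximum of 72 accelerators by the 90W design point; it counts accelerators only and deliberately ignores host CPUs, networking, cooling, and power-conversion losses, so a complete rack would draw more in practice.

```python
ACCELERATORS_PER_RACK = 72  # maximum per rack, per the article
CHIP_POWER_W = 90           # design power per MTIA v2 chip, per the article


def accelerator_power_w(num_accelerators: int = ACCELERATORS_PER_RACK,
                        chip_power_w: int = CHIP_POWER_W) -> int:
    """Accelerator-only power; excludes hosts, NICs, fans, and PSU losses."""
    return num_accelerators * chip_power_w


if __name__ == "__main__":
    total = accelerator_power_w()
    print(f"{ACCELERATORS_PER_RACK} chips x {CHIP_POWER_W} W = "
          f"{total} W ({total / 1000:.2f} kW)")
```

A fully populated rack therefore budgets about 6.48 kW for the accelerators themselves.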

Among other developments, Meta says it has upgraded the fabric between accelerators, significantly increasing bandwidth and system scalability. Triton-MTIA, a compiler backend built to generate high-performance code for MTIA hardware, further optimizes the software stack.


The new MTIA won't have a massive impact on Meta's roadmap towards a future less reliant on Nvidia's GPUs, but it is another step in that direction.


Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he wrote for most of the UK’s PC magazines, and launched, edited and published a number of them too.