Nvidia unveils upgraded chip to power the next gen of AI

Nvidia extends its AI hardware pedigree with new Grace Hopper superchip

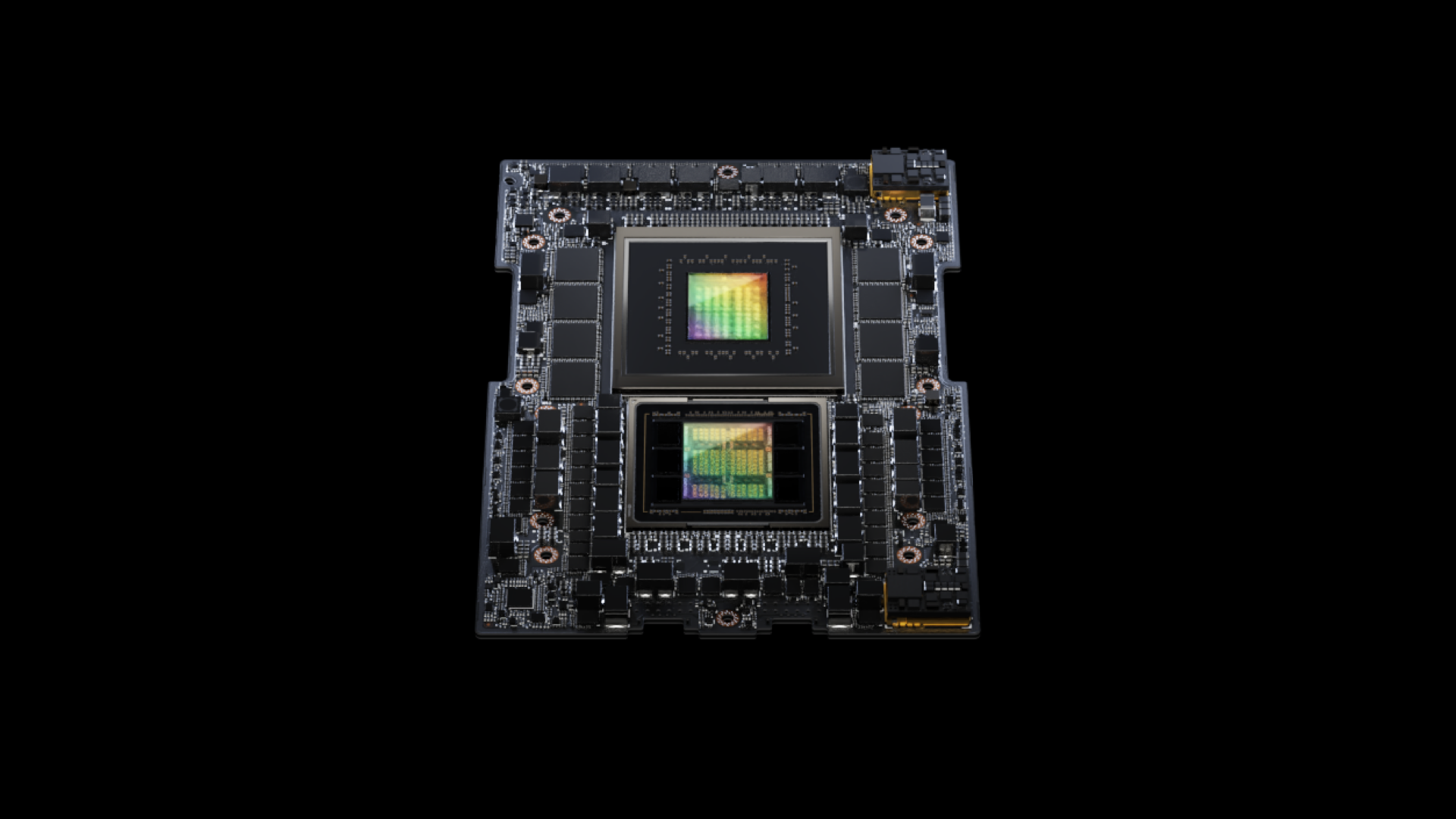

Nvidia has announced its next-generation AI chip platform, built around the new Grace Hopper Superchip with the world’s first HBM3e processor.

The new NVIDIA GH200 Grace Hopper platform is designed for heavy generative AI workloads, such as large language models (LLMs) in the vein of ChatGPT. Nvidia says it can also handle other AI applications, such as recommender systems and vector databases.

The dual configuration offers 3.5x more memory capacity and 3x more bandwidth than the current generation. It comprises a single server with 144 Arm Neoverse cores, eight petaflops of AI performance and 282GB of HBM3e memory.
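
As a rough illustration, the dual-configuration totals can be split per Superchip. This Python sketch assumes that "dual configuration" means two GH200 Superchips and that each total divides evenly between them; the names `DUAL_CONFIG` and `per_superchip` are hypothetical and not part of any Nvidia tool.

```python
# Back-of-the-envelope split of the dual-configuration figures quoted above.
# ASSUMPTION: the dual configuration pairs two GH200 Superchips and every
# total divides evenly between them. Names here are hypothetical.
DUAL_CONFIG = {
    "arm_neoverse_cores": 144,
    "ai_petaflops": 8,
    "hbm3e_gb": 282,
}
SUPERCHIPS = 2  # assumption: "dual configuration" = two Superchips


def per_superchip(totals: dict, count: int) -> dict:
    """Divide each dual-configuration total evenly across `count` Superchips."""
    return {name: value / count for name, value in totals.items()}


if __name__ == "__main__":
    for name, value in per_superchip(DUAL_CONFIG, SUPERCHIPS).items():
        # Prints 72 cores, 4 petaflops and 141GB of HBM3e per Superchip.
        print(f"{name}: {value:g} per Superchip")
```

Under that even-split assumption, each Superchip accounts for 72 Arm cores, four petaflops and 141GB of HBM3e.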

The next generation

Nvidia CEO Jensen Huang commented that the upgrades, "improve throughput, [add] the ability to connect GPUs to aggregate performance without compromise, and [feature] a server design that can be easily deployed across the entire data center."

The Grace Hopper Superchip can be connected to other Superchips via Nvidia NVLink, increasing the computing power needed to deploy the sizeable models behind generative AI.

With this connection, the GPUs have full access to CPU memory, which gives a combined 1.2TB of memory in the dual configuration.
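
The 1.2TB figure can be roughly reconstructed by adding the CPU memory the GPUs can reach to the 282GB of HBM3e. The sketch below assumes each Grace CPU carries 480GB of LPDDR5X, a figure the article does not state; the helper `combined_memory_gb` is hypothetical.

```python
# Sketch: reconstruct the "combined 1.2TB" figure for the dual configuration.
# ASSUMPTION: each Grace CPU has 480GB of LPDDR5X (not stated in the article).
LPDDR5X_PER_GRACE_GB = 480  # assumption
HBM3E_TOTAL_GB = 282        # from the article (dual configuration)
SUPERCHIPS = 2              # assumption: dual configuration = two Superchips


def combined_memory_gb(cpu_gb_each: int, hbm_total_gb: int, count: int) -> int:
    """Total CPU memory across `count` Superchips plus total GPU HBM3e."""
    return cpu_gb_each * count + hbm_total_gb


total = combined_memory_gb(LPDDR5X_PER_GRACE_GB, HBM3E_TOTAL_GB, SUPERCHIPS)
print(f"{total} GB ≈ {total / 1000:.1f} TB")  # 1242 GB ≈ 1.2 TB
```

If the 480GB assumption holds, 2 × 480GB + 282GB = 1,242GB, which rounds to the quoted 1.2TB.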

The new HBM3e memory is 50% faster than HBM3 and delivers a total of 10TB/sec of combined bandwidth, allowing the platform to run models 3.5x larger than the previous version while improving performance with 3x more memory bandwidth.

The new Grace Hopper Superchip platform with HBM3e is fully compatible with the Nvidia MGX server specification, which, the company claims, allows any manufacturer to add Grace Hopper to over a hundred server variations quickly and cost-effectively.

The news signals Nvidia's continued dominance in the AI hardware space: its A100 GPUs powered the machines behind ChatGPT, the infamous chatbot that kickstarted the brave new world of advanced automated computing. Nvidia then followed the A100 with its successor, the H100.

Nvidia also says it expects system makers to deliver the first systems based on the Grace Hopper platform in Q2 2024.

Lewis Maddison is a Reviews Writer for TechRadar. He previously worked as a Staff Writer for our business section, TechRadar Pro, where he gained experience with productivity-enhancing hardware, ranging from keyboards to standing desks. His area of expertise lies in computer peripherals and audio hardware, having spent over a decade exploring the murky depths of both PC building and music production. He also revels in picking up on the finest details and niggles that ultimately make a big difference to the user experience.