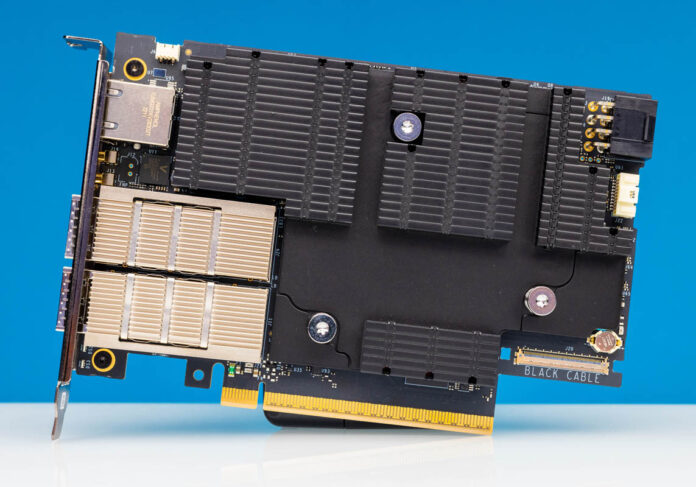

Nvidia’s BlueField-3 SuperNIC morphs into a special self-hosted storage powerhouse with an 80GBps memory boost and PCIe-ready architecture

This self-hosted model allows direct integration with NVMe SSDs and GPUs

- Nvidia unveils upgraded BlueField-3 DPUs

- New editions allow flexible storage server configurations

- BlueField-3 DPU offloads CPU tasks and reduces latency for storage-heavy environments

Nvidia has revealed a new iteration of its BlueField-3 Data Processing Unit (DPU) that is not just a regular SuperNIC but a self-hosted model designed mainly for storage.

The new offering greatly increases memory bandwidth compared to its predecessors. While the BlueField-2 DPU used a single-channel design, which resulted in lower memory bandwidth than the first generation, the BlueField-3 has dual 64-bit DDR5-5600 memory interfaces.

This upgrade translates to 80GBps of memory bandwidth, enabling faster and more efficient data processing, particularly for applications that rely on high-speed data access.
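As a rough sanity check on that figure, the theoretical peak of a DDR memory interface can be estimated as transfer rate times bus width times channel count. The sketch below is illustrative only: the function name and its parameters are hypothetical, and it computes a raw upper bound that ignores ECC, refresh and controller overhead, so it will not necessarily match a vendor-quoted number.

```python
def peak_bandwidth_gb_per_s(transfer_rate_mts: int, bus_width_bits: int, channels: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    transfer_rate_mts: transfers per second in millions (5600 for DDR5-5600)
    bus_width_bits:    data width of one interface (64 here)
    channels:          number of such interfaces (2 here)
    """
    bytes_per_transfer = bus_width_bits // 8
    # MT/s * bytes per transfer gives MB/s; divide by 1000 for GB/s.
    return transfer_rate_mts * bytes_per_transfer * channels / 1000


# Assumed inputs taken from the article: dual 64-bit DDR5-5600 interfaces.
bluefield3_peak = peak_bandwidth_gb_per_s(5600, 64, 2)
print(f"{bluefield3_peak:.1f} GB/s")  # prints "89.6 GB/s"
```

Under these assumptions the raw ceiling is 89.6 GB/s, which is in the same range as the 80GBps quoted for the BlueField-3.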

Self-hosted solutions for storage applications

The special version, classified as B3220SH, also introduces advanced capabilities for direct hardware connections. Because it can expose PCIe roots, this model integrates directly with NVMe SSDs and GPUs, bypassing the need for an external CPU.

This capability allows for greater flexibility in configuring storage solutions without relying on traditional x86 or Arm CPUs, enabling a more streamlined architecture for storage servers. An integrated PCIe switch extends this further by allowing multiple devices to be connected. This architecture not only simplifies data flow but also reduces latency and improves overall performance in storage-intensive applications.

The versatility of the BlueField-3 extends beyond storage, as its architecture supports applications across sectors such as high-performance computing (HPC) and artificial intelligence (AI). By offloading tasks from CPUs, the new model frees up valuable processing resources for revenue-generating workloads.


Efosa has been writing about technology for over 7 years, initially driven by curiosity but now fueled by a strong passion for the field. He holds both a Master's and a PhD in sciences, which provided him with a solid foundation in analytical thinking. Efosa developed a keen interest in technology policy, specifically exploring the intersection of privacy, security, and politics. His research delves into how technological advancements influence regulatory frameworks and societal norms, particularly concerning data protection and cybersecurity. Upon joining TechRadar Pro, in addition to privacy and technology policy, he is also focused on B2B security products. Efosa can be contacted at this email: udinmwenefosa@gmail.com
