Surviving Google’s JavaScript rendering shift: one month later

Google Search's newest requirement aims to "better protect" users

Google’s new mandate that all search requests must be rendered with JavaScript has sent shockwaves through the scraping community. In the past month, many legacy tools stumbled under the JS wall while forward-thinking platforms swiftly adapted to the new requirements. The industry is rethinking its infrastructure, and finding the right web data collection solution might become even harder.

As a security measure, Google Search's newest requirement aims to "better protect" users against malicious activity, such as bots and spam, and to improve the overall experience on Google Search. But what impact have users actually felt?

Partnerships & Performance Marketing Lead, Smartproxy.

What happened?

On January 15, 2025, Google flipped the switch on a major update: every search results page now requires JavaScript to display. No more simple HTTP requests and plain HTML parsing – the days of old-school scraping are over. Instead, if your bot doesn’t "speak" JavaScript, you’ll be greeted by a block page that the industry has started calling the JS wall.
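To make the idea of the JS wall concrete, here is a minimal sketch of how a scraper might notice that a plain HTTP response is a "JavaScript required" page rather than real results. The marker patterns and the `looks_like_js_wall` helper are assumptions made for illustration; the actual markup of Google's block page is not described in this article and may differ.

```python
import re

# Hypothetical markers: the real block page's wording is not given in the
# article, so these patterns are illustrative assumptions only.
JS_WALL_PATTERNS = [
    re.compile(r"<noscript\b", re.IGNORECASE),
    re.compile(r"enable\s+javascript", re.IGNORECASE),
]


def looks_like_js_wall(html: str, min_hits: int = 2) -> bool:
    """Return True when static HTML resembles a 'JavaScript required' page."""
    hits = sum(1 for pattern in JS_WALL_PATTERNS if pattern.search(html))
    return hits >= min_hits


# Two made-up responses: one block page, one page with result markup.
blocked = (
    "<html><body><noscript>Please enable JavaScript to continue."
    "</noscript></body></html>"
)
normal = (
    "<html><body><div id='results'>"
    "<a href='https://example.com'>Example</a></div></body></html>"
)
```

A check like this would let a pipeline flag such responses explicitly instead of silently parsing an empty page.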

Google claims the change is about better protecting users and preventing abuse, but the impact goes much deeper. While the change might affect only a small fraction of search traffic, a significant slice of that fraction comes from automated scraping scripts and SEO tools. In other words, millions of searches now require headless browsers or advanced rendering to get the data you need.

Semrush data reveals significant challenges for SEO tracking tools in providing accurate results on rankings, keyword popularity, and other crucial metrics. SERP volatility, which measures the frequency and magnitude of changes in search engine results pages, has reached unprecedented levels in the past month.

Volatility in search refers to how often and how drastically search results change for a given query. Normal volatility typically indicates minor fluctuations in rankings, while high volatility suggests major shifts in search results, often due to algorithm updates or significant changes in user behavior.
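As a rough illustration of the difference between normal and high volatility, the sketch below scores the change between two snapshots of the same query as the mean absolute rank shift. This is an assumed toy metric, not the formula Semrush or any other tool uses; URLs that drop out of the results are treated as moving to one position past the end of the list.

```python
from typing import List


def rank_volatility(before: List[str], after: List[str]) -> float:
    """Mean absolute position change for every URL in `before`.

    Positions are 1-based. A URL missing from `after` is counted as if it
    had moved to one position past the longer of the two lists.
    """
    if not before:
        return 0.0
    dropped_position = max(len(before), len(after)) + 1
    after_positions = {url: pos for pos, url in enumerate(after, start=1)}
    total_shift = 0
    for pos, url in enumerate(before, start=1):
        total_shift += abs(after_positions.get(url, dropped_position) - pos)
    return total_shift / len(before)


snapshot = ["a", "b", "c", "d"]
minor_change = ["a", "c", "b", "d"]  # two neighbours swap places
major_change = ["d", "x", "a", "y"]  # two URLs vanish, the rest reshuffle
```

Here `rank_volatility(snapshot, minor_change)` yields 0.5 and `rank_volatility(snapshot, major_change)` yields 2.5, which matches the intuition that a reshuffle with dropped results is far more volatile than a swap of neighbours.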

From chaos to innovative solutions

When the update hit, many scraping tools built on static HTML retrieval could no longer return results to users. Data lags, outages, and skyrocketing error rates became the norm. But as any savvy team working on web data projects knows, challenges are opportunities in disguise.

Platforms like SERPrecon experienced immediate outages, failing to track keyword rankings or analyze SERPs accurately due to the lack of JavaScript rendering support. The platform could not serve real-time data to its users until it had integrated JavaScript rendering capabilities, which it managed within a few days.

Surge of headless browsers

Scrapers that once relied solely on basic HTTP clients have had to evolve. Switching to headless browsers, driven by automation frameworks like Playwright or Selenium, isn’t just a nice-to-have – it’s now essential. These tools mimic real-user behavior by fully executing JavaScript, ensuring that every dynamic element is captured.
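For readers who have not made the switch yet, this is roughly what fetching a fully rendered page looks like with Playwright's synchronous Python API. It is a minimal sketch, assuming Playwright and a Chromium build are installed; the helper names `build_search_url` and `fetch_rendered_html`, the default base URL, and the timeout value are choices made for this example, not details from the article.

```python
from urllib.parse import urlencode


def build_search_url(query: str, base: str = "https://www.google.com/search") -> str:
    """Build a search URL with the query safely encoded."""
    return f"{base}?{urlencode({'q': query})}"


def fetch_rendered_html(url: str, timeout_ms: int = 30_000) -> str:
    """Load `url` in headless Chromium and return the DOM after scripts run."""
    # Imported lazily so build_search_url works without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            # "networkidle" waits until network activity settles, which is a
            # simple (if slow) signal that dynamic content has loaded.
            page.goto(url, wait_until="networkidle", timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()
```

Calling `fetch_rendered_html(build_search_url("js wall"))` returns the HTML after JavaScript has executed, at the price of starting a full browser for the request.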

However, there’s a catch: headless browsers are resource-intensive. They require more CPU and memory, leading to higher operational costs.

"My experience is that headless browsers use about 100x more RAM and at least 10x more bandwidth and processing power. It’s a significant increase in resource usage." – a user on the Hacker News forum.
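To see what multipliers of that size mean in practice, the arithmetic can be worked through in a few lines. The 100x and 10x factors come from the quote above; the baseline figures (5 MB of RAM and 100 KB of bandwidth per plain HTTP request) and the 16 GB machine are assumptions picked purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Footprint:
    ram_mb: float        # memory held per concurrent worker
    bandwidth_kb: float  # data transferred per request


def headless_footprint(
    http: Footprint,
    ram_factor: float = 100.0,       # "about 100x more RAM" (from the quote)
    bandwidth_factor: float = 10.0,  # "at least 10x more bandwidth" (from the quote)
) -> Footprint:
    """Scale a plain-HTTP footprint by the quoted multipliers."""
    return Footprint(http.ram_mb * ram_factor, http.bandwidth_kb * bandwidth_factor)


def max_concurrent_workers(total_ram_mb: float, per_worker_ram_mb: float) -> int:
    """How many workers fit into the available memory."""
    return int(total_ram_mb // per_worker_ram_mb)


# Assumed baseline for a lightweight HTTP client (illustrative numbers).
baseline = Footprint(ram_mb=5.0, bandwidth_kb=100.0)
headless = headless_footprint(baseline)
```

Under these assumptions, a 16,000 MB machine that could hold 3,200 lightweight workers fits only 32 headless ones, which is where the higher operational costs come from.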

Advanced scraping solutions

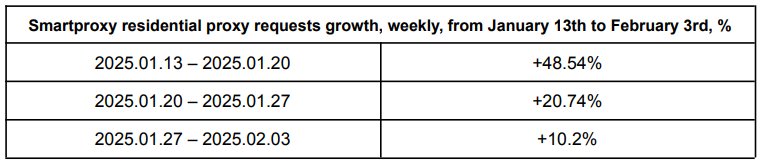

This industry-wide shakeup has also accelerated the adoption of robust proxy and web data collection solutions. High-quality residential and ISP proxies have become critical components in any scalable scraping operation. By rotating millions of IPs across different locations, scraping tools can avoid detection and maintain a steady data flow, even when every page insists on being rendered.
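The rotation idea itself is simple, and a round-robin version can be sketched as follows. The `ProxyRotator` class and the `*.example` endpoints are hypothetical placeholders; a production pool would typically also track failures and geography, which this sketch leaves out.

```python
import itertools
from typing import Dict, Iterator, List


class ProxyRotator:
    """Hand out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxies: List[str]) -> None:
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle: Iterator[str] = itertools.cycle(proxies)

    def next_proxy(self) -> Dict[str, str]:
        """Return the next proxy as a scheme-to-URL mapping.

        The shape matches what the `requests` library accepts for its
        `proxies` argument.
        """
        proxy = next(self._cycle)
        return {"http": proxy, "https": proxy}


# Placeholder endpoints for illustration only.
rotator = ProxyRotator([
    "http://proxy-a.example:8000",
    "http://proxy-b.example:8000",
])
```

Each outgoing request would call `rotator.next_proxy()` so that consecutive requests leave through different addresses.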

For teams looking for a ready-made scraping infrastructure, scraping APIs are stepping up. These solutions integrate headless browser technology and advanced proxy rotation out of the box, offering a reliable, time-saving, and scalable alternative to custom-built scrapers.

The cost and complexity trade-off

For many, switching to JavaScript rendering has meant re-engineering the entire scraping stack. Here are a few key insights from the past month:

- Higher costs yet more accurate data. The computational overhead of running headless browsers means higher hosting and maintenance expenses. However, the trade-off is worth it for users who need accurate, real-time data.

- Infrastructure overhaul. Legacy systems that relied on lightweight HTTP clients have either been upgraded or phased out. This round's winners are those who pivoted quickly, adopting dynamic rendering and investing in scalable infrastructure.

- New competitive dynamics. While some SEO tools and scraping services have struggled with outages and data lags, a few players have managed to maintain uptime by anticipating the change. Their secret? A blend of headless browser tech and resilient proxy infrastructure.

What does the future hold?

Google’s decision to require JavaScript isn’t a temporary hiccup – it’s a sign of things to come. Here are our predictions for the next phase of the scraping revolution:

- Rise of unified APIs. Expect more services to offer fully integrated, headless browser–based scraping solutions. These platforms will remove the complexity of data collection and let users focus on what matters – real-time, accurate data.

- Growing proxy adoption. As more individuals and businesses run into automation limits, high-quality proxies will remain at the heart of any scalable scraping solution.

- Advanced scraping techniques. We’ll see more sophisticated methods for rendering, throttling, and mimicking human behavior, helping automated tools keep pace with Google’s ever-evolving anti-bot defenses.
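Throttling, one of the techniques mentioned in the predictions above, can be as simple as spacing requests out with randomized pauses instead of firing them at a fixed rate. The sketch below is one assumed way to do it; the function names and the default of 2 seconds with 50% jitter are illustrative choices, not values taken from the article.

```python
import random
import time
from typing import Callable, Iterable, Iterator, Optional, TypeVar

T = TypeVar("T")


def jittered_delay(
    base_seconds: float,
    jitter: float = 0.5,
    rng: Optional[random.Random] = None,
) -> float:
    """Pick a delay uniformly from base * (1 - jitter) to base * (1 + jitter)."""
    if not 0.0 <= jitter <= 1.0:
        raise ValueError("jitter must be between 0 and 1")
    rng = rng or random.Random()
    return rng.uniform(base_seconds * (1 - jitter), base_seconds * (1 + jitter))


def throttled(
    items: Iterable[T],
    base_seconds: float = 2.0,
    jitter: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> Iterator[T]:
    """Yield items one by one, pausing a randomized interval between them."""
    for index, item in enumerate(items):
        if index > 0:  # no pause before the very first item
            sleep(jittered_delay(base_seconds, jitter, rng))
        yield item
```

Wrapping a list of queries as `for query in throttled(queries): ...` spaces the work out, and the injectable `sleep` argument makes the pacing easy to check without actually waiting.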

"Google isn’t really stopping bots; it’s just forcing the switch to a new, JavaScript-driven experience that – whether you like it or not – supports their AI-powered enhancements." – a user on the Hacker News forum.

Bottom line

A month after the rollout, it’s clear that the Google JavaScript rendering requirement has reshaped the web scraping landscape. While the immediate fallout included outages and data lags, forward-thinking providers have adapted – and many are now better equipped than ever before.

If you’re still using outdated scraping methods, it might be time to consider a switch. To keep your data flowing, embrace headless browsers, invest in quality proxies, and consider robust scraping solutions.

We've listed the best free proxies.

This article was produced as part of TechRadarPro's Expert Insights channel where we feature the best and brightest minds in the technology industry today. The views expressed here are those of the author and are not necessarily those of TechRadarPro or Future plc. If you are interested in contributing find out more here: https://www.techradar.com/news/submit-your-story-to-techradar-pro
