Tech startup proposes a novel way to tackle massive LLMs using the fastest memory available to mankind

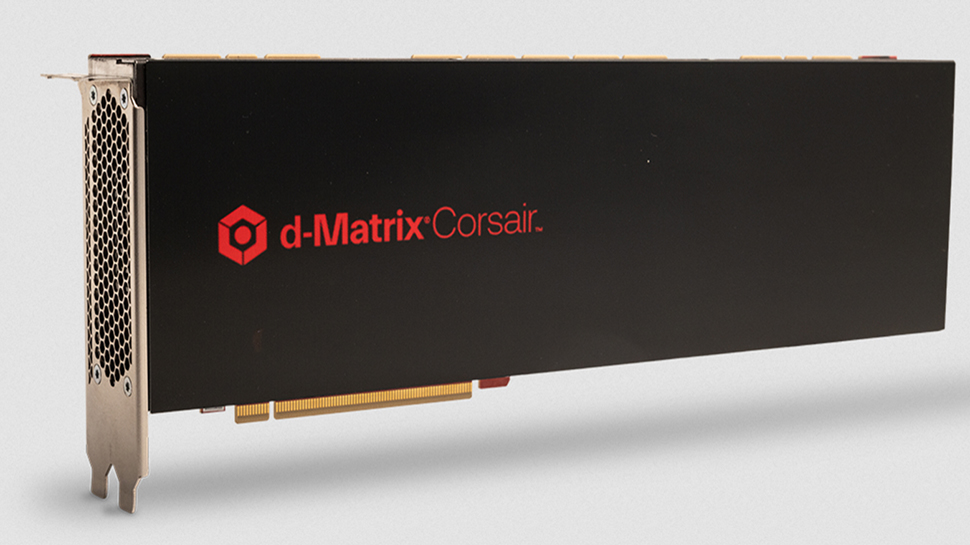

Microsoft-backed d-Matrix's Corsair PCIe card has 2GB of SRAM performance memory

- GPU-like PCIe card offers 9.6 PFLOPs of FP4 compute power and 2GB of SRAM

- SRAM is usually used in small amounts as cache in processors (L1 to L3)

- It also uses LPDDR5 rather than far more expensive HBM memory

Silicon Valley startup d-Matrix, which is backed by Microsoft, has developed a chiplet-based solution designed for fast, small-batch inference of LLMs in enterprise environments. Its architecture takes an all-digital compute-in-memory approach, using modified SRAM cells for speed and energy efficiency.

The Corsair, d-Matrix’s current product, is described as the “first-of-its-kind AI compute platform” and features two d-Matrix ASICs on a full-height, full-length PCIe card, with four chiplets per ASIC. It achieves a total of 9.6 PFLOPs FP4 compute power with 2GB of SRAM-based performance memory. Unlike traditional designs that rely on expensive HBM, Corsair uses LPDDR5 capacity memory, with up to 256GB per card for handling larger models or batch inference workloads.
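To put those capacities in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes decimal gigabytes, 4 bits per weight (FP4), weights only (no activations, KV cache or scaling metadata), and compute split evenly across the eight chiplets. None of these assumptions come from d-Matrix, and the function name is purely illustrative.

```python
# Back-of-the-envelope capacity arithmetic for the figures quoted above.
# Assumptions (not from d-Matrix): decimal gigabytes, weights only,
# and compute divided evenly across chiplets.
GB = 10**9


def max_params(capacity_bytes: int, bits_per_weight: int = 4) -> int:
    """Upper bound on the number of weights that fit in a memory pool."""
    return capacity_bytes * 8 // bits_per_weight


sram_params = max_params(2 * GB)      # 2GB of SRAM performance memory
lpddr_params = max_params(256 * GB)   # up to 256GB of LPDDR5 capacity memory

chiplets = 2 * 4                      # two ASICs, four chiplets each
pflops_per_chiplet = 9.6 / chiplets   # assumes an even split

print(f"SRAM holds at most {sram_params / 1e9:.0f}B FP4 weights")
print(f"LPDDR5 holds at most {lpddr_params / 1e9:.0f}B FP4 weights")
print(f"About {pflops_per_chiplet:.1f} PFLOPs of FP4 per chiplet")
```

On these assumptions, the SRAM alone could hold a model of up to roughly 4 billion FP4 parameters, while anything larger would spill into the LPDDR5 pool.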

d-Matrix says Corsair delivers 10x better interactive performance, 3x better energy efficiency and 3x better cost-performance than GPU alternatives, such as the hugely popular Nvidia H100.

A leap of faith

Sree Ganesan, head of product at d-Matrix, told EE Times, "Today’s solutions mostly hit the memory wall with existing architectures. They have to add a lot more compute and burn a lot more power, which is an unsustainable path. Yes, we can do better with more compute FLOPS and bigger memory, but d-Matrix has focused on memory bandwidth and innovating on the memory-compute barrier."

d-Matrix’s approach is designed to remove this bottleneck by performing computation directly within memory.

"We’ve built a digital in-memory compute core where multiply-accumulate happens in memory and you can take advantage of very high bandwidth - we’re talking about 150 terabytes per second," Ganesan explained. "This, in combination with the series of other innovations allows us to solve the memory wall challenge."
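A simple way to see why bandwidth matters so much for small-batch inference: when generating one token at a time, every weight typically has to be read once per token, so memory bandwidth sets a ceiling on tokens per second. The Python sketch below applies that rule of thumb to the quoted 150TB/s figure. The 2GB model size (one that would just fill the SRAM) and the 3TB/s comparison point are illustrative assumptions rather than vendor numbers, and the calculation ignores compute time, KV-cache traffic and whether the 150TB/s is a per-card aggregate.

```python
# Bandwidth-bound ceiling on single-stream decoding speed.
# Rule of thumb: each generated token reads every weight once, so
# tokens/s <= memory bandwidth / bytes of weights.
TB = 10**12
GB = 10**9


def max_tokens_per_second(weight_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Upper bound on tokens/s when decoding is limited by weight reads."""
    return bandwidth_bytes_per_s / weight_bytes


model_bytes = 2 * GB  # assumption: a model that just fills 2GB of SRAM

in_memory = max_tokens_per_second(model_bytes, 150 * TB)  # figure quoted by Ganesan
external = max_tokens_per_second(model_bytes, 3 * TB)     # hypothetical external-memory design

print(f"150 TB/s ceiling: {in_memory:,.0f} tokens/s")
print(f"3 TB/s ceiling:   {external:,.0f} tokens/s")
print(f"Ratio: {in_memory / external:.0f}x")
```

These are theoretical ceilings only. Real throughput depends on the model, batch size and software stack, which this sketch does not attempt to capture.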

CEO Sid Sheth told EE Times the company was founded in 2019 after feedback from hyperscalers suggested inference was the future. “It was a leap of faith, because inference alone as an opportunity was not perceived as being too big back in 2019,” he said. “Of course, that all changed post 2022 and ChatGPT. We also bet on transformer [networks] pretty early on in the company.”

Corsair is entering mass production in Q2 2025, and d-Matrix is already planning its next-generation ASIC, Raptor, which will integrate 3D-stacked DRAM to support reasoning workloads and larger memory capacities.

Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he wrote for most of the UK’s PC magazines, and launched, edited and published a number of them too.
