What is AI quantization?

Quantization makes huge models smaller and more usable for general purposes

Quantization is a method of reducing the size of AI models so they can be run on more modest computers.

The challenge is to do this while retaining as much of the model's quality as possible, in other words without introducing extra response errors or hallucinations.

By shrinking the size with this technique, the models can be deployed on many more computing devices, such as home PCs, smartphones and even tiny appliances.

Major generative models like OpenAI's GPT, Google's Gemini, and Anthropic’s Claude range are massive data structures which operate using billions or even trillions of parameters.

They need so much power in order to cope with a wide range of general-purpose applications.

A big clue is the fact that the G in AGI refers to ‘general’ intelligence, because these foundation models have to cope with anything from school homework to advanced scientific calculus.

But there’s a cost to all this power, as you might expect.

These massive models need huge compute resources in order to run - it’s no exaggeration to say they can require data centers the size of a small village, and need energy systems to match.

Quantization is one of the key ways we can reduce those demands, and tailor models for more widespread needs.

How does it work?

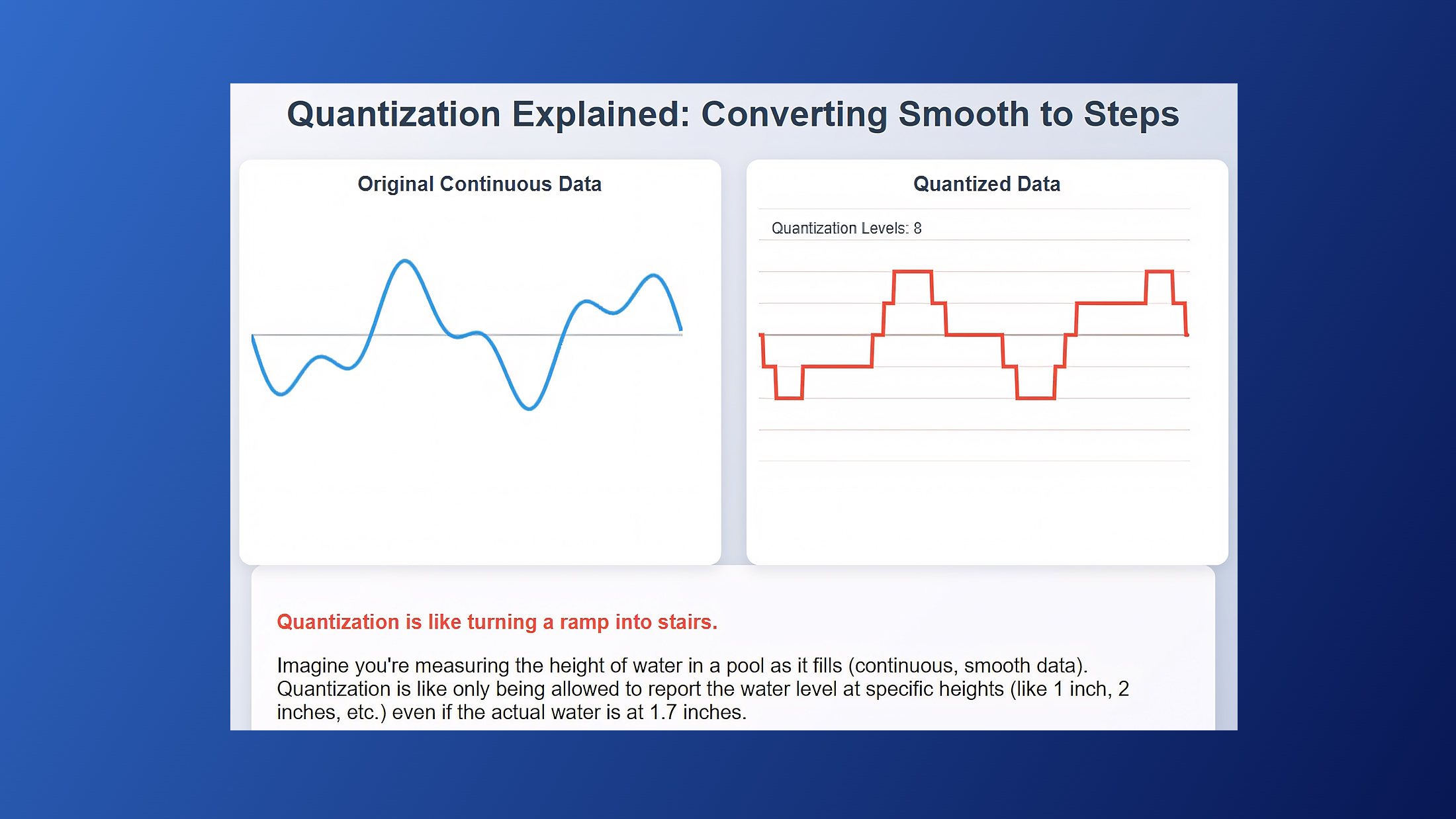

Quantization basically reduces the precision of the numbers used in a neural network.

While this sounds like we’re deliberately making it inferior, it's actually an excellent compromise.
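To make the idea concrete, here is a minimal sketch of one simple approach, often called symmetric 8-bit quantization. It is only an illustration: the weight values are made up, the function names `quantize_int8` and `dequantize` are hypothetical, and real tools use more elaborate schemes.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    # The scale is the size of one integer step; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Turn the stored integers back into approximate floats."""
    return [q * scale for q in quantized]


# Made-up example weights, not taken from any real model.
weights = [0.42, -1.27, 0.003, 0.9, -0.55]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print("stored integers:", q)
print("restored floats:", restored)
```

Each weight is now stored as a small integer plus one shared scale, and the restored values land close to the originals rather than exactly on them. That small gap is the precision being traded away.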

Base models have typically used 32-bit floating-point numbers (FP32) to represent their parameters, which are the weights and biases of the network.

By converting these numbers to less precise formats through quantization, for example 16-bit, 8-bit or even 4-bit, we can save a huge amount of disk space and also cut the memory and compute the model needs to run.
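The savings are easy to estimate with some back-of-the-envelope arithmetic. The sketch below assumes a hypothetical model with 7 billion parameters and counts only the raw parameter storage. Real quantized files also carry some extra data, such as scale factors, so treat the figures as rough.

```python
def model_size_gb(num_params, bits_per_param):
    """Approximate storage for the parameters alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Hypothetical model size chosen purely for illustration.
params = 7_000_000_000

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: about {model_size_gb(params, bits):.1f} GB")
```

Under these assumptions, halving the number of bits per parameter halves the storage, so a 4-bit version needs roughly one eighth of the space of the 32-bit original.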

Remember photo compression?

It’s a lot like compressing a high-resolution photo - the original RAW image might offer stunning detail, but the file size will likely be far too large to share or edit easily.

Using compression tools, we can dramatically reduce the file size, and so make the image more practical to use. Ideally we use a compression format like JPEG, which discards some detail and color information but keeps the loss small enough that most people won't notice a difference.

Quantized models similarly sacrifice a small amount of accuracy in exchange for dramatic improvements in utility, size and speed.
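The trade-off can be illustrated with a toy experiment. The sketch below generates random stand-in weights (not real model weights), rounds them to different bit widths using a simple shared-scale scheme, and measures how far the restored values drift from the originals. The helper name `roundtrip_error` is hypothetical, and weight error is only a rough proxy for how a real model's answers would change.

```python
import random


def roundtrip_error(weights, bits):
    """Average absolute error after quantizing to `bits` and restoring."""
    levels = 2 ** (bits - 1) - 1  # e.g. 127 for 8-bit, 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / levels  # size of one integer step
    restored = [
        max(-levels, min(levels, round(w / scale))) * scale for w in weights
    ]
    return sum(abs(w - r) for w, r in zip(weights, restored)) / len(weights)


# Random stand-in weights; the seed makes the run repeatable.
random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(10_000)]

errors = {bits: roundtrip_error(weights, bits) for bits in (16, 8, 4)}
for bits, err in errors.items():
    print(f"{bits:>2}-bit average error: {err:.6f}")
```

Fewer bits mean coarser steps and larger average error, which is why more aggressive formats such as 4-bit generally need more careful techniques to keep quality acceptable.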

It’s safe to say that without these substantial improvements, the world of AI models would be significantly more limited.

Big centralized AI models in huge data centers are great for flagship applications, but AI becomes so much more valuable when distributed to many systems across the globe.

And that’s before we talk about using AI on your smartphone, TV, or older, less powerful devices, all without having to connect to big cloud computers. This has enormous implications for accessibility in regions of the world with limited connectivity or computing resources.

Fun fact: While many people think of quantization as a new technology driven by the AI boom, its roots actually go back to signal processing and information theory from decades ago.

The digital music and photos we've enjoyed for decades rely on similar principles of reducing precision while retaining audio and visual quality.

Quantization techniques are becoming increasingly sophisticated as time passes, allowing even more dramatic compression with less impact on model performance.

One of the major beneficiaries of this improvement is the open source community.

Quantized versions of models like Llama, Mistral and DeepSeek are increasingly powering exciting new applications on personal computers, which would otherwise be impossibly expensive using giant cloud AI services.

Nigel Powell is an author, columnist, and consultant with over 30 years of experience in the tech industry. He produced the weekly Don't Panic technology column in the Sunday Times newspaper for 16 years and is the author of the Sunday Times book of Computer Answers, published by Harper Collins. He has been a technology pundit on Sky Television's Global Village program and a regular contributor to BBC Radio Five's Men's Hour. He's an expert in all things software, security, privacy, mobile, AI, and tech innovation.
