Why are AI context windows important?

AI uses context to track and understand chat conversations

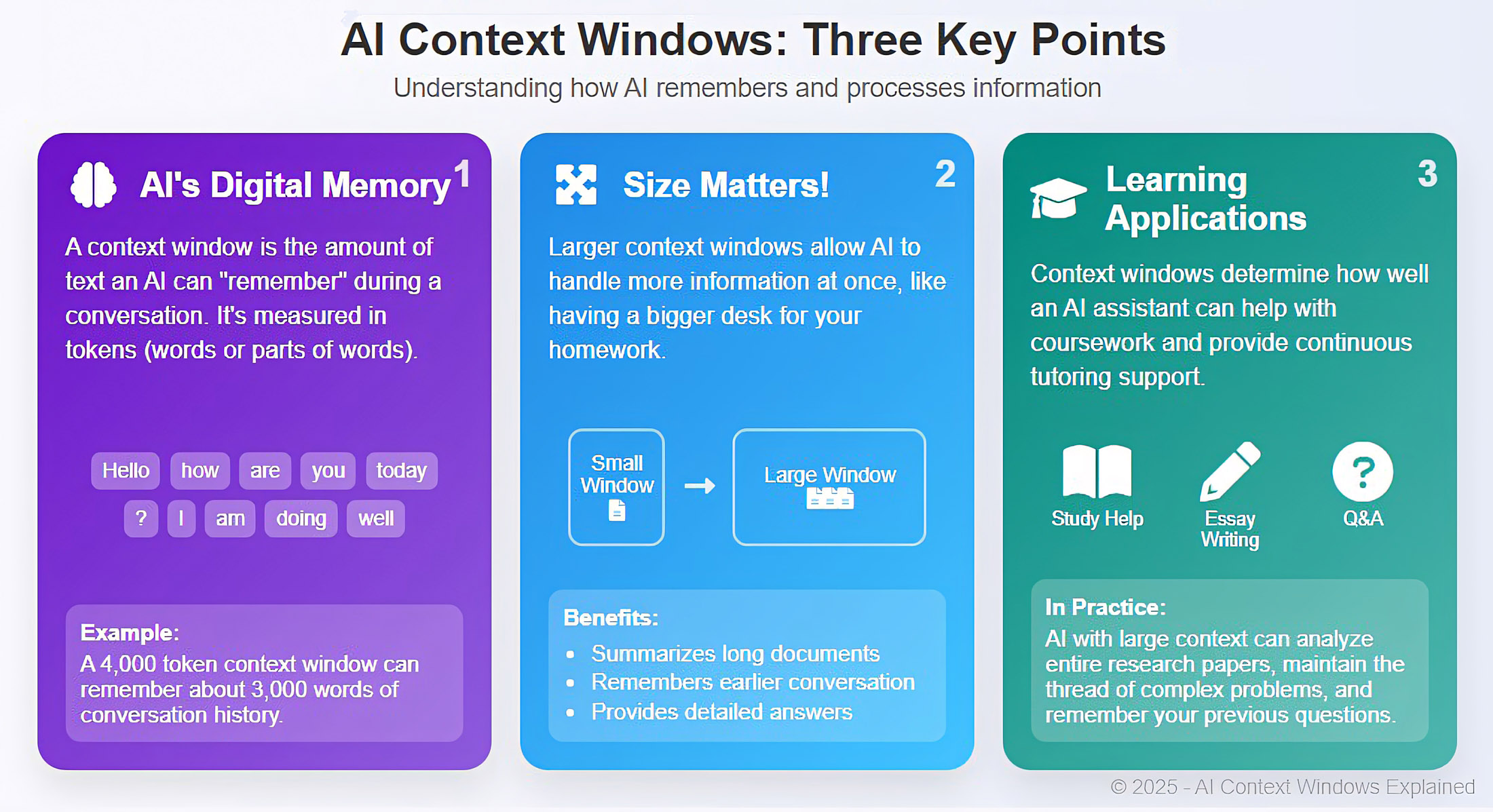

Context windows are one of the most underappreciated parts of artificial intelligence systems, particularly in chat interfaces.

These ‘windows’ temporarily store chat texts during our interaction with AI models.

By storing chat messages in memory, the model can maintain a consistent understanding of the overall conversation.

So, for example, an initial user request might be to find information on the population of Berlin, at which point the model will return the response.

However, any follow-up question, such as one about the city’s best cafes, needs to be connected to the previous chat request, otherwise the whole conversation grinds to a halt.

By keeping track of the conversation's history, a context window lets the AI manage lengthy conversations coherently, so they flow well and stay aligned with the overall discussion.
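To make the Berlin example concrete, here is a minimal sketch of how a chat application might resend the conversation history with each new request. The `role`/`content` message layout and the helper names `add_turn` and `build_prompt` are assumptions chosen for illustration, not the interface of any particular model or service.

```python
# Hypothetical message format and helpers, for illustration only.
history = []

def add_turn(history, role, content):
    """Append one chat message to the running conversation history."""
    history.append({"role": role, "content": content})

def build_prompt(history):
    """Join every stored message into the text the model would actually see."""
    return "\n".join(f"{m['role']}: {m['content']}" for m in history)

add_turn(history, "user", "What is the population of Berlin?")
add_turn(history, "assistant", "(the model's answer about Berlin's population)")
add_turn(history, "user", "What are the city's best cafes?")

# The follow-up never names Berlin, but the earlier turns are still in the prompt.
prompt = build_prompt(history)
print(prompt)
```

Because the earlier turns travel with the follow-up, the model has what it needs to work out that "the city" means Berlin.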

Context windows are measured by the total number of tokens the model can process at any one time.


Typical windows range from 8,192 tokens up to 2 million and above, in the case of Google’s Gemini AI models. It’s hard to be specific, but in general a token represents about four characters of English text.

So, for instance, 100 tokens would be about 75 words. A common context window for today’s mainstream cloud-based models is around 128,000 tokens, or roughly 96,000 words.
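The arithmetic behind these rules of thumb can be sketched in a few lines. This is only an approximation using the ratios quoted here (about four characters per token, about 75 words per 100 tokens). Real tokenizers differ between models and languages, and the function names are hypothetical.

```python
# Rule-of-thumb ratios from the article; actual tokenizers will give different counts.
CHARS_PER_TOKEN = 4
WORDS_PER_TOKEN = 0.75

def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of a piece of English text."""
    if not text:
        return 0
    return max(1, round(len(text) / CHARS_PER_TOKEN))

def tokens_to_words(tokens: int) -> int:
    """Convert a token budget into an approximate English word count."""
    return round(tokens * WORDS_PER_TOKEN)

print(tokens_to_words(100))      # 75
print(tokens_to_words(128_000))  # 96000
```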

The ability to keep track of long conversations is crucial to making chatbots or virtual assistants valuable in real use cases.

That’s because the size of the context window significantly influences how well the AI can handle more complex interactions.

It’s the difference between having a normal conversation, and struggling to be understood by someone who keeps forgetting what you’re discussing. Clearly the latter would be extremely frustrating.

A larger context window also lets the model access and remember a much wider range of information during the chat, which can also aid in the model’s ability to return intelligent responses.

This ability to maintain context not only over time, but also across a breadth of data, is an increasingly important part of the utility of current models.

This is especially true with what are known as ‘thinking’ models, which take more time to evaluate all the options before giving a response.

Thinking has essentially replaced the old prompt practice of asking the model to specifically think ‘step by step’, but the end result is still the same.

Any aspect of AI which employs enhanced reflection or extended dialogue inevitably requires a longer context window to cope with the additional processing demands.

Advanced models typically employ a rolling context window which adds new chat messages to the memory while dropping older messages out of the window at the other end.

This ensures that the AI can always refer to the most recent parts of a conversation when dealing with new user requests, although the oldest messages eventually fall out of view.
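The rolling behaviour can be sketched as a simple queue with a token budget. This is an illustrative simplification, not how any specific model is implemented: the `RollingContext` class, the tiny 20-token budget, and the four-characters-per-token estimate are all assumptions chosen to keep the example short.

```python
from collections import deque

def estimate_tokens(text: str) -> int:
    """Crude heuristic: roughly four characters per token."""
    return max(1, len(text) // 4)

class RollingContext:
    """Keeps the newest messages that fit within a fixed token budget."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.messages = deque()
        self.used = 0

    def add(self, message: str) -> None:
        # New messages enter at one end of the window...
        self.messages.append(message)
        self.used += estimate_tokens(message)
        # ...and the oldest ones drop out of the other end once the budget is exceeded.
        while self.used > self.max_tokens and len(self.messages) > 1:
            oldest = self.messages.popleft()
            self.used -= estimate_tokens(oldest)

ctx = RollingContext(max_tokens=20)  # deliberately tiny budget for the demo
for msg in [
    "What is the population of Berlin?",
    "Here is the population figure you asked for.",
    "Which cafes there are best?",
]:
    ctx.add(msg)

print(list(ctx.messages))
```

With such a small budget, the opening question about Berlin has already been evicted by the time the third message arrives, which is the kind of forgetting a larger window helps avoid.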

Where a context window is too small, or the user request is so large or complex that it overflows the window, the model may return a response that is nonsensical or contains wild hallucinations.

The size of a context window is also important for web search and recommendation requests.

The general rule is the more complex the chat request, the larger the context window you need in the chosen model.

The downside of a larger context window is increased processing requirements, so it’s usual to only find large context windows in cloud-based AI models with their huge compute resources.

Small local desktop or open source models are forced to employ smaller context windows because of the low-power computers they run on.

Despite these limitations, the continuing optimization and improved capabilities of local AI models mean that they will inevitably become more useful for everyday tasks over time.

Nigel Powell is an author, columnist, and consultant with over 30 years of experience in the tech industry. He produced the weekly Don't Panic technology column in the Sunday Times newspaper for 16 years and is the author of the Sunday Times book of Computer Answers, published by Harper Collins. He has been a technology pundit on Sky Television's Global Village program and a regular contributor to BBC Radio Five's Men's Hour. He's an expert in all things software, security, privacy, mobile, AI, and tech innovation.
