xAI’s Colossus supercomputer cluster uses 100,000 Nvidia Hopper GPUs, and Nvidia’s Spectrum-X Ethernet networking platform made it possible

The Colossus site was built in just 122 days

- Nvidia and xAI collaborate on Colossus development

- xAI has markedly cut down 'flow collisions' during AI model training

- Spectrum-X has been crucial in training the Grok AI model family

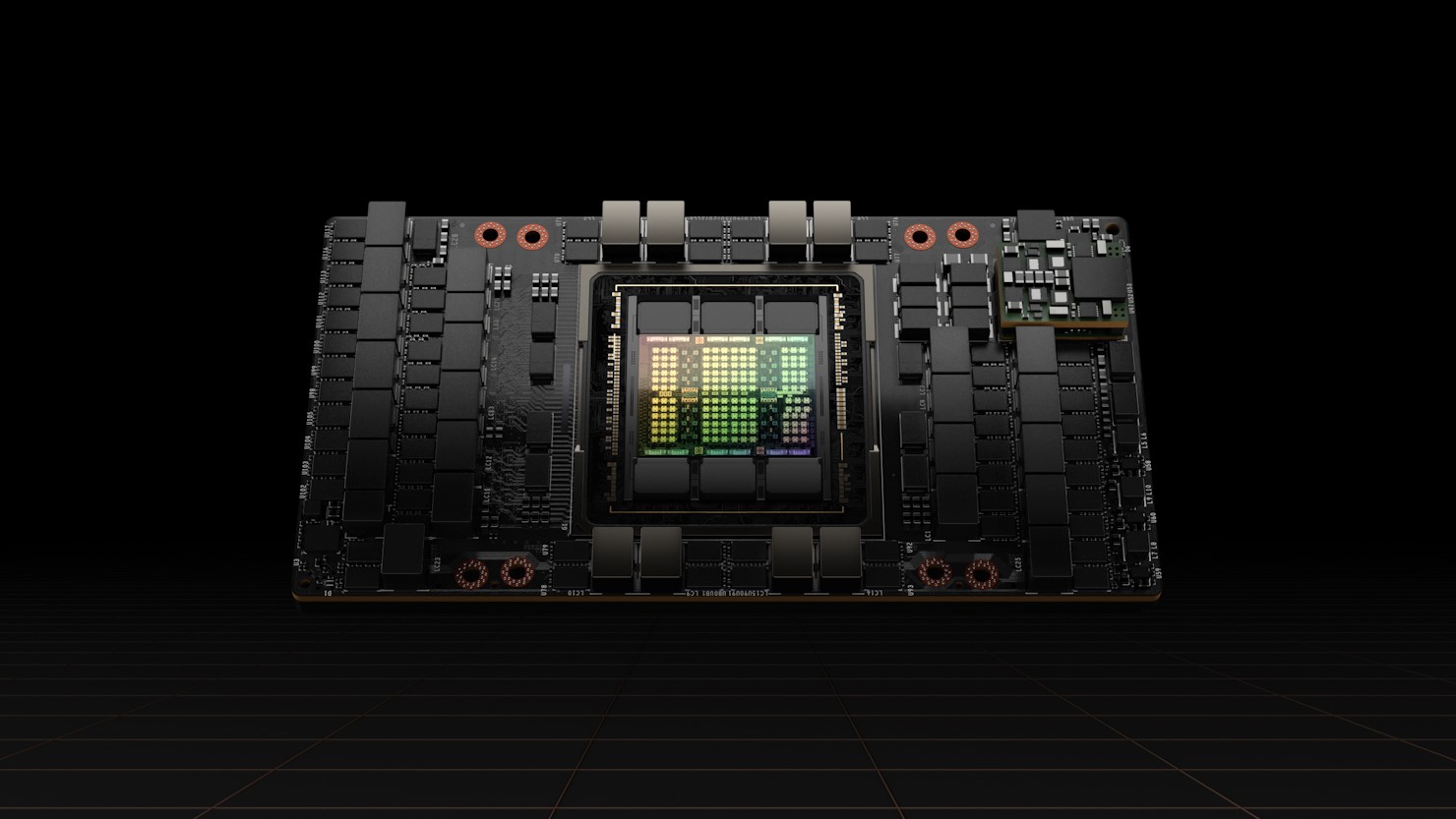

Nvidia has shed light on how xAI’s ‘Colossus’ supercomputer cluster manages 100,000 Hopper GPUs: it relies on the chipmaker's Spectrum-X Ethernet networking platform.

Spectrum-X, the company revealed, is designed to deliver high performance to multi-tenant, hyperscale AI factories using its Remote Direct Memory Access (RDMA) network.

The platform has been deployed at Colossus, the world’s largest AI supercomputer, since its inception. Elon Musk-owned xAI has been using the cluster to train its Grok series of large language models (LLMs), which power the chatbots offered to X users.

The facility was built in collaboration with Nvidia in just 122 days, and xAI is currently in the process of expanding it, with plans to deploy a total of 200,000 Nvidia Hopper GPUs.

Training Grok takes serious firepower

The Grok AI models are extremely large, with Grok-1 weighing in at 314 billion parameters and Grok-2 outperforming Claude 3.5 Sonnet and GPT-4 Turbo at the time of its launch in August.

Naturally, training these models requires significant network performance. Using Nvidia’s Spectrum-X platform, xAI recorded zero application latency degradation or packet loss as a result of ‘flow collisions’, or bottlenecks within AI networking paths.

xAI revealed it has been able to maintain 95% data throughput thanks to Spectrum-X’s congestion control capabilities. The company added that this level of performance cannot be delivered at this scale via standard Ethernet.

At this scale, traditional Ethernet typically creates thousands of flow collisions while delivering only 60% data throughput, according to Nvidia.
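To put the two throughput figures side by side, here is a minimal Python sketch of the arithmetic. The 95% and 60% values are the ones cited above; the 400Gb/s line rate and the function name `effective_throughput` are purely illustrative assumptions, not figures or tooling from xAI or Nvidia.

```python
def effective_throughput(line_rate_gbps: float, utilization: float) -> float:
    """Return usable bandwidth in Gb/s for a link at a given utilization (0 to 1)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return line_rate_gbps * utilization


# Hypothetical per-link line rate, chosen only for illustration.
LINE_RATE_GBPS = 400.0

# 95% is the throughput figure cited for Spectrum-X.
spectrum_x = effective_throughput(LINE_RATE_GBPS, 0.95)
# 60% is the throughput figure cited for standard Ethernet.
standard = effective_throughput(LINE_RATE_GBPS, 0.60)

# The ratio does not depend on the line rate chosen above.
relative_gain = spectrum_x / standard

print(f"Spectrum-X: {spectrum_x:.0f} Gb/s, standard Ethernet: {standard:.0f} Gb/s")
print(f"Relative gain: {relative_gain:.2f}x")
```

Whatever line rate is assumed, the ratio between the two cited figures works out to roughly 1.58 times more usable bandwidth on the same link.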

A spokesperson for xAI said the combination of Hopper GPUs and Spectrum-X has allowed the company to “push the boundaries of training AI models” and created a “super-accelerated and optimized AI factory”.

“AI is becoming mission-critical and requires increased performance, security, scalability and cost-efficiency,” said Gilad Shainer, senior vice president of networking at Nvidia.

“The Nvidia Spectrum-X Ethernet networking platform is designed to provide innovators such as xAI with faster processing, analysis and execution of AI workloads, and in turn accelerates the development, deployment and time to market of AI solutions.”

The Spectrum-X platform includes the Spectrum SN5600 Ethernet switch, which supports port speeds of up to 800Gb/s and is based on the Spectrum-4 switch ASIC, according to Nvidia.

xAI opted to combine the Spectrum SN5600 switch with Nvidia BlueField-3 SuperNICs for higher performance.

Ross Kelly is News & Analysis Editor at ITPro, responsible for leading the brand's news output and in-depth reporting on the latest stories from across the business technology landscape.