Nuance Communications is already well known for its tech industry innovations. It’s been at the forefront of speech recognition software and has also made substantial inroads into the automotive industry. In fact, you’ll find its Dragon Drive software in more than a few cars out there on the roads. But the company also works in a stack of other business sectors including healthcare, telecommunications, financial services and even retail.

Now, though, the company is working with Affectiva, an MIT Media Lab spin-off and a leading provider of AI software that detects complex and nuanced human emotions and cognitive states from face and voice. Its patented Emotion AI technology uses machine learning, deep learning, computer vision and speech science to work its magic. So far, Affectiva has built the world’s largest emotion data repository with over 7 million faces analyzed in 87 countries.

Up until now, the technology has been used to test consumer engagement with advertising campaigns, videos and TV programming. Now, though, Affectiva is working with leading OEMs, Tier 1s and technology providers on next-generation multi-modal driver state monitoring and in-cabin mood sensing for the automotive industry. Partnering with Nuance seems like an obvious next step, considering that carmakers share a very similar vision of a smarter, safer future.

As a result, the partnership hopes to combine Nuance’s intuitive Dragon Drive package with Affectiva’s innovations to produce an enhanced automotive assistant. If all goes to plan, the end result will be an assistant that can understand the cognitive and emotional states of both drivers and passengers.

It’s a very cool idea. The technology uses artificial intelligence to measure facial expressions and emotions such as joy, anger and surprise, as well as vocal expressions of anger, engagement and laughter, in real time. It also provides key indicators of drowsiness, such as yawning and blink rates.

Affectiva says that it was demand from the automotive industry that prompted the company to start developing systems that could help improve car safety. A smart system of this kind has plenty of component requirements, from drowsiness detection through to being able to tell whether a driver is distracted. Needless to say, developing a system that works across the full spectrum of driver types involves amassing an awful lot of data.

Distraction and drowsiness

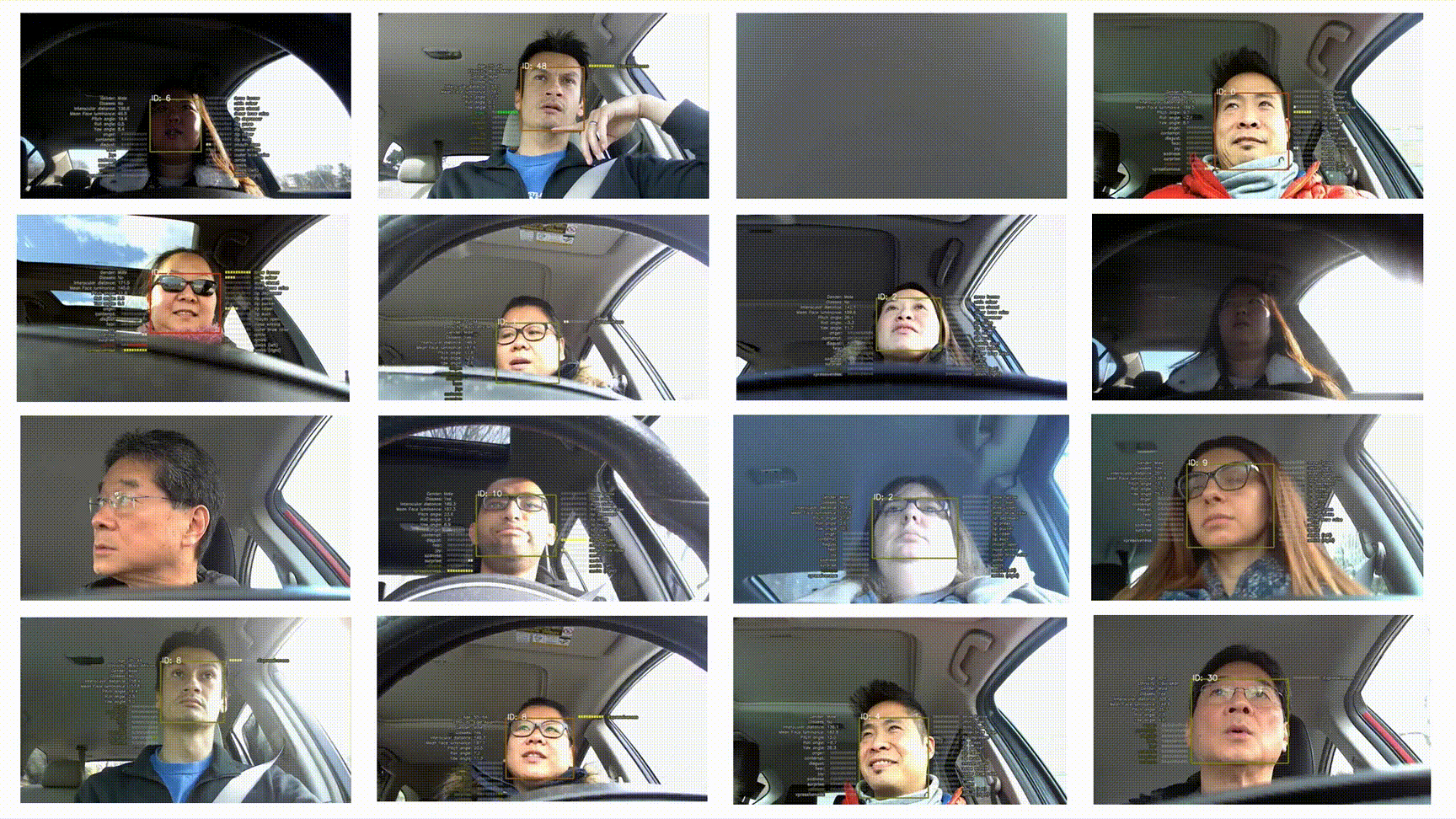

To get the ball rolling, Affectiva initially conducted its own tests with participants from a wide variety of ethnicities and age ranges. Data was collected in two phases. During the first phase, data was collected internally from team members who were asked to drive a car fitted with a camera that captured different scenarios with ‘posed’ behaviours. These included a set of six fairly typical actions, such as looking over the shoulder and checking a phone. The second phase was more free-form, in order to capture more spontaneous behaviour.


However, as you can imagine, there are many, many different variables involved in monitoring driver behaviour. Affectiva points out that interior lighting conditions can change, with window type and the way light enters the vehicle creating myriad variations. Changes in the driver’s pose have also presented a challenge, because drivers aren’t always sitting neatly in their seats.

On top of that, Affectiva also had to account for people wearing hats of all kinds and sunglasses, and work out how to get around the issue of occupants touching their faces while driving. In short, data collection has been a mountain to climb.

Interestingly, the data collection process has also revealed just how different people can be, with Affectiva noting that some individuals were very expressive behind the wheel, while others remained fairly neutral as they made their way from A to B. One issue was commonplace, though: everyone got distracted from time to time. According to data from a US government website, every day in America over 1,000 injuries and nine fatalities are caused by driver distraction. So smarter systems developed with the help of AI and machine learning can’t come soon enough.

Next-generation assistants

Nuance says Dragon Drive is now in more than 200 million cars on the road today, supporting more than 40 languages. The company has also tailored fully branded experiences for the likes of Audi, BMW, Daimler, Fiat, Ford, GM, Hyundai, SAIC and Toyota. Powered by conversational AI, Dragon Drive enables the in-car assistant to interact with passengers through both verbal and non-verbal modalities, including gesture, touch, gaze detection, and voice recognition powered by natural language understanding (NLU). And, by working with Affectiva, it will soon also offer emotion and cognitive state detection.

“As our OEM partners look to build the next generation of automotive assistants for the future of connected and autonomous cars, integration of additional modes of interaction will be essential not just for effectiveness and efficiency, but also safety,” said Stefan Ortmanns, executive vice president and general manager at Nuance Automotive. “Leveraging Affectiva’s technology to recognise and analyse the driver’s emotional state will further humanise the automotive assistant experience, transforming the in-car HMI and forging a stronger connection between the driver and the OEM’s brand.”

“We’re seeing a significant shift in the way that people today want to interact with technology, whether that’s a virtual assistant in their homes, or an assistant in their cars,” said Dr. Rana el Kaliouby, CEO and co-founder of Affectiva.

“OEMs and Tier 1 suppliers can now address that desire by deploying automotive assistants that are highly relatable, intelligent and able to emulate the way that people interact with one another. This presents a significant opportunity for them to differentiate their offerings from the competition in the short-term, and plan for consumer expectations that will continue to shift over time. We’re thrilled to be partnering with Nuance to build the next-generation of HMIs and conversational assistants that will have significant impacts on road safety and the transportation experience in the years to come.”

It sounds like an exciting development, and one that could make a big difference. For example, Nuance says that if an automotive assistant detects from their tone of voice that a driver is happy, the system can mirror that emotional state in its responses and recommendations. What’s more, with semi-autonomous vehicles likely to become increasingly common on our roads, the assistant may be able to take over control of the vehicle if a driver shows signs of physical or mental distraction. There’s still a lot of work to be done, but smarter cars are moving in the right direction.

Rob Clymo has been a tech journalist for more years than he can actually remember, having started out in the wacky world of print magazines before discovering the power of the internet. Since going all-digital, he has spent a few years running the Innovation channel at Microsoft, as well as turning out regular news, reviews, features and other content for the likes of TechRadar, TechRadar Pro, Tom's Guide, Fit&Well, Gizmodo, Shortlist, Automotive Interiors World, Automotive Testing Technology International, Future of Transportation and Electric & Hybrid Vehicle Technology International. In the rare moments he's not working, he's usually out and about on one of the many e-bikes in his collection.