Nvidia just made a huge leap in supercomputing power

More than 50 servers to be powered by new Nvidia A100 GPU

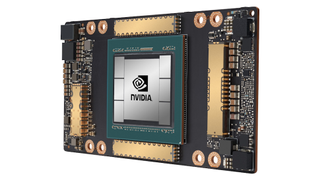

Nvidia has unveiled a host of servers powered by its new A100 GPUs, designed to accelerate developments in the fields of AI, data science and supercomputing.

The servers are built by some of the world’s most prominent manufacturers, including Cisco, Dell Technologies and HPE, and more than 50 models are expected by the end of the year.

First revealed in May, the A100 is the first GPU based on the Nvidia Ampere architecture and can deliver up to 20x the compute performance of its predecessor, representing the company’s most dramatic performance leap to date.

The announcement could prove significant for organizations running compute-intensive workloads, such as machine learning or computational chemistry, whose research projects could be greatly accelerated.

Nvidia A100 servers

The A100 boasts a number of next-generation features, including the ability to partition the GPU into up to seven separate GPU instances that can be allocated to different compute tasks. According to Nvidia, new structural sparsity capabilities can also be used to double a GPU's performance.

Nvidia NVLink technology, meanwhile, can link multiple A100s so that they operate as a single massive GPU, with significant implications for organizations that rely on sheer computing power.

Up to 30 A100-powered systems are expected to become available this summer, with roughly 20 more by the end of 2020.

“Adoption of Nvidia A100 GPUs into leading server manufacturers’ offerings is outpacing anything we’ve previously seen,” said Ian Buck, Vice President and General Manager of Accelerated Computing at Nvidia.

“The sheer breadth of Nvidia A100 servers coming from our partners ensures that customers can choose the very best options to accelerate their datacenters for high utilization and low total cost of ownership,” he added.

The company also announced it has broken the record for the TPCx-BB big data analytics benchmark, using 16 DGX A100 systems (powered by a total of 128 A100 GPUs). Nvidia ran the benchmark in just 14.5 minutes, versus the previous record of 4.7 hours, an improvement of nearly 20x.
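
For readers who want to check the benchmark arithmetic, the short Python sketch below works through it using only the figures quoted above. The variable names are illustrative and are not taken from Nvidia or the TPCx-BB specification.

```python
# Figures quoted in the article for the TPCx-BB benchmark run.
previous_record_hours = 4.7   # previous record run time
new_record_minutes = 14.5     # Nvidia's reported run time
dgx_systems = 16              # DGX A100 systems used
total_gpus = 128              # A100 GPUs across all systems

# Convert the old record to minutes so both times share a unit.
previous_record_minutes = previous_record_hours * 60

# Speedup is the ratio of old run time to new run time.
speedup = previous_record_minutes / new_record_minutes

# GPUs per system implied by the quoted totals.
gpus_per_system = total_gpus // dgx_systems

print(f"Speedup: {speedup:.1f}x")                           # Speedup: 19.4x
print(f"GPUs per DGX A100 system: {gpus_per_system}")       # GPUs per DGX A100 system: 8
```

The ratio comes out at roughly 19.4x, which is consistent with the "nearly 20x" claim, and the quoted totals imply eight A100 GPUs in each DGX A100 system.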

Joel Khalili is the News and Features Editor at TechRadar Pro, covering cybersecurity, data privacy, cloud, AI, blockchain, internet infrastructure, 5G, data storage and computing. He's responsible for curating our news content, as well as commissioning and producing features on the technologies that are transforming the way the world does business.
