Taking compute performance to the next level with cold computing

The most demanding systems require the best cooling

When it comes to computing, hardware manufacturers are always looking for new ways to keep their chips running at lower temperatures. This lets them extract more performance from the same chips while using less energy and producing less heat.

While keeping a laptop or desktop cool poses little challenge, cooling larger systems such as data centres and supercomputers is generally far more difficult. TechRadar Pro spoke with Rambus’ Chief Scientist Craig Hampel to learn more about cold computing and how researchers are using the technique today.

1. What is cold computing?

Generally speaking, cold computing is the idea of lowering the operating temperature of a computing system to increase its computational efficiency, energy efficiency or density. The most significant impact comes from running computing systems at cryogenic temperatures. To give you an idea of what this looks like: conventional processor- and memory-based data centres operate at around room temperature, roughly 295K (22°C), but we’re looking at operating memory systems in liquid nitrogen at 77K (-196°C).
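
Because the discussion moves between kelvin and Celsius, a minimal Python sketch of the conversion may help readers check the figures. It simply applies T(°C) = T(K) - 273.15 to the operating points mentioned in this interview; the function name and labels are illustrative choices, not part of any Rambus tooling.

```python
# Convert absolute temperatures (kelvin) to degrees Celsius: T(C) = T(K) - 273.15.
KELVIN_OFFSET = 273.15


def kelvin_to_celsius(kelvin: float) -> float:
    """Return the Celsius equivalent of an absolute temperature given in kelvin."""
    if kelvin < 0:
        raise ValueError("absolute temperature cannot be negative")
    return kelvin - KELVIN_OFFSET


# Operating points mentioned in this interview (labels are illustrative).
operating_points = {
    "conventional data centre": 295.0,
    "liquid nitrogen (cold DRAM)": 77.0,
    "cryogenic threshold": 93.15,
    "4K stage": 4.0,
}

for label, kelvin in operating_points.items():
    print(f"{label}: {kelvin:g} K = {kelvin_to_celsius(kelvin):.2f} C")
```

Running it shows, for example, that 77K corresponds to -196.15°C, which is why liquid-nitrogen operation is often quoted as roughly -196°C.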

Over the last few decades, we have seen tremendous improvements in the performance and energy efficiency of computing and memory systems. At the same time, with the rise of cloud computing, mobile devices and ever-larger data volumes, demand for large data centres and supercomputers continues to grow.

However, as outlined by the Intelligence Advanced Research Projects Activity (IARPA), power and cooling for large-scale computing systems and data centres are becoming increasingly unmanageable. Conventional computing systems, which are based on complementary metal-oxide-semiconductor (CMOS) switching devices and normal-metal interconnects, are struggling to keep up with demand for faster and denser computational power delivered in an energy-efficient way.

Historically, Dennard scaling has enabled denser and more energy-efficient memory and computational systems, but Dennard scaling has slowed dramatically. This is where cold computing can have a significant impact, enabling organisations to build higher-performance computers that use less power, at low cost, simply by reducing the temperature of the system.

2. Is it a new concept?

There has actually been interest in cold computing for several decades. One early example is experimentation in the 1990s by an IBM group that included Gary Bronner, who later joined Rambus. While this work showed significant potential, it became evident that traditional CMOS scaling was able to keep pace with industry requirements.

In this research, Bronner and his colleagues found that low-temperature DRAMs operated three times faster than conventional DRAM.

Since then, cold computing research has continued to develop, and we’ve seen a lot of discussion around quantum computers too, which sit at the extreme end of cold computing. However, most quantum machines need a conventional error-correction processor near them, so it is likely that machines operating between 77K (-196°C) and 4K (-269°C) will be necessary before quantum computers come into use.

3. Who is working on it?

Currently, there are multiple research projects around cold computing and cold memory as well as quantum computing. The studies are showing promising progress toward high-speed systems capable of processing and analysing large volumes of data with substantially better energy efficiency.

We are doing work relating to DRAM and memory systems, but the usual suspects of the tech industry are involved too: Intel, IBM, Google and Microsoft. Governments around the world are also looking into this. In the US, IARPA is running the Cryogenic Computing Complexity (C3) initiative, which aims to establish superconducting computing as a long-term solution to the power problem and a successor to conventional room-temperature CMOS for high-performance computing (HPC).

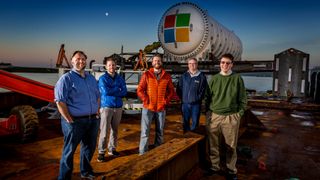

Currently, a practical example of cold operation is Microsoft's Project Natick, in which a portion of a data centre was sunk off the coast of Scotland's Orkney Islands. This is likely to be the first of many projects looking for ways to advance processing power while powering data centres efficiently, sustainably and at lower cost.

However, this is just scratching the surface of the opportunity. We are currently working with Microsoft to build memory systems as part of their effort to build a quantum computer. Since quantum processors have to operate at cryogenic temperatures, below 93.15K (-180°C), we are researching various methods to optimise memory interfaces and DRAM, including signalling interfaces that communicate efficiently at cryogenic temperatures.

4. What will cold computing offer the industry that conventional approaches cannot deliver?

Our need for denser and more energy-efficient computing means that developments will likely extend beyond conventional semiconductors to cryogenic operation, and eventually to superconductors and quantum mechanics. As Moore’s Law slows and conventional room-temperature data centres become obsolete, cold computing could expand computing capacity exponentially, making superconducting and quantum computing the future of supercomputers and HPC.

5. Will cold computing drive up power consumption and costs?

At 77K we believe we can get DRAM operating voltages down to between 0.4V and 0.6V, meaning substantially less power consumption; at this temperature and voltage, leakage goes away, so we hope to get perhaps four to ten additional years of scaling in memory performance and power. Cooling systems, however, will be more expensive and require more power to maintain temperatures and remove heat than conventional air-cooled systems. Optimizing this system to achieve a net power saving is a classic engineering trade-off. We believe that once the cold computing system is optimized, power savings of around two orders of magnitude may be possible.
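
The trade-off described here can be made concrete with a back-of-envelope model. The Python sketch below is a toy built on assumptions made for illustration, not taken from the interview: dynamic power scales with the square of supply voltage (P ∝ CV²f at fixed capacitance C and frequency f), the room-temperature baseline runs at 1.2V (the nominal DDR4 supply), leakage is ignored, and the cooler rejects heat at 300K with an efficiency expressed as a fraction of the Carnot limit. The function names are hypothetical, and the model does not attempt to reproduce the two-orders-of-magnitude estimate, which depends on system-level optimisations it leaves out.

```python
"""Toy model of the cold-DRAM power trade-off (illustrative assumptions only).

Assumed, not sourced: dynamic power ~ V**2 at fixed capacitance and frequency,
leakage ignored, heat rejected at 300 K, cooler efficiency given as a
fraction of the Carnot limit.
"""


def dynamic_power_ratio(v_new: float, v_ref: float) -> float:
    """Relative dynamic power when the supply drops from v_ref to v_new (P ~ V**2)."""
    return (v_new / v_ref) ** 2


def cooling_work_per_watt(t_cold: float, t_hot: float = 300.0,
                          fraction_of_carnot: float = 1.0) -> float:
    """Watts of input work needed to pump 1 W of heat out of a t_cold (kelvin) stage."""
    if not 0 < t_cold < t_hot:
        raise ValueError("need 0 < t_cold < t_hot")
    if not 0 < fraction_of_carnot <= 1:
        raise ValueError("fraction_of_carnot must be in (0, 1]")
    carnot_cop = t_cold / (t_hot - t_cold)  # ideal refrigerator coefficient of performance
    return 1.0 / (carnot_cop * fraction_of_carnot)


def wall_power_ratio(v_new: float, v_ref: float, t_cold: float,
                     fraction_of_carnot: float = 1.0) -> float:
    """Chip plus cooler power relative to a room-temperature baseline.

    The baseline's own cooling overhead is ignored, which favours the
    room-temperature case.
    """
    chip = dynamic_power_ratio(v_new, v_ref)
    cooler = cooling_work_per_watt(t_cold, fraction_of_carnot=fraction_of_carnot)
    return chip * (1.0 + cooler)


if __name__ == "__main__":
    V_REF = 1.2  # assumed baseline: nominal DDR4 supply voltage
    for v in (0.6, 0.4):
        for eff in (1.0, 0.2):  # ideal cooler vs. a hypothetical 20%-of-Carnot cooler
            r = wall_power_ratio(v, V_REF, t_cold=77.0, fraction_of_carnot=eff)
            print(f"V={v} V, cooler at {eff:.0%} of Carnot: total power x{r:.2f}")
```

Under these assumptions an ideal cooler at 77K needs roughly 2.9W of work per watt removed, so dropping to 0.6V only about breaks even (total ≈0.97× the baseline), while 0.4V brings total power to about 0.43×; with a hypothetical cooler at 20% of Carnot, both cases use more power than the baseline. This sensitivity to cooler efficiency illustrates why the system has to be optimized as a whole.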

6. What challenges need to be overcome to make cold computing practical?

Getting circuits to perform at these temperatures requires more engineering work before the technology can become practical. At 77K, digital functions translate well, but problems arise with analog functions, which no longer behave as they do at room temperature. So analog and mixed-signal parts of circuits may need to be redesigned to operate at cryogenic temperatures.

Currently, the development of logic functions using superconducting switches is in its early stages. While there is significant research being conducted, there is still a lot of work to be done. But here’s the good news – once a standard set of logic functions is defined, translating processor architectures and the software that runs on them should be fairly straightforward.

Eventually, cold computing will bridge the gap to quantum computing. But even then, there are significant challenges to quantum computing hardware and programming. Companies working on these projects are bringing together the smartest quantum physicists and computer architects to tackle these challenges. Even with the world’s best minds involved, additional breakthroughs are still needed before quantum computing can prove its potential. Demonstrating that a quantum machine is better at a specific task than a conventional one, a milestone known as ‘quantum supremacy’, is yet to be achieved.

7. When will this technology be practical?

Currently, there aren’t any memory technologies that operate at the same temperatures as quantum computers. Although potential memory technologies are in the early stages of development, it will take years for them to reach the cost per bit and capacity of current semiconductor memory. In the meantime, it will be necessary to use conventional CMOS and conventional DRAM optimized for cryogenic temperatures to bridge the gap to superconducting and quantum computing.

We’re currently building prototypes of 77K memory systems, which with continued testing and optimization, we believe could go to market in as little as three to five years’ time.

Craig Hampel, Chief Scientist at Rambus
