Why DeepMind's triumph over humanity should both excite and terrify you

Artificial superintelligence means immortality or extinction, probably this century

It's over. Mankind has been defeated by the very robots it helped create. Over the past week, an artificial intelligence took on humanity's greatest champion and returned victorious.

In this case, the battlefield was the ancient Chinese game of Go rather than a muddy meadow. But the result could end up being more consequential for humanity than any previous battle in our history.

Go For It

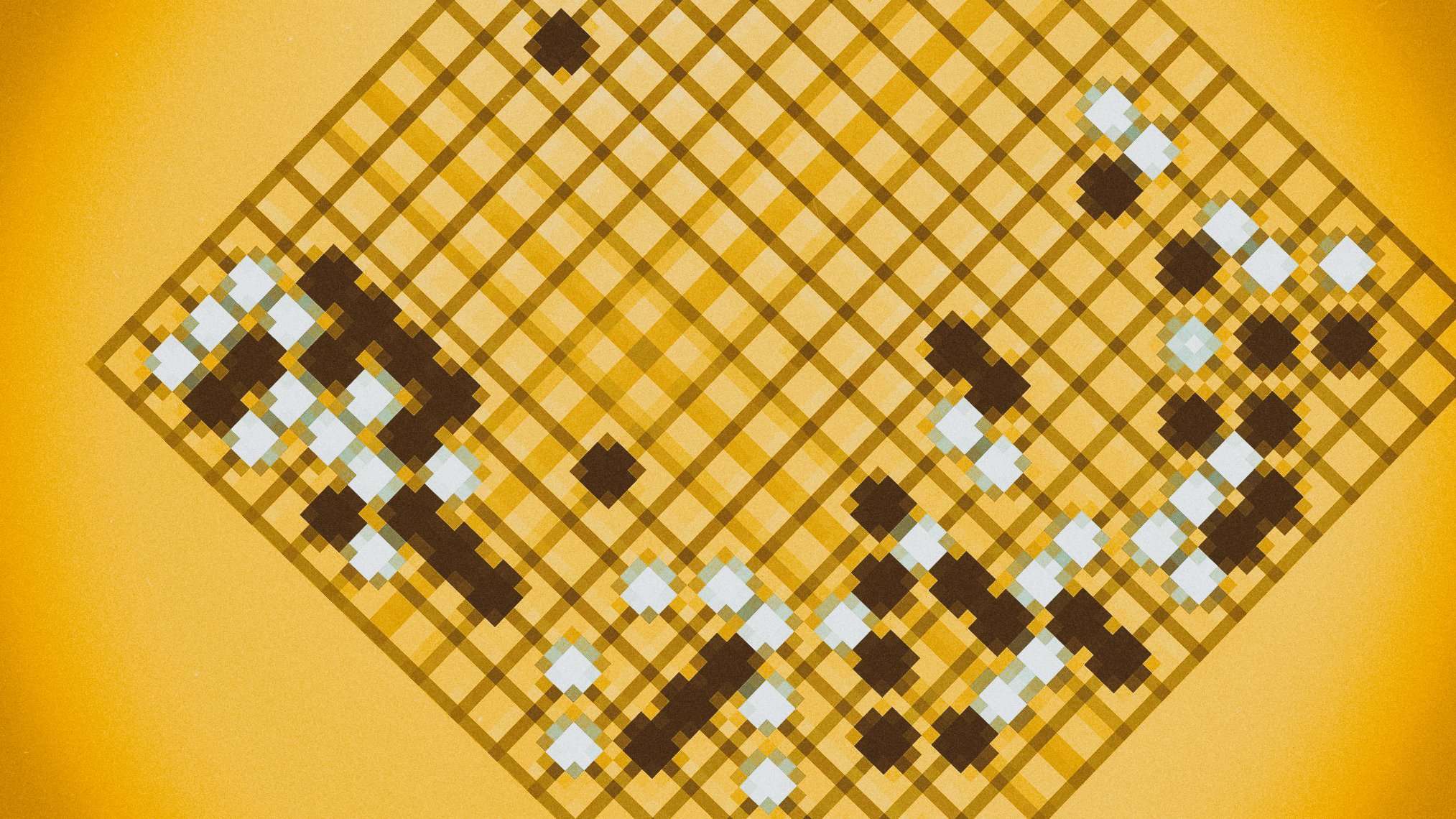

The AI in question is AlphaGo, a program that combines deep neural networks with tree search techniques and was trained through extensive experience of both human and computer play. It was created by DeepMind, a British firm that Google acquired in 2014.

Go, as you've probably learnt if you've been following the story, is much harder for computers to tackle than games like chess. Each turn offers far more possible moves than chess does, so the number of possible games explodes. That makes traditional AI methods, which win by running through the possibilities, prohibitively slow.
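
A rough back-of-the-envelope sketch helps show why the numbers explode. A brute-force search that considers every legal move at every turn visits roughly b^d move sequences, where b is the branching factor (moves available per turn) and d is the length of a game. The figures below are commonly cited approximations, not exact counts: about 35 moves per turn over about 80 moves for chess, and about 250 over 150 for Go. The function name is purely illustrative.

```python
import math

def game_tree_exponent(branching: float, depth: int) -> float:
    """Return log10(branching ** depth): the order of magnitude of the
    number of move sequences an exhaustive search would have to visit."""
    # Work in log space so the huge powers never have to be materialised.
    return depth * math.log10(branching)

# Approximate, commonly cited figures (assumptions, not exact values):
# chess: ~35 legal moves per turn, ~80 moves per game
# Go:    ~250 legal moves per turn, ~150 moves per game
chess = game_tree_exponent(35, 80)
go = game_tree_exponent(250, 150)

print(f"chess: ~10^{chess:.0f} move sequences")
print(f"go:    ~10^{go:.0f} move sequences")
```

On these rough figures, chess comes out at around 10^124 sequences and Go at around 10^360, a gap of more than 200 orders of magnitude. This is why programs like AlphaGo rely on learned estimates to decide which branches are worth exploring rather than trying to enumerate them.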

It's so hard, in fact, that most AI experts thought it would be another five to ten years before computers could beat the best human players. Even AlphaGo's creators were surprised by its victory, with DeepMind co-founder Demis Hassabis telling reporters he was "stunned and speechless" at the press conference following the first match in the best-of-five series.

The Last Milestone Of Its Kind

The win, which follows AI dominance of games like chess and draughts over the past few decades, is probably the last milestone of its kind. Murray Campbell, an IBM researcher who worked on Deep Blue, the computer that beat Garry Kasparov at chess in 1997, told the LA Times: "In a way, it's the end of an era. They've shown that board games are more or less done and it's time to move on."

So what's next? Hassabis shared some of his plans for DeepMind in an interview with The Verge, saying that the next frontier is likely to be videogames. AlphaGo's victory played out in South Korea, where StarCraft is hugely popular, and StarCraft is a game at which humans are still far better than computers. "Strategy games require a high level of strategic capability in an imperfect information world," said Hassabis.

But he added that games are just a testbed for bigger, real-world problems. "The aim of DeepMind is not just to beat games, fun and exciting though that is," Hassabis said.

"We're concentrating at the moment on things like healthcare and recommendation systems." In the longer term, DeepMind's learning-based approach could yield far more. "I think it'd be cool if one day an AI was involved in finding a new particle," he added.

Endgame For Humanity

AlphaGo represents what experts call "narrow" or "weak" AI. It can rival humans at a single task, but can't hope to compete in others. That's distinct from "strong" or "general" AI, which has a breadth of intelligence similar to humans and is the one that tends to crop up in science fiction. The former is, obviously, a necessary stepping stone on the path to the latter.

Yet artificial general intelligence terrifies the people who know the most about it. Stephen Hawking and Elon Musk were among a group of prominent scientists and technologists who signed an open letter in January 2015 calling for more research into the societal implications of further AI development. Musk co-founded a nonprofit AI research firm called OpenAI in December 2015 for that very reason.

They're terrified because they see the end of humanity in the middle distance. Once human-level artificial general intelligence has been attained, there's essentially no chance of halting development. The AI would almost certainly begin working on improving itself, and our fleshy brains would be rapidly outpaced by our digital creation.

Meanwhile, we'd increasingly have difficulty understanding the AI, in the same way that human motives seem incomprehensible to our pets. "A super-intelligent AI will be extremely good at accomplishing its goals," Hawking warned in a recent AMA on Reddit, "and if those goals aren't aligned with ours, we're in trouble."

Tellingly, when DeepMind agreed to the Google acquisition, it demanded that the search giant set up an AI safety and ethics review board to ensure the technology is developed safely. The board will devise rules for how Google can and can't use AI.

What Is Intelligence, Anyway?

In a 2011 interview, DeepMind co-founder Shane Legg said that, assuming no major wars or disasters and beneficial political and economic development, there was a 50 percent chance of human-level artificial general intelligence being reached by 2028 (the median response among AI experts is 2040).

"When a machine can learn to play a really wide range of games from perceptual stream input and output, and transfer understanding across games, I think we'll be getting close," he said.

Yet he identified major roadblocks still to come. "At the moment we don't agree on what intelligence is or how to measure it," he said. "How do we make something safe when we don't properly understand what that something is or how it will work?"

"Eventually, I think human extinction will probably occur, and technology will likely play a part in this. It's my number one risk for this century."